
depthstream-accelerator-ros2-integrated-monocular-depth-inference repository: monocular_depth


Repository Summary

| Field | Value |
|---|---|
| Description | DepthStream Accelerator: A TensorRT-optimized monocular depth estimation tool with ROS2 integration for C++. It offers high-speed, accurate depth perception, perfect for real-time applications in robotics, autonomous vehicles, and interactive 3D environments. |
| Checkout URI | https://github.com/jagennath-hari/depthstream-accelerator-ros2-integrated-monocular-depth-inference.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2025-03-17 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| monocular_depth | 0.0.0 |


DepthStream-Accelerator-ROS2-Integrated-Monocular-Depth-Inference

DepthStream Accelerator is a TensorRT-optimized monocular depth estimation tool with ROS2 integration, written in C++. It provides high-speed, accurate depth perception suited to real-time applications in robotics, autonomous vehicles, and interactive 3D environments.

🏁 Dependencies

1) NVIDIA Driver
2) CUDA Toolkit
3) cuDNN
4) TensorRT
5) OpenCV built with CUDA support
6) Miniconda
7) ROS 2 Humble
8) ZoeDepth

⚙️ Creating the Engine File

ONNX File

Create the ONNX file by running `cd monocular_depth/scripts/ZoeDepth/ && python trt_convert.py`. The ONNX file is saved in the working directory as `zoe_nk.onnx`. Configure the input dimensions (h, w) to match the dimensions of your input images.
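Because the engine is built for a fixed input size, the chosen (h, w) determines how large the input buffer is. A minimal sketch of that arithmetic, assuming a batch of 1, three color channels, and the fp32 CHW input layout requested by `--inputIOFormats=fp32:chw` when the engine is built; the helper name `input_tensor_size` is hypothetical:

```python
from math import prod


def input_tensor_size(h, w, batch=1, channels=3, bytes_per_elem=4):
    """Return the NCHW shape and byte size of a fixed-size fp32 input tensor.

    The defaults (batch 1, 3 channels, 4-byte fp32 elements) are assumptions.
    """
    shape = (batch, channels, h, w)
    return shape, prod(shape) * bytes_per_elem


# A 480x640 input: 1 * 3 * 480 * 640 floats at 4 bytes each.
shape, nbytes = input_tensor_size(480, 640)
print(shape, nbytes)  # (1, 3, 480, 640) 3686400
```

Any change to (h, w) changes this size, which is why the ONNX file and the engine have to be regenerated for a new input resolution.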

You can download a prebuilt .onnx file from LINK.

TensorRT engine creation

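Once the .onnx file has been created, go to the TensorRT `trtexec` directory, which is usually `/usr/src/tensorrt/bin/` (`cd /usr/src/tensorrt/bin/`). Then create the engine file with the command below; the build can take a few minutes. The command reads `zoe_nk.onnx` from the current directory, so either copy the file there or pass its full path to `--onnx`.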

`./trtexec --onnx=zoe_nk.onnx --builderOptimizationLevel=3 --useSpinWait --useRuntime=full --useCudaGraph --precisionConstraints=obey --allowGPUFallback --tacticSources=+CUBLAS,+CUDNN,+JIT_CONVOLUTIONS,+CUBLAS_LT --inputIOFormats=fp32:chw --outputIOFormats=fp32:chw --sparsity=enable --layerOutputTypes=fp32 --layerPrecisions=fp32 --saveEngine=zoe_nk.trt`
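The `--inputIOFormats=fp32:chw` flag requests a planar (CHW) fp32 input binding, whereas camera images usually arrive as interleaved (HWC) 8-bit pixels. A minimal pure-Python sketch of that repacking step, for illustration of the memory layout only; it assumes BGR input (OpenCV's default order) that should become RGB and scaling to [0, 1], neither of which this README confirms, and the function name is hypothetical:

```python
from array import array


def hwc_u8_to_chw_f32(pixels, height, width, channels=3, reverse_channels=True):
    """Repack interleaved HWC uint8 pixels into a planar CHW float32 buffer.

    Assumptions (not confirmed by the README): values are scaled to [0, 1],
    and the channel order is reversed (e.g. BGR -> RGB) when reverse_channels is True.
    """
    if len(pixels) != height * width * channels:
        raise ValueError("pixel buffer size does not match height * width * channels")
    plane = height * width
    out = array("f", [0.0]) * (channels * plane)  # 'f' is a 32-bit C float on common platforms
    for y in range(height):
        for x in range(width):
            src = (y * width + x) * channels  # start of this pixel in HWC order
            for c in range(channels):
                src_c = channels - 1 - c if reverse_channels else c
                # Destination: plane c, then row-major position inside the plane.
                out[c * plane + y * width + x] = pixels[src + src_c] / 255.0
    return out


# One row, two pixels in BGR order: pure red, then pure blue.
chw = hwc_u8_to_chw_f32(bytes([0, 0, 255, 255, 0, 0]), height=1, width=2)
print(list(chw))  # [1.0, 0.0, 0.0, 0.0, 0.0, 1.0]
```

In the C++ node this conversion would more likely run on the GPU; the sketch only shows where each byte ends up.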

🖼️ Running Depth Estimation

Build the ROS2 workspace

From the root of the ROS2 workspace, run `colcon build --symlink-install --cmake-args=-DCMAKE_BUILD_TYPE=Release --parallel-workers $(nproc)`.

Inference

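Source the workspace first (`source install/setup.bash`), then launch the node. Set `trt_path` to the engine file (a full path is safest if `zoe_nk.trt` is not in the current directory) and `image_topic` to your camera's image topic.

With GUI:

`ros2 launch monocular_depth mono_depth.launch.py trt_path:=zoe_nk.trt image_topic:=/rgb/image_rect_color gui:=true`

Without GUI:

`ros2 launch monocular_depth mono_depth.launch.py trt_path:=zoe_nk.trt image_topic:=/rgb/image_rect_color gui:=false`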
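With `gui:=true` the depth map is shown on screen, and a float depth map has to be scaled to 8-bit values before it can be displayed. The sketch below uses min-max normalization, which is one common choice and an assumption here, since the README does not say how the GUI scales or colorizes depth. The function name is hypothetical:

```python
import math


def depth_to_u8(depth):
    """Min-max normalize a flat list of depth values to 0..255 for display.

    Non-finite values, and every pixel of a constant map, are left at 0.
    """
    finite = [d for d in depth if math.isfinite(d)]
    out = bytearray(len(depth))
    if not finite:
        return bytes(out)
    lo, hi = min(finite), max(finite)
    span = hi - lo
    if span == 0:
        return bytes(out)  # flat map: nothing to stretch
    for i, d in enumerate(depth):
        if math.isfinite(d):
            out[i] = round(255 * (d - lo) / span)
    return bytes(out)


print(list(depth_to_u8([1.0, 2.0, 3.0, float("nan")])))  # [0, 128, 255, 0]
```

Per-frame min-max scaling makes the display flicker when the depth range changes; a fixed range per scene is an alternative if that matters.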

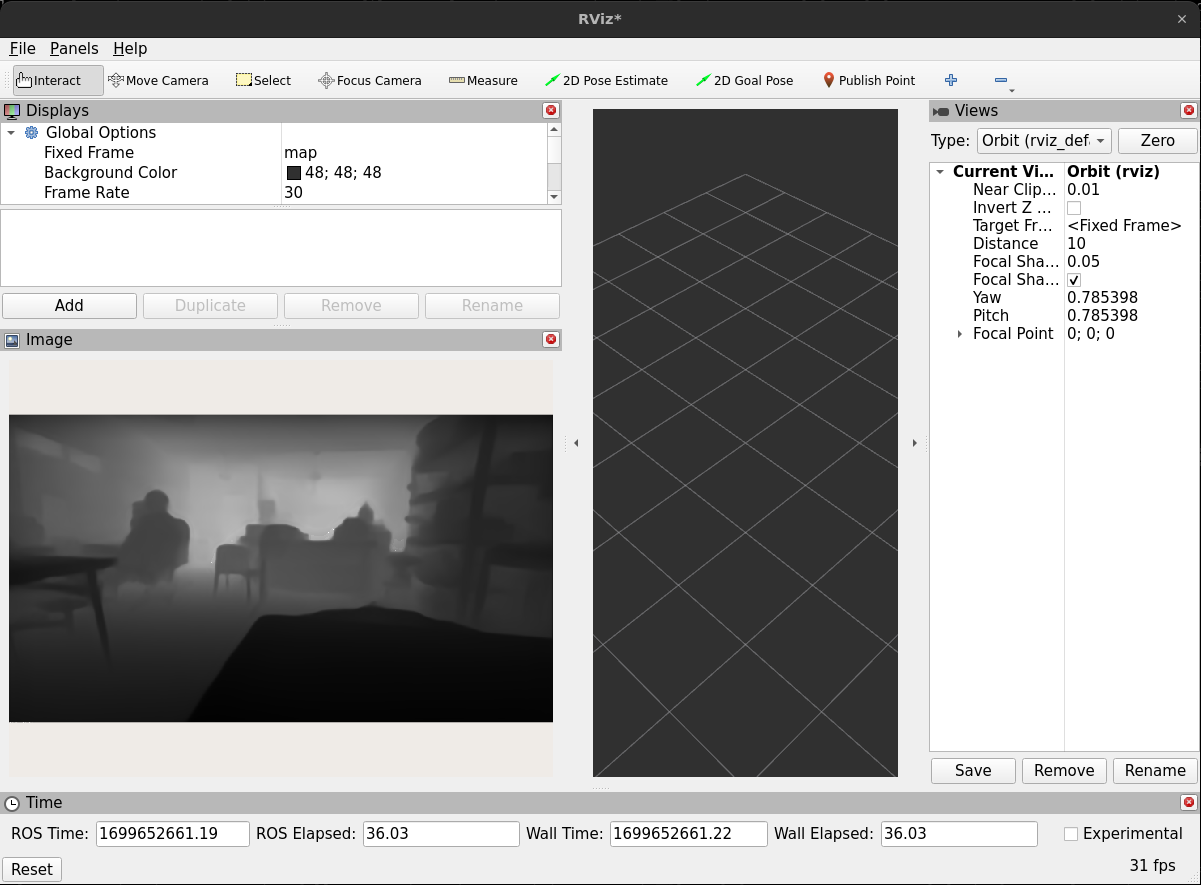

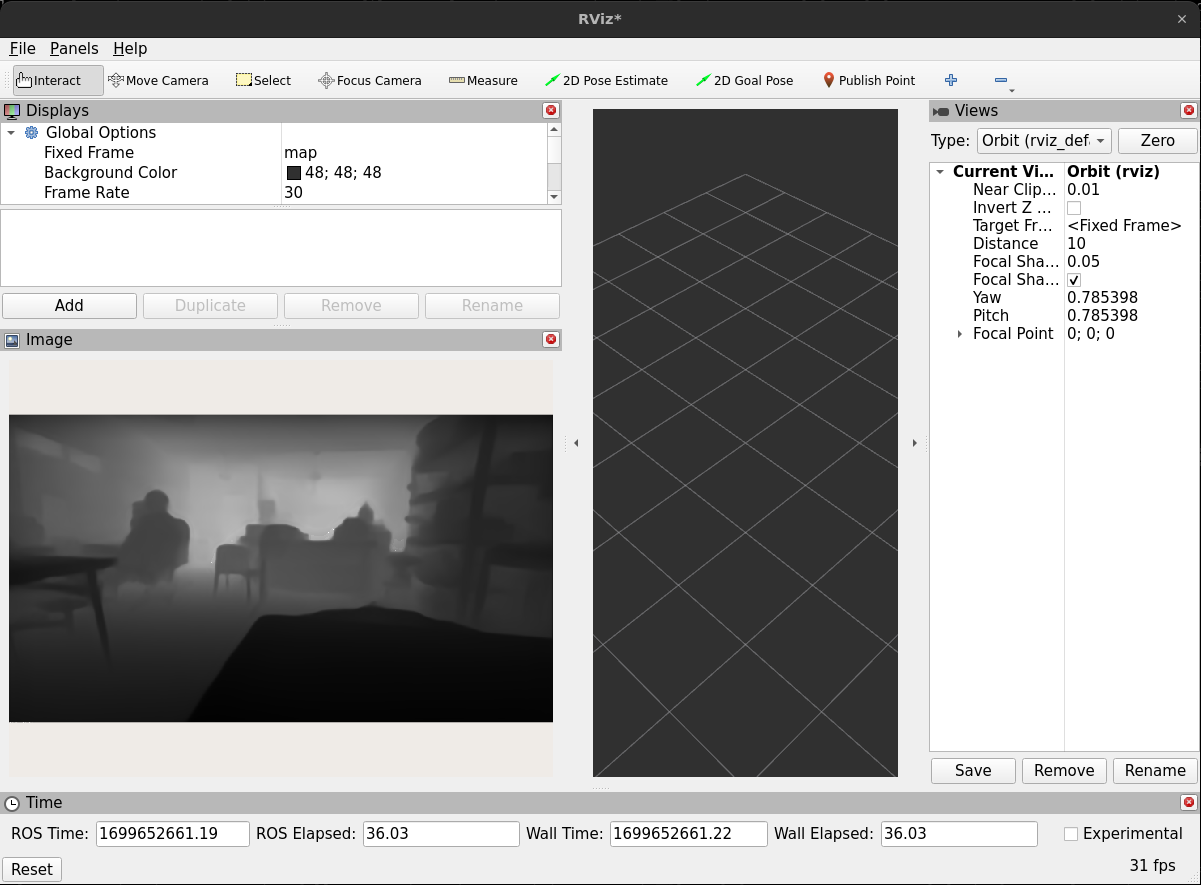

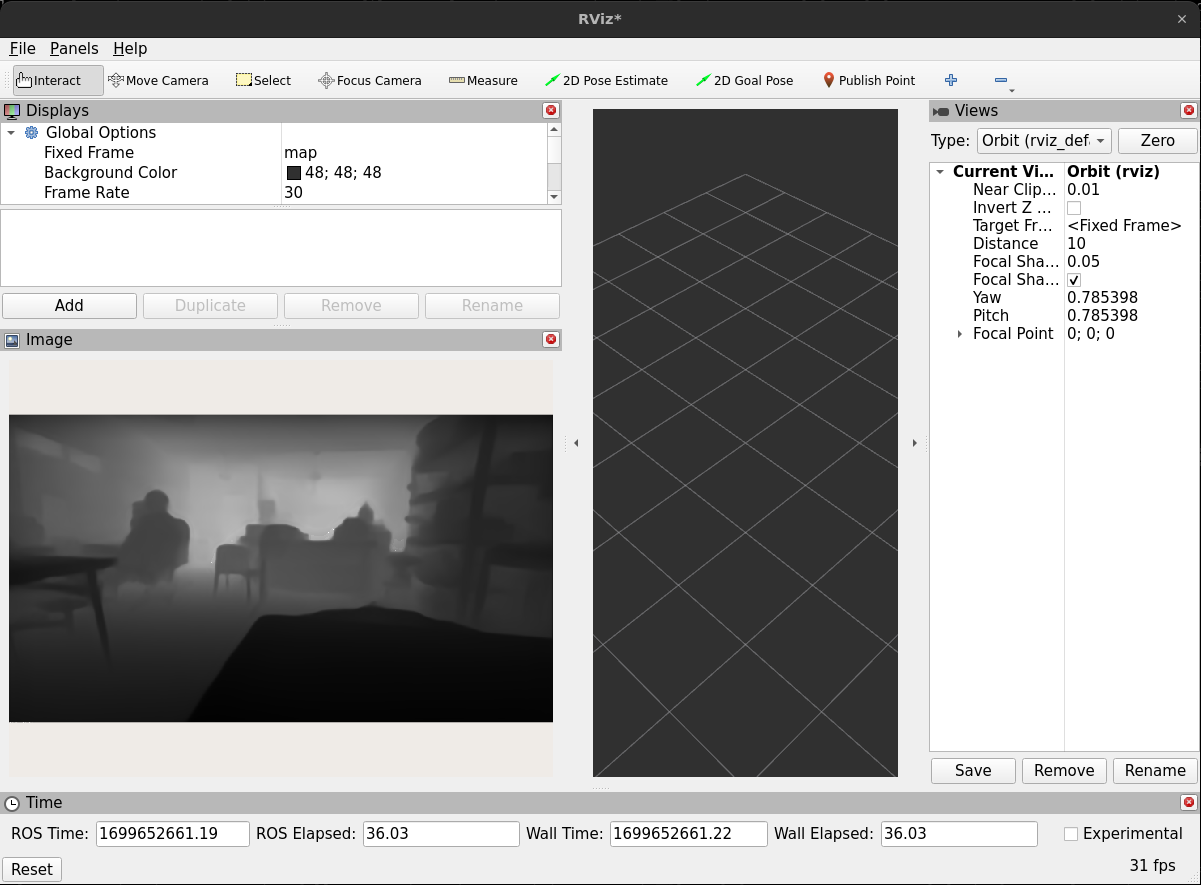

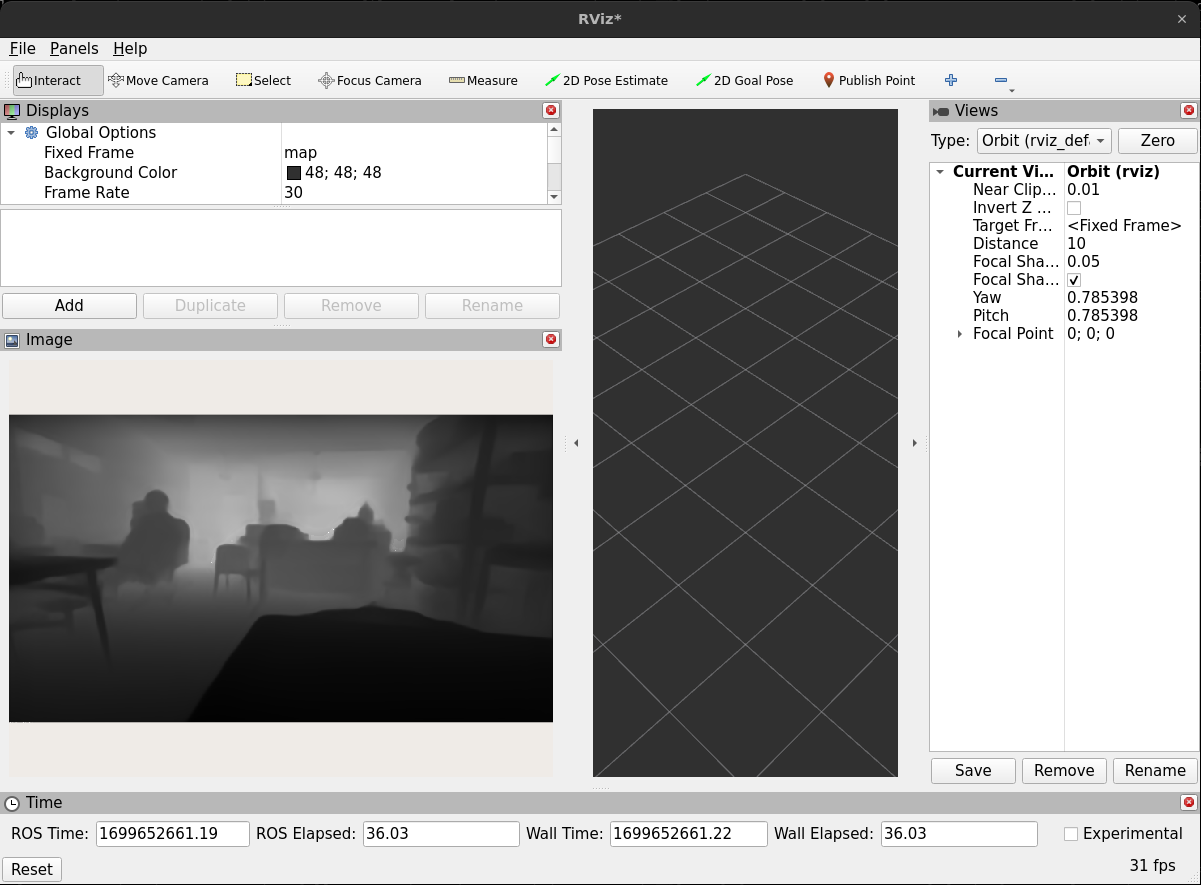

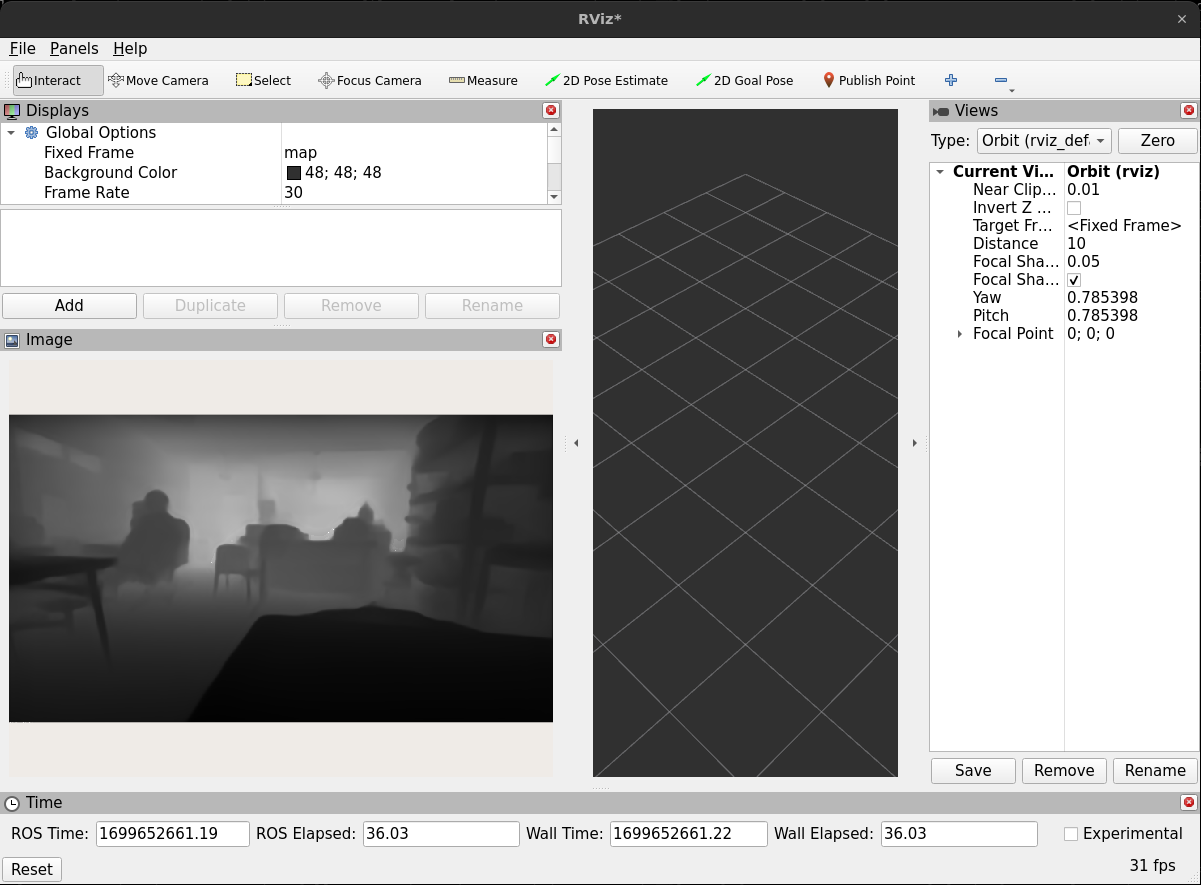

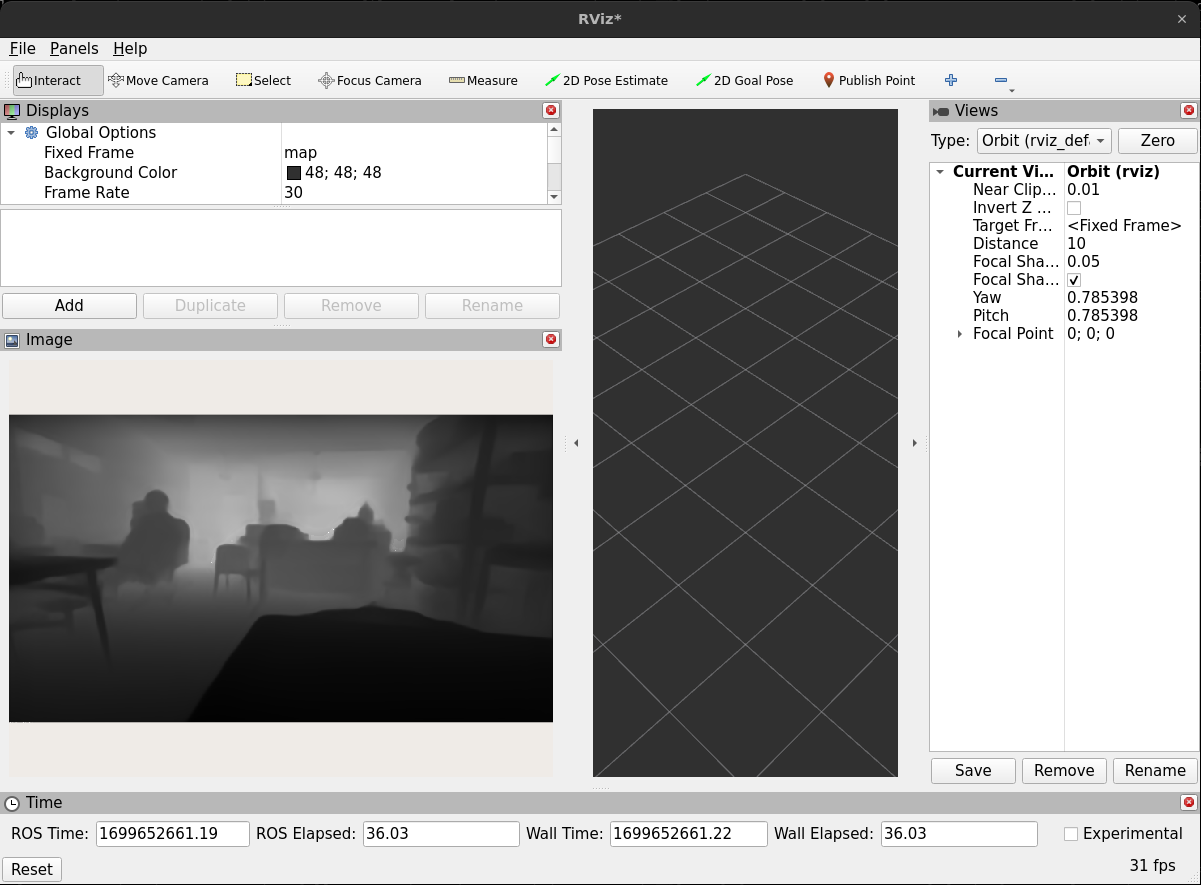

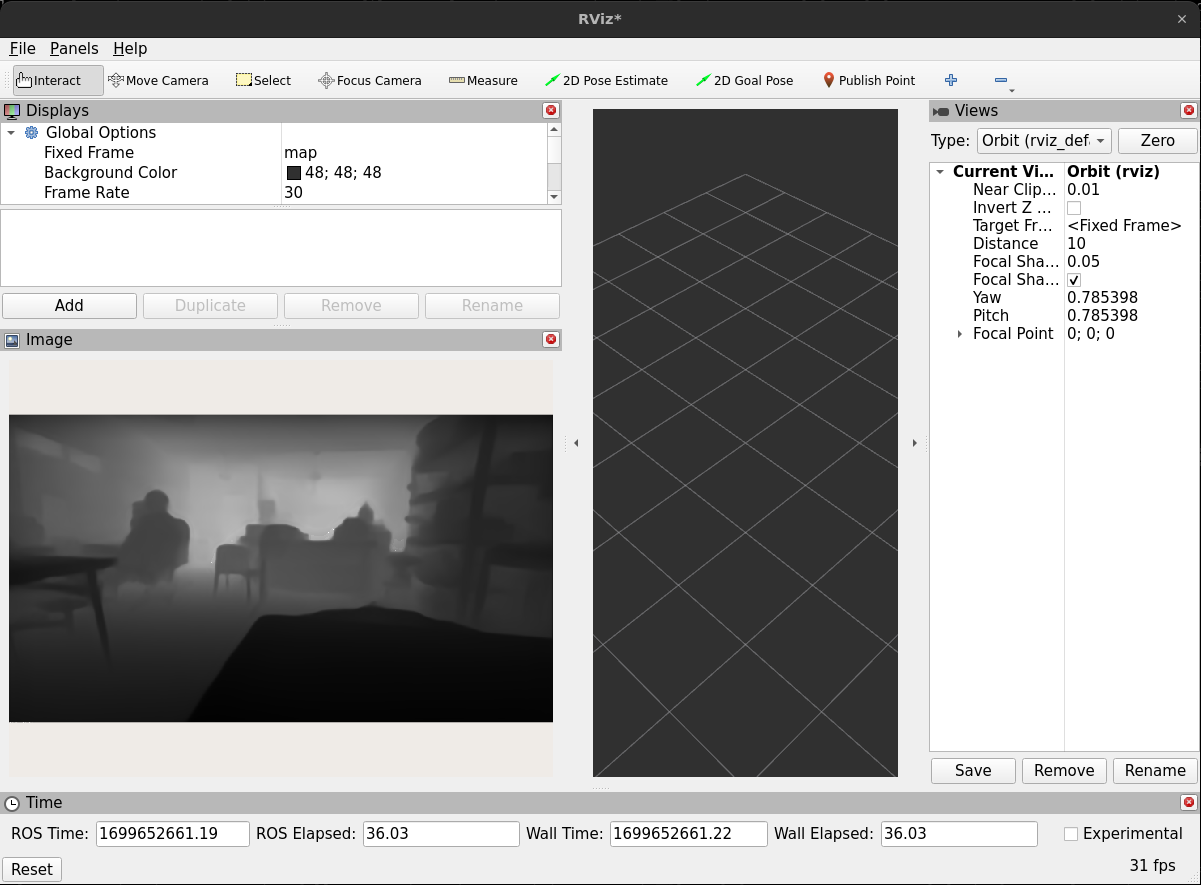

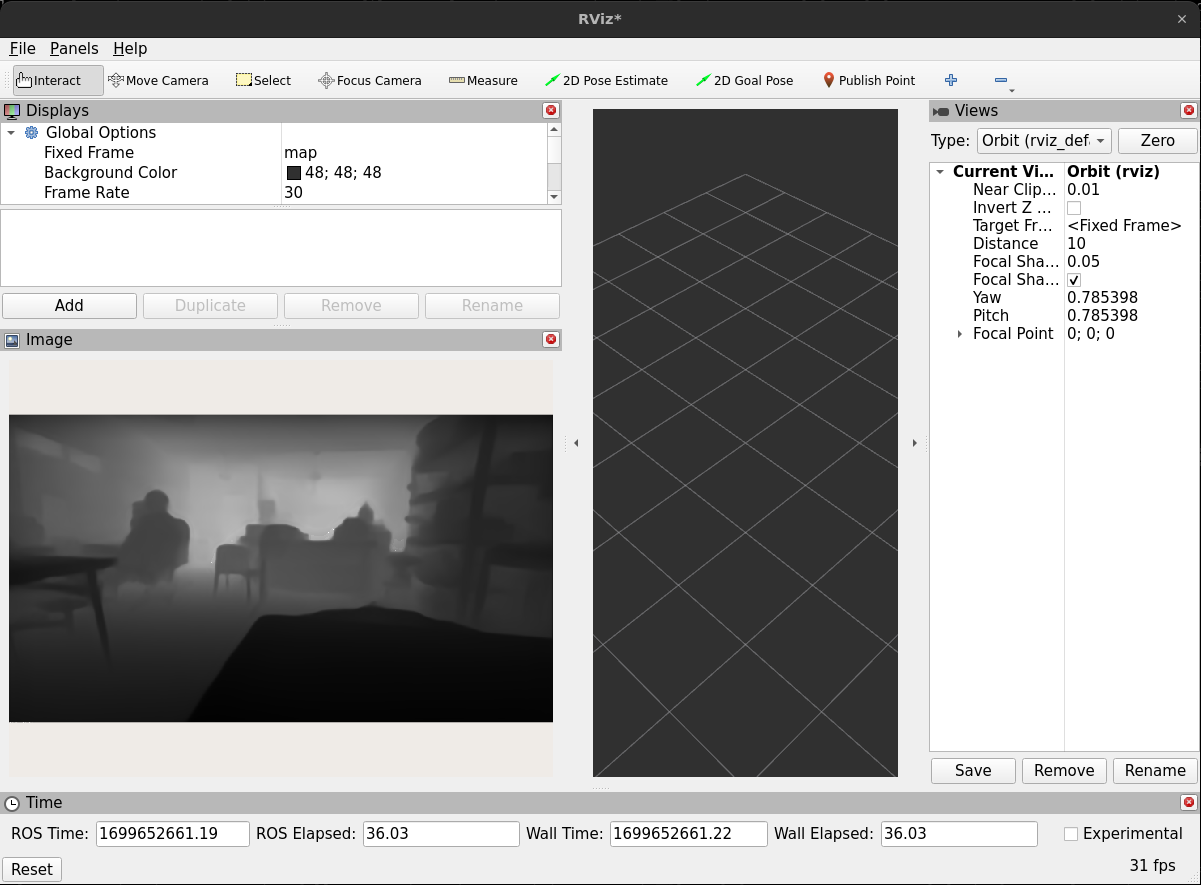

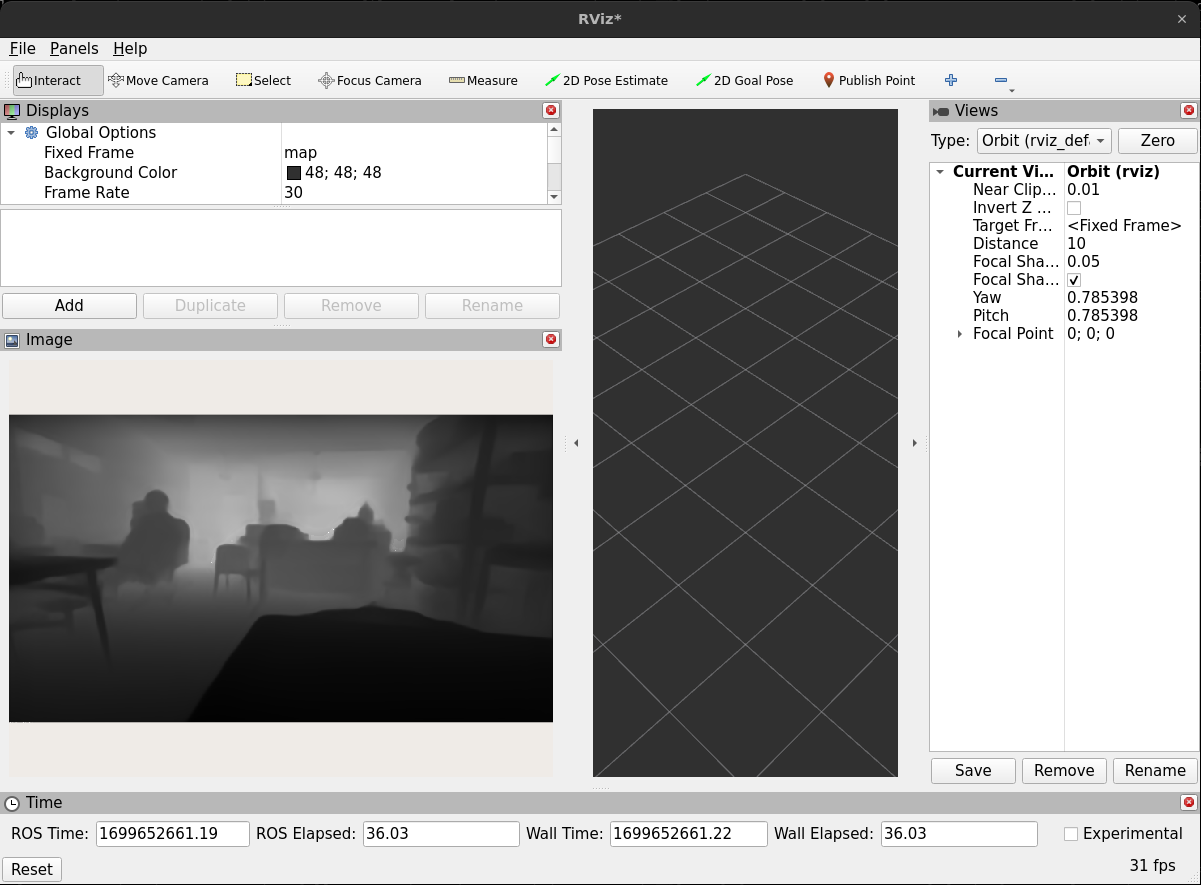

GUI

DEPTH MAP

💬 ROS2 Message

The depth map is published as a `sensor_msgs/msg/Image` message on the `/mono_depth/depthMap` topic.
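A subscriber to `/mono_depth/depthMap` reads depth values out of the message's flat `data` byte array, using `step` (bytes per row) to find each row. A minimal sketch of both packing and reading, assuming single-channel 32-bit float depth (`32FC1` encoding) in little-endian order; the README does not state the encoding, so check the `encoding` field of a received message. `DepthImage` is a hypothetical stand-in for the `sensor_msgs/msg/Image` fields so the example runs without ROS:

```python
import struct
from dataclasses import dataclass


@dataclass
class DepthImage:
    """Hypothetical stand-in mirroring the sensor_msgs/msg/Image fields used here."""
    height: int
    width: int
    encoding: str
    is_bigendian: int
    step: int  # bytes per row
    data: bytes


def pack_depth(depth, height, width):
    """Pack a row-major list of floats as an (assumed) 32FC1, little-endian image."""
    if len(depth) != height * width:
        raise ValueError("depth size does not match height * width")
    data = struct.pack(f"<{len(depth)}f", *depth)
    return DepthImage(height, width, "32FC1", 0, width * 4, data)


def depth_at(msg, row, col):
    """Read one float32 depth value, honoring step and byte order."""
    fmt = ">f" if msg.is_bigendian else "<f"
    return struct.unpack_from(fmt, msg.data, row * msg.step + col * 4)[0]


msg = pack_depth([1.0, 2.5, 3.0, 4.25], height=2, width=2)
print(msg.step, depth_at(msg, 1, 0))  # 8 3.0
```

Indexing through `step` rather than `width * 4` keeps the reader correct if a publisher pads its rows.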

⚠️ Note

1) There is a known problem with MiDaS that prevents tracing the network.
2) If the input image dimensions change, update the source code to match the new input dimensions.
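Because the engine is built for one fixed input size, a frame with different dimensions will not match the input buffer. A minimal sketch of a fail-fast check a node could run on each incoming frame, assuming an engine input of shape (1, 3, h, w) and HWC frames; the function name and error message are hypothetical and not taken from the package's source code:

```python
def check_frame_dims(frame_shape, engine_input_shape):
    """Raise ValueError if an HWC frame does not match a fixed NCHW engine input."""
    _, channels, h, w = engine_input_shape
    fh, fw, fc = frame_shape
    if (fh, fw, fc) != (h, w, channels):
        raise ValueError(
            f"frame is {fh}x{fw}x{fc} but the engine expects {h}x{w}x{channels}; "
            "re-export the ONNX file and rebuild the engine for the new size"
        )


check_frame_dims((480, 640, 3), (1, 3, 480, 640))  # matching dims: returns None
```

Failing loudly on the first mismatched frame is easier to diagnose than silently reading past the end of a fixed-size buffer.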
