Repository Summary

| Field | Value |
|---|---|
| Description | Automated, hardware-independent Hand-Eye Calibration |
| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2025-11-30 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| easy_handeye | 0.4.3 |
| easy_handeye_msgs | 0.4.3 |
| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

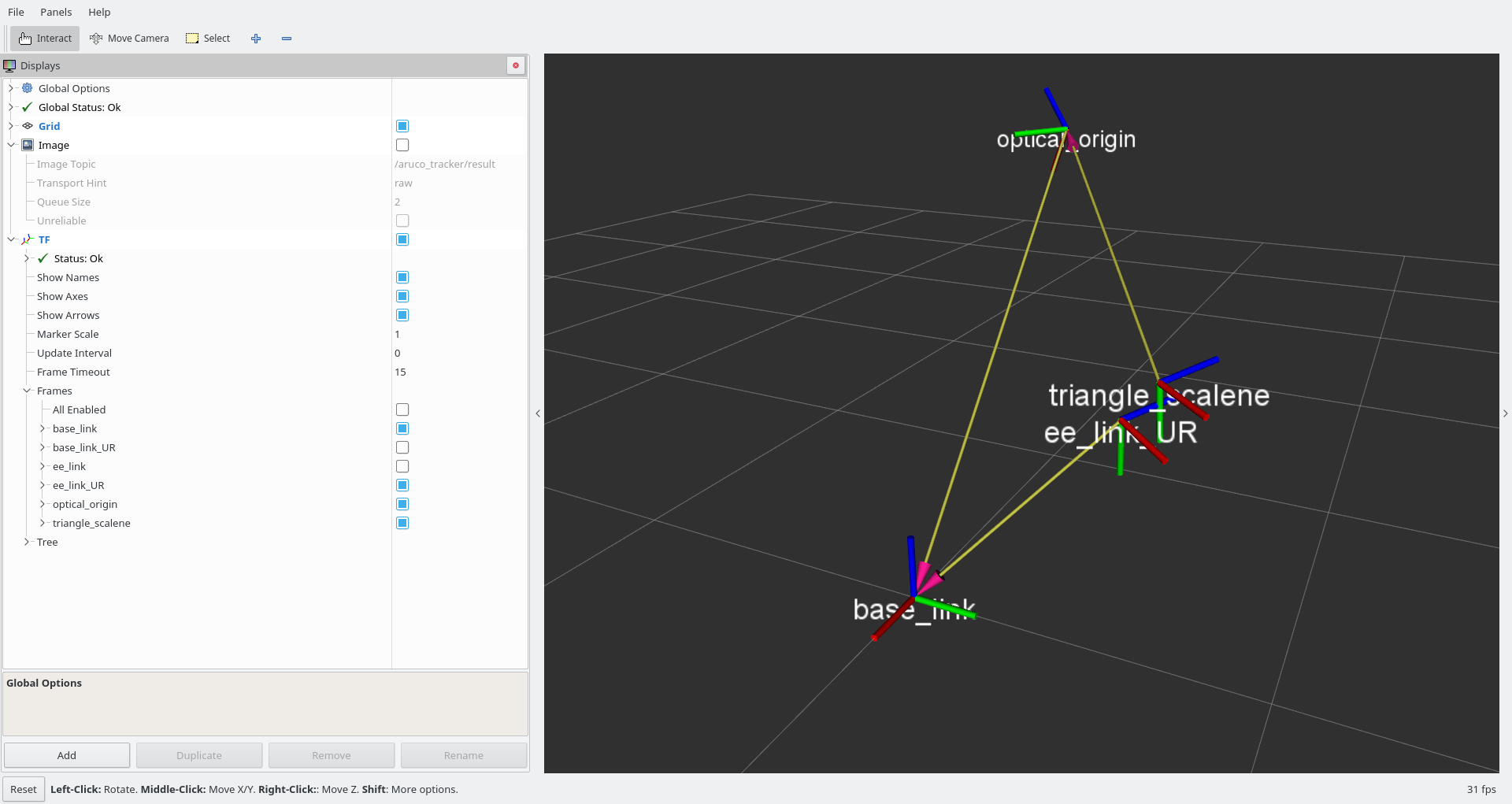

This package provides functionality and a GUI to:

- sample the robot position and tracking system output via tf,
- compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,
- store the result of the calibration,
- publish the result of the calibration procedure as a tf transform at each subsequent system startup,
- (optional) automatically move a robot around a starting pose via MoveIt! to acquire the samples.
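Publishing a calibration matrix as a tf transform means expressing it as a translation plus a unit quaternion in (x, y, z, w) order. The sketch below illustrates that conversion in plain Python. It is not taken from the package's code: the function name `matrix_to_translation_quaternion` and the example numbers are assumptions made for illustration only.

```python
import math


def matrix_to_translation_quaternion(T):
    """Split a 4x4 homogeneous transform (nested lists) into a translation
    (x, y, z) and a unit quaternion (x, y, z, w), the component order tf uses.

    Hypothetical helper for illustration; assumes the upper-left 3x3 block
    of T is a proper rotation matrix.
    """
    r = [row[:3] for row in T[:3]]
    translation = (T[0][3], T[1][3], T[2][3])
    trace = r[0][0] + r[1][1] + r[2][2]
    # Branch on the largest diagonal term to keep the square root well conditioned.
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)
        qw = 0.25 * s
        qx = (r[2][1] - r[1][2]) / s
        qy = (r[0][2] - r[2][0]) / s
        qz = (r[1][0] - r[0][1]) / s
    elif r[0][0] > r[1][1] and r[0][0] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[0][0] - r[1][1] - r[2][2])
        qw = (r[2][1] - r[1][2]) / s
        qx = 0.25 * s
        qy = (r[0][1] + r[1][0]) / s
        qz = (r[0][2] + r[2][0]) / s
    elif r[1][1] > r[2][2]:
        s = 2.0 * math.sqrt(1.0 + r[1][1] - r[0][0] - r[2][2])
        qw = (r[0][2] - r[2][0]) / s
        qx = (r[0][1] + r[1][0]) / s
        qy = 0.25 * s
        qz = (r[1][2] + r[2][1]) / s
    else:
        s = 2.0 * math.sqrt(1.0 + r[2][2] - r[0][0] - r[1][1])
        qw = (r[1][0] - r[0][1]) / s
        qx = (r[0][2] + r[2][0]) / s
        qy = (r[1][2] + r[2][1]) / s
        qz = 0.25 * s
    return translation, (qx, qy, qz, qw)


# Made-up calibration: 90 degree rotation about z, offset by (0.5, 0.0, 1.2) m.
example = [
    [0.0, -1.0, 0.0, 0.5],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.2],
    [0.0, 0.0, 0.0, 1.0],
]
translation, quaternion = matrix_to_translation_quaternion(example)
print("translation:", translation)
print("quaternion (x, y, z, w):", quaternion)
```

The two returned tuples correspond to the translation and rotation fields of a tf transform message; the parent and child frame names would come from your own setup.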

The goal is to make the calibration easy and straightforward to perform, and to keep it up-to-date throughout the system. Two launch files are provided to be run: one performs the calibration, the other checks its result. A further launch file can be included in your own launch files to use the result of the calibration transparently: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the easy_handeye_demo package. This package also serves as an example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

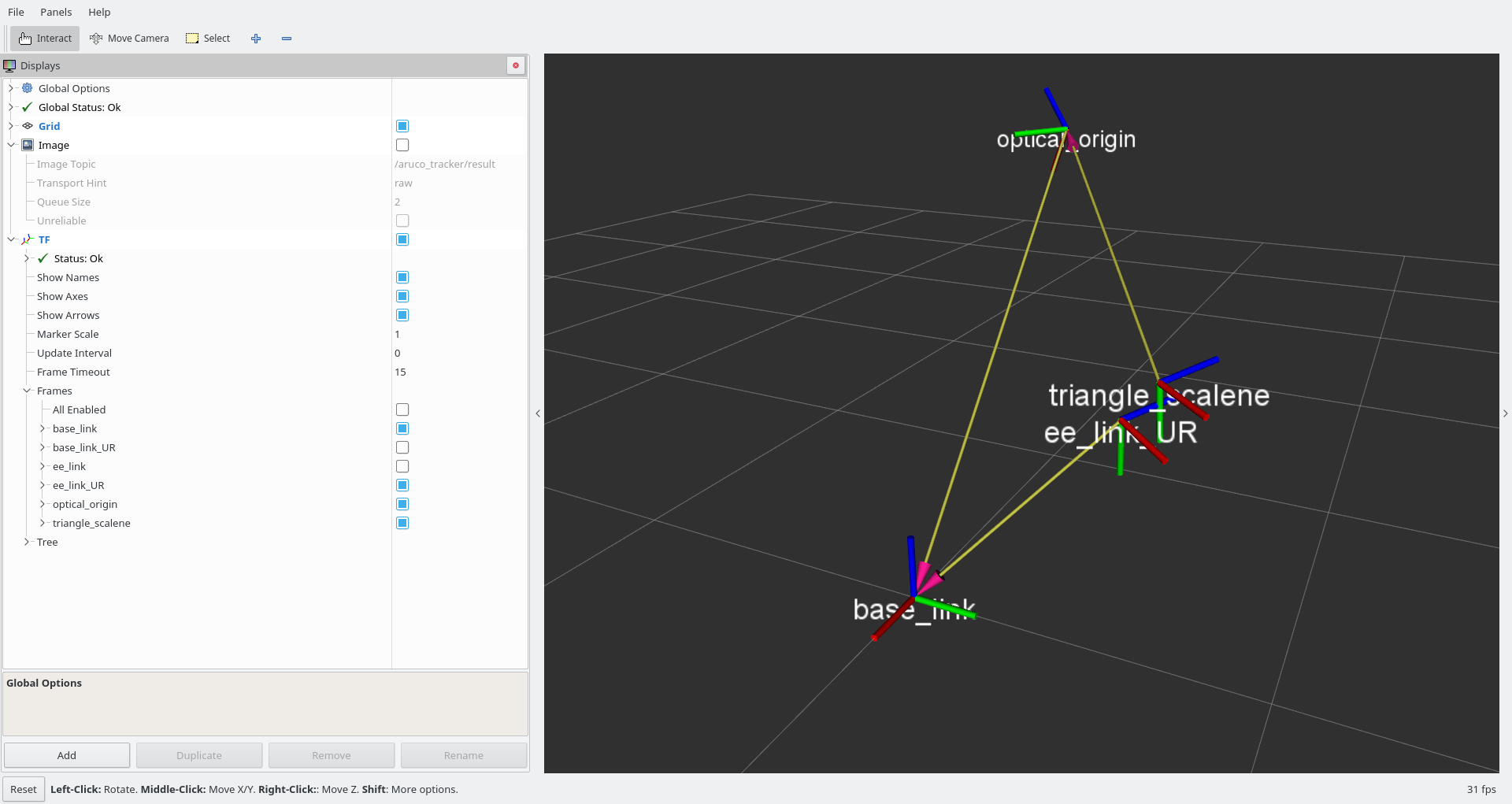

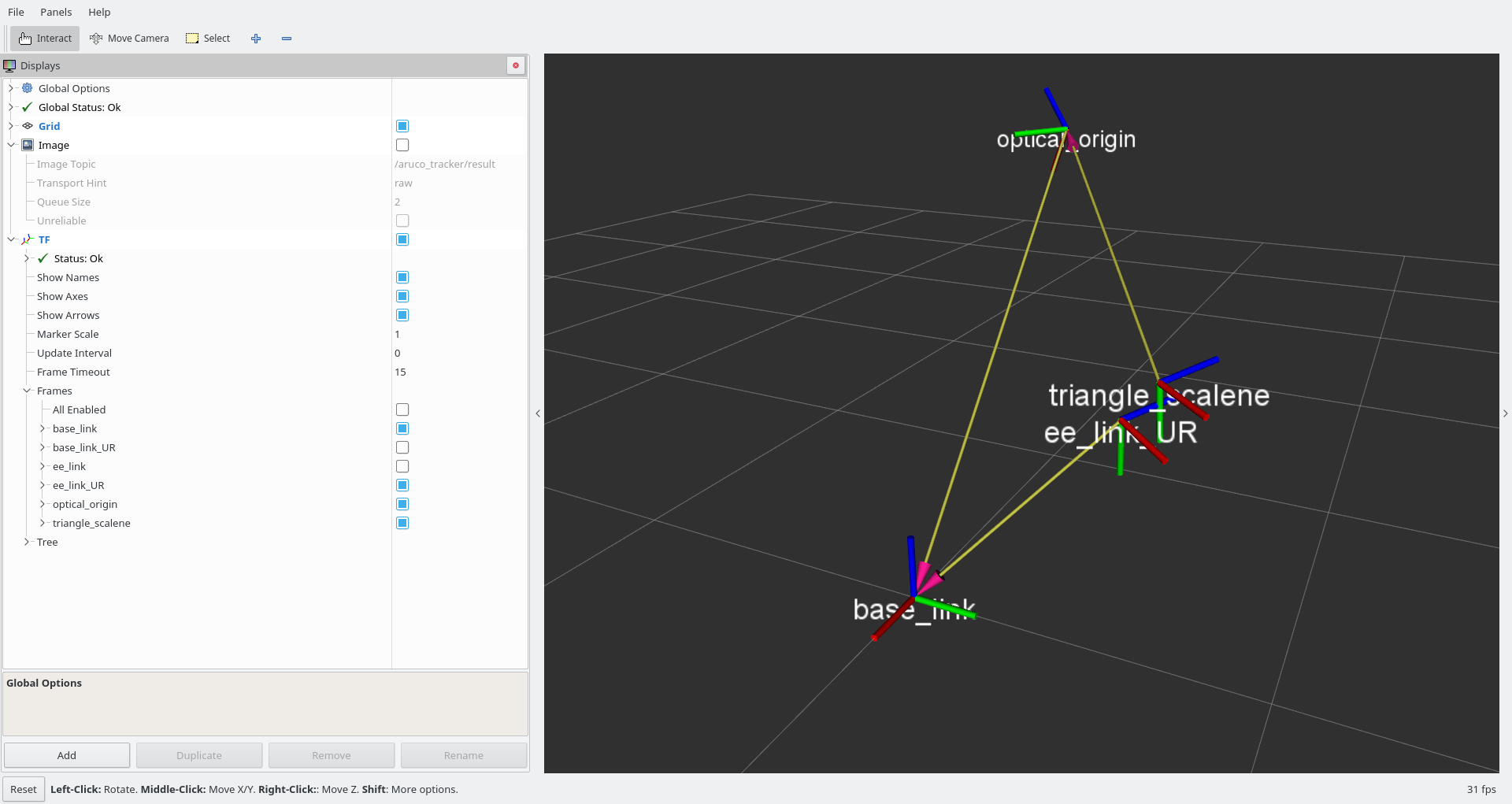

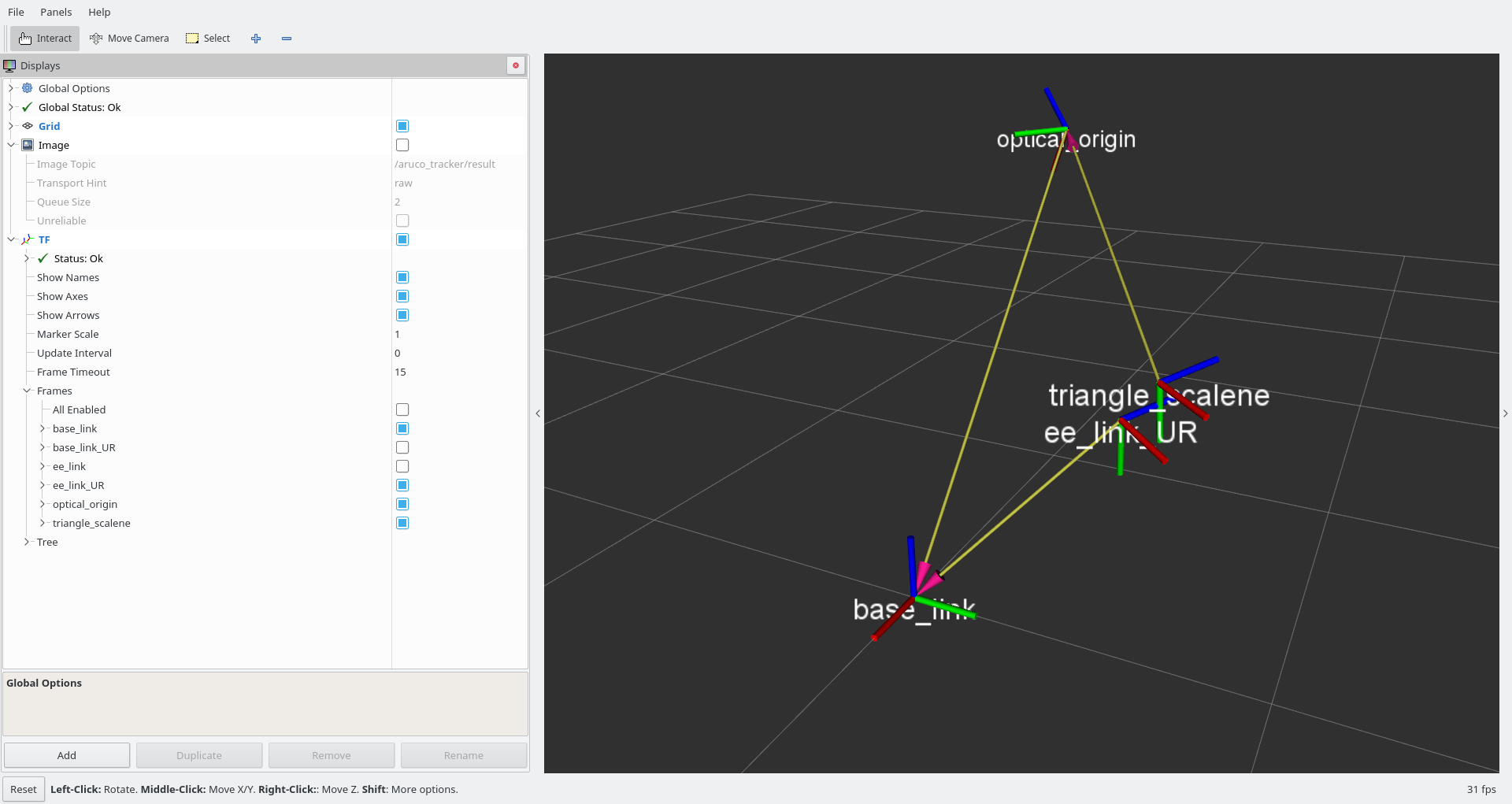

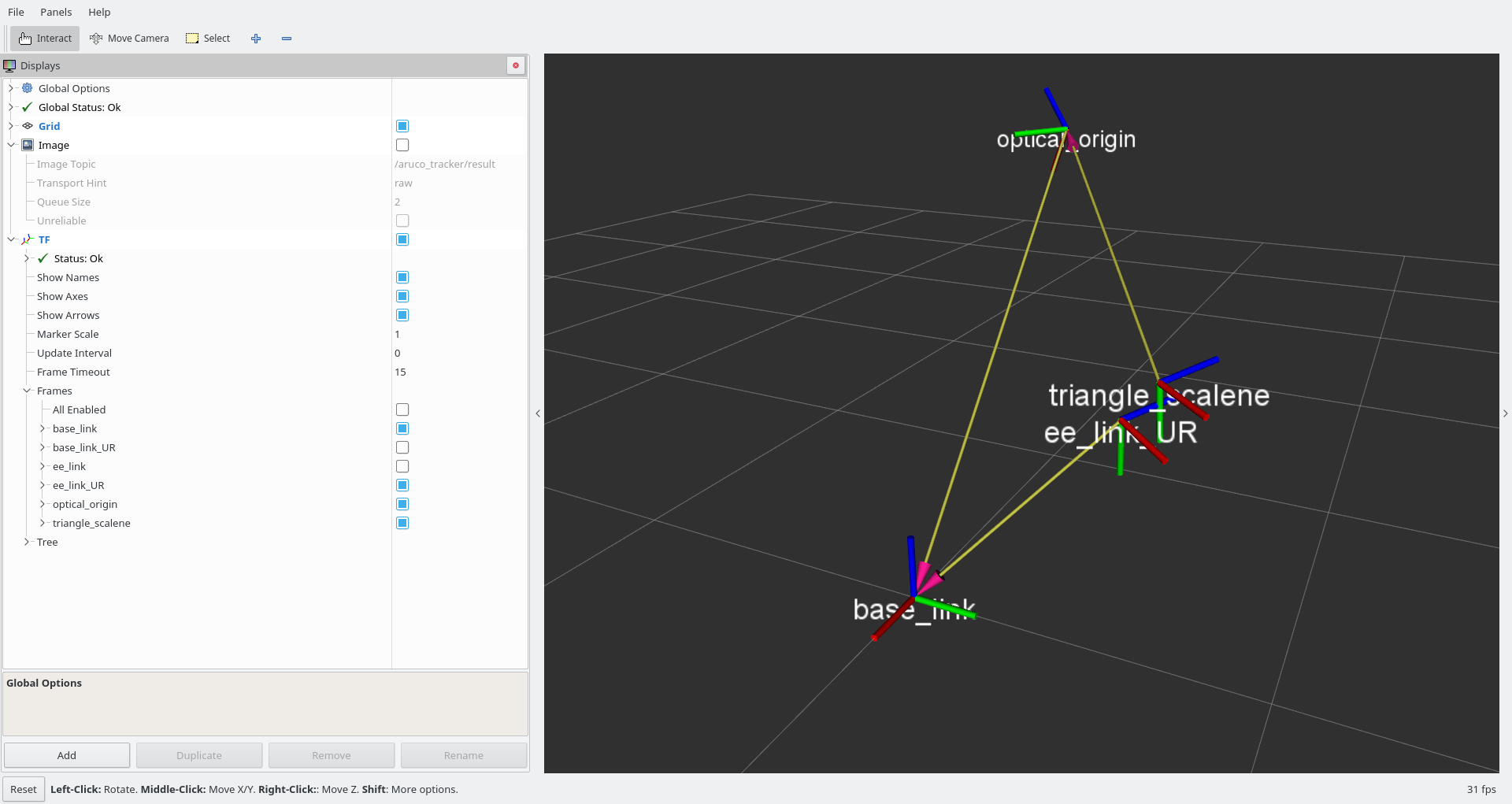

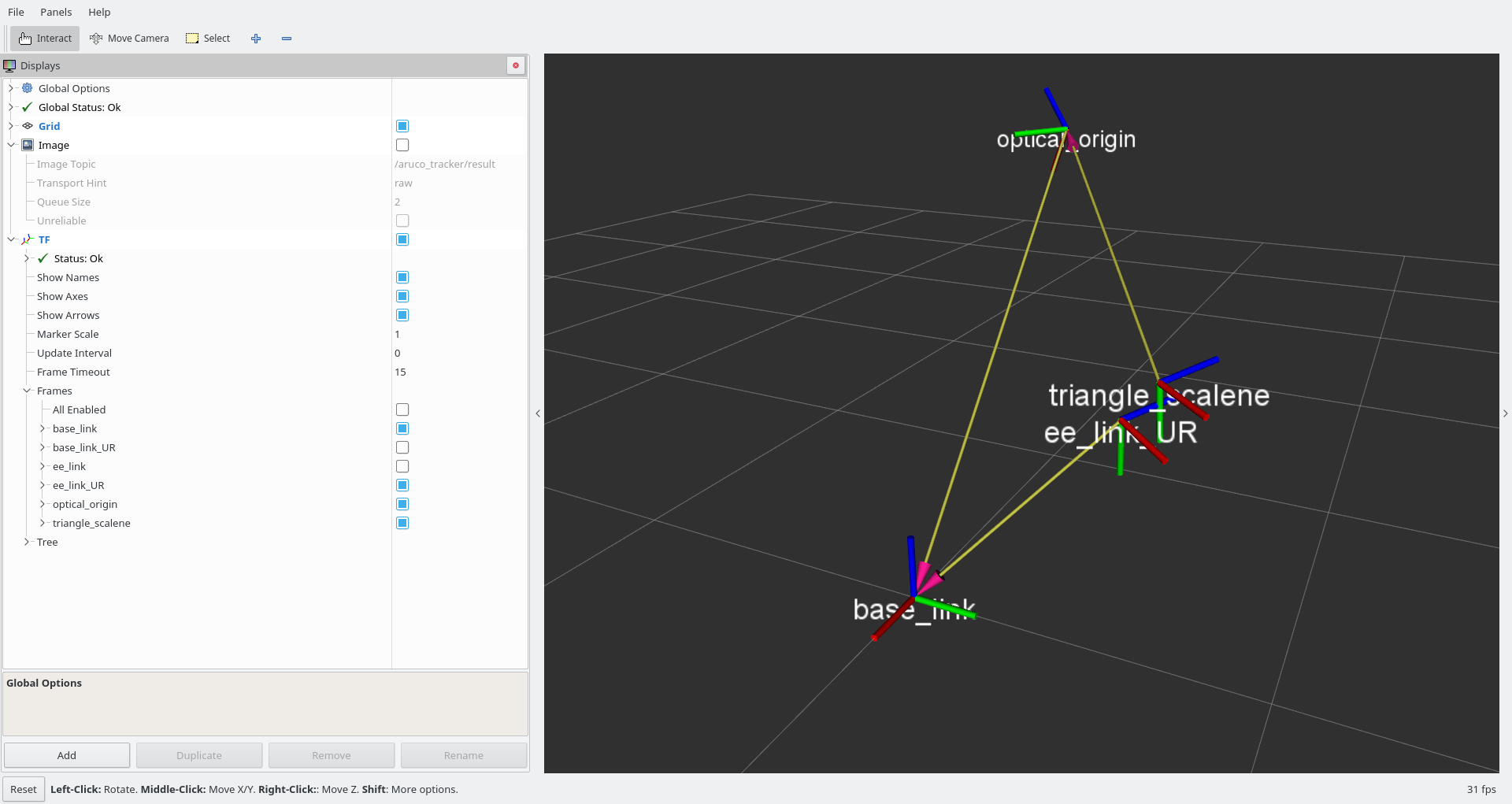

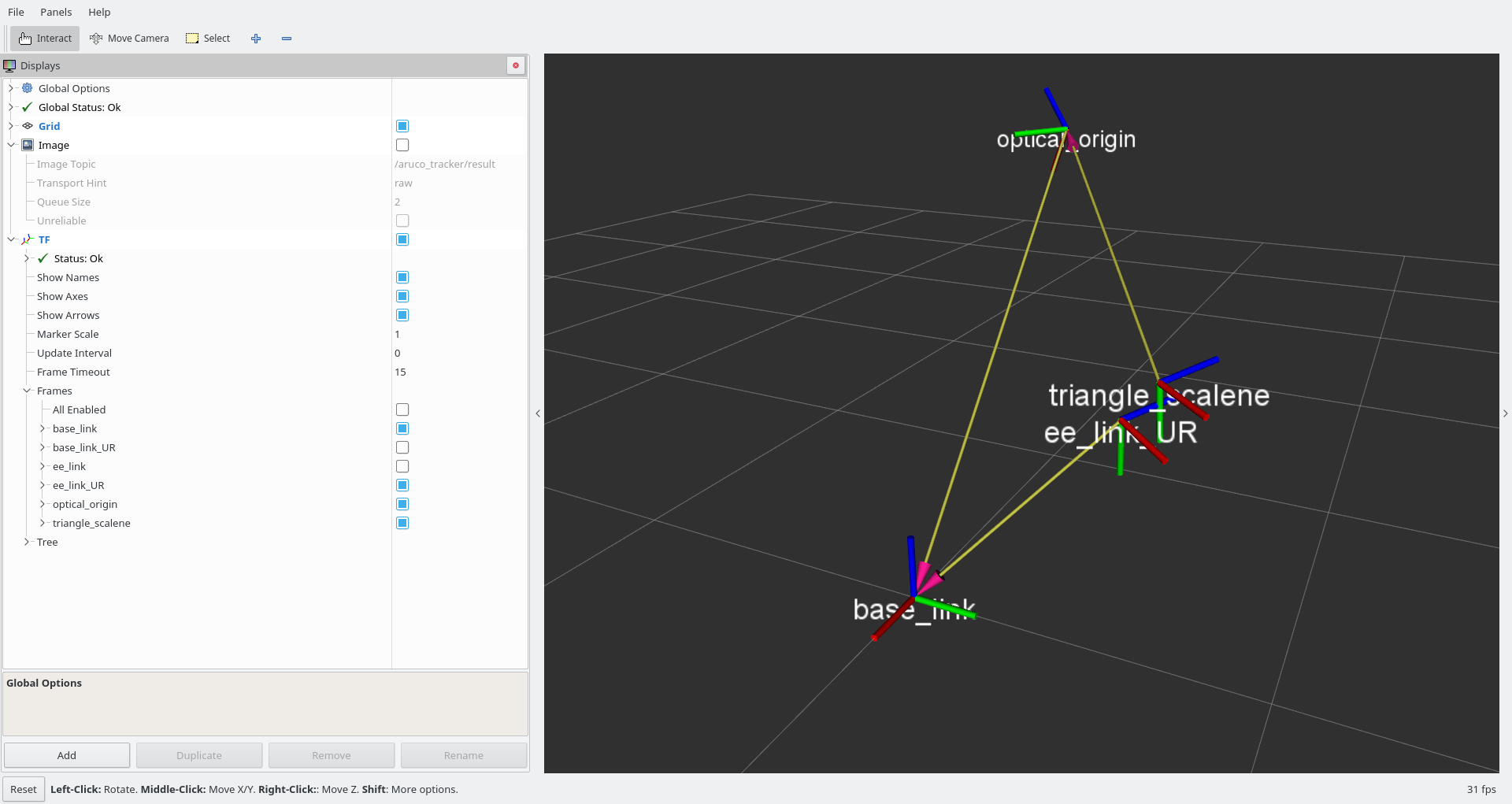

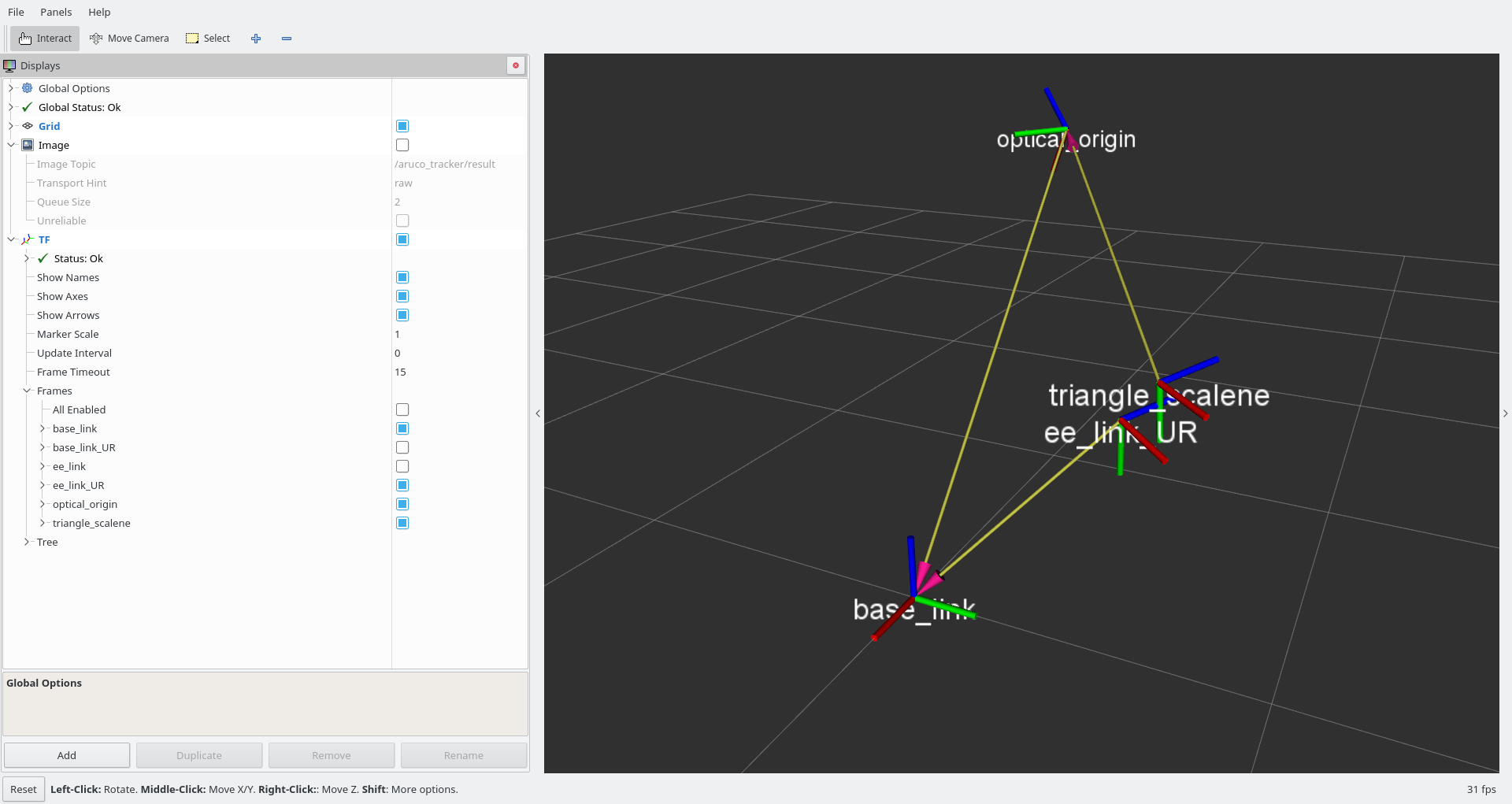

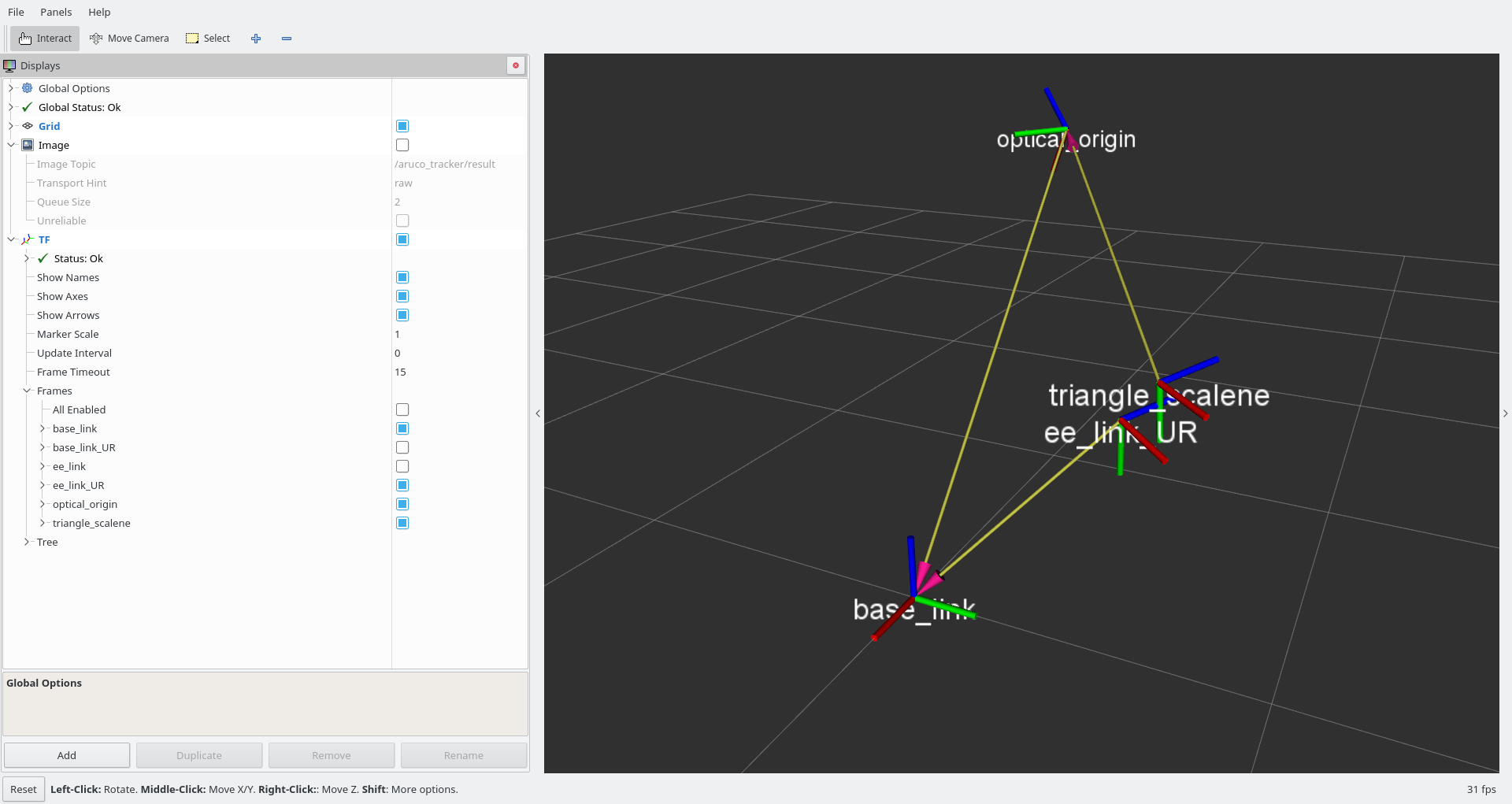

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

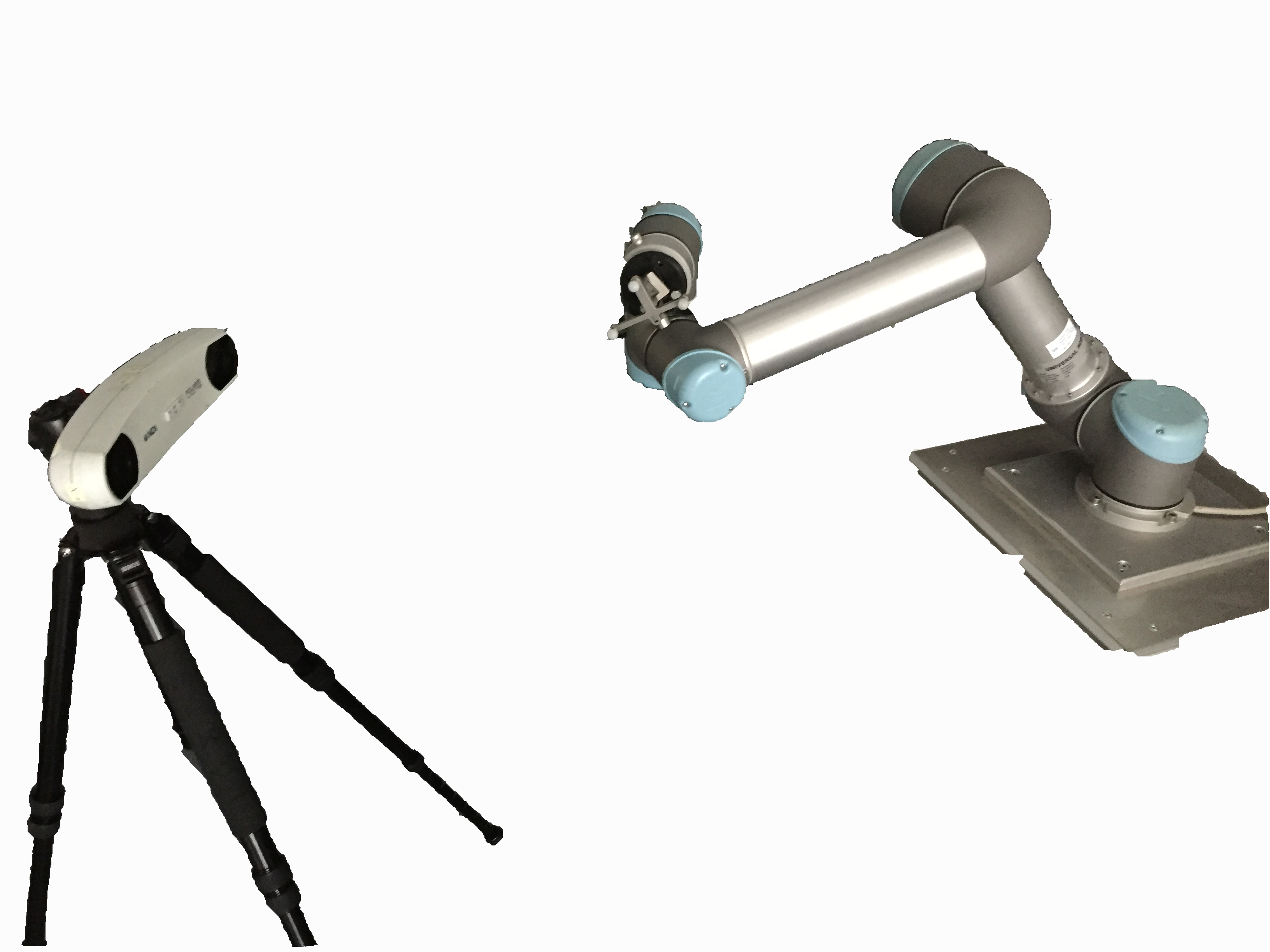

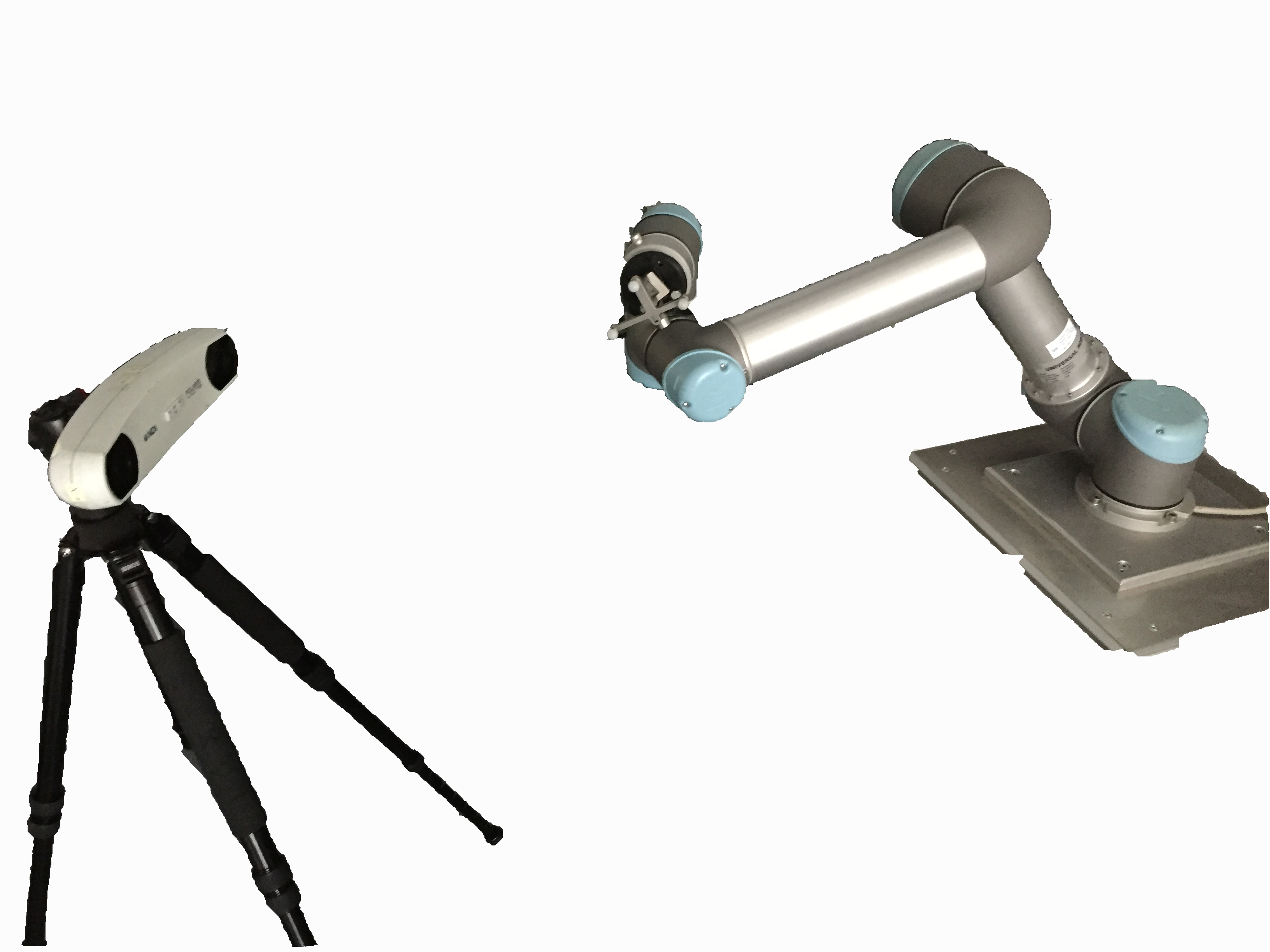

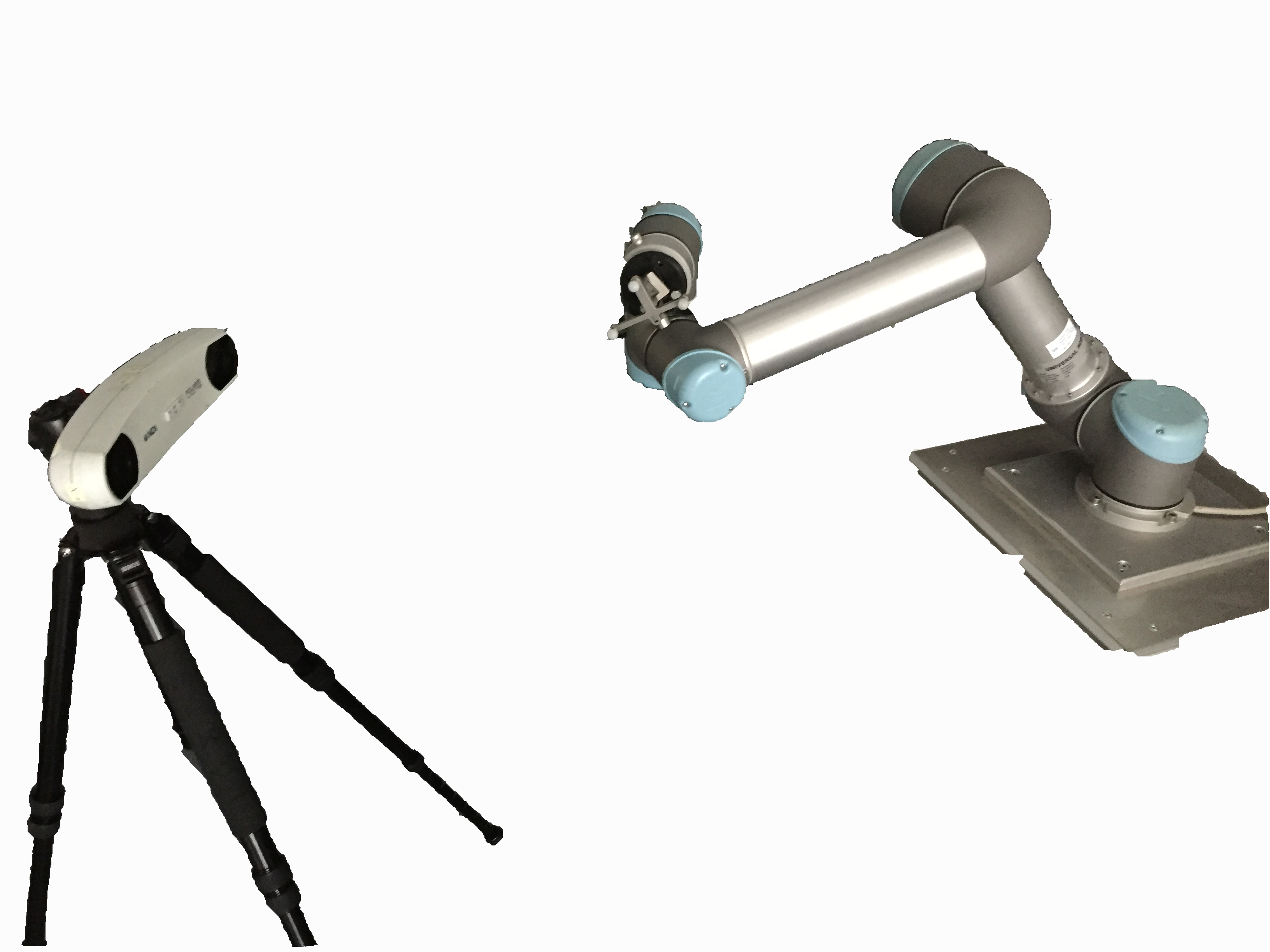

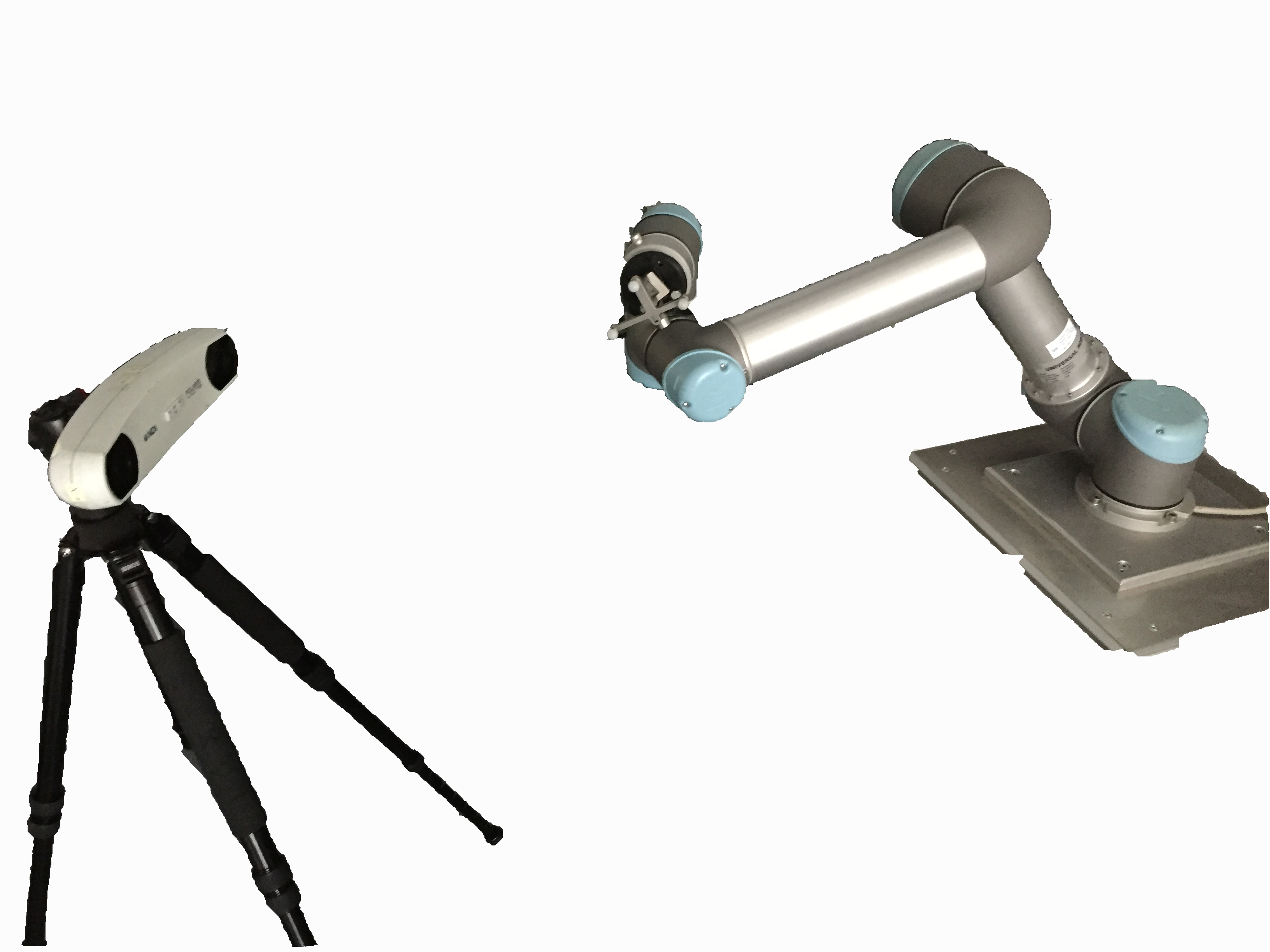

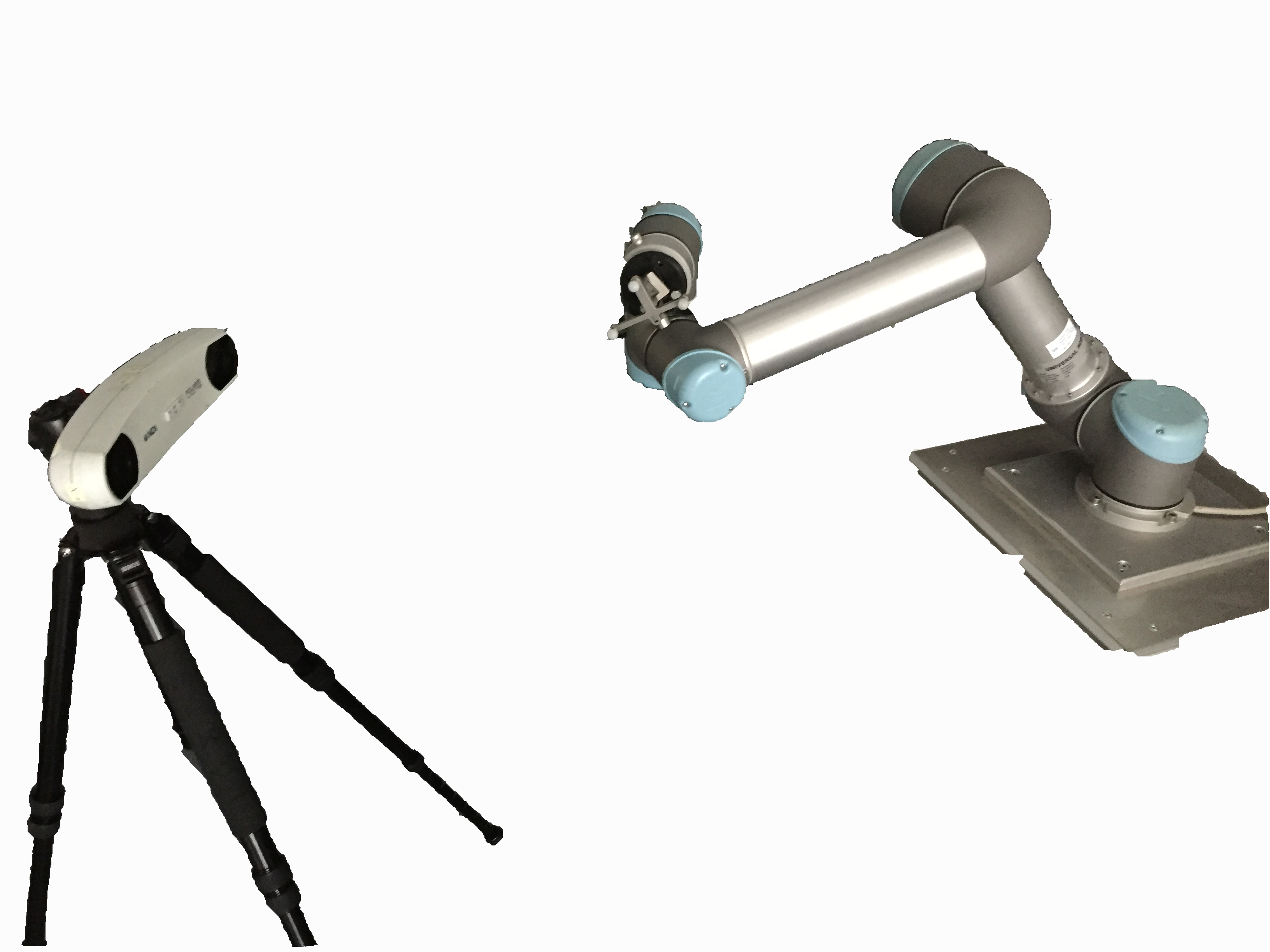

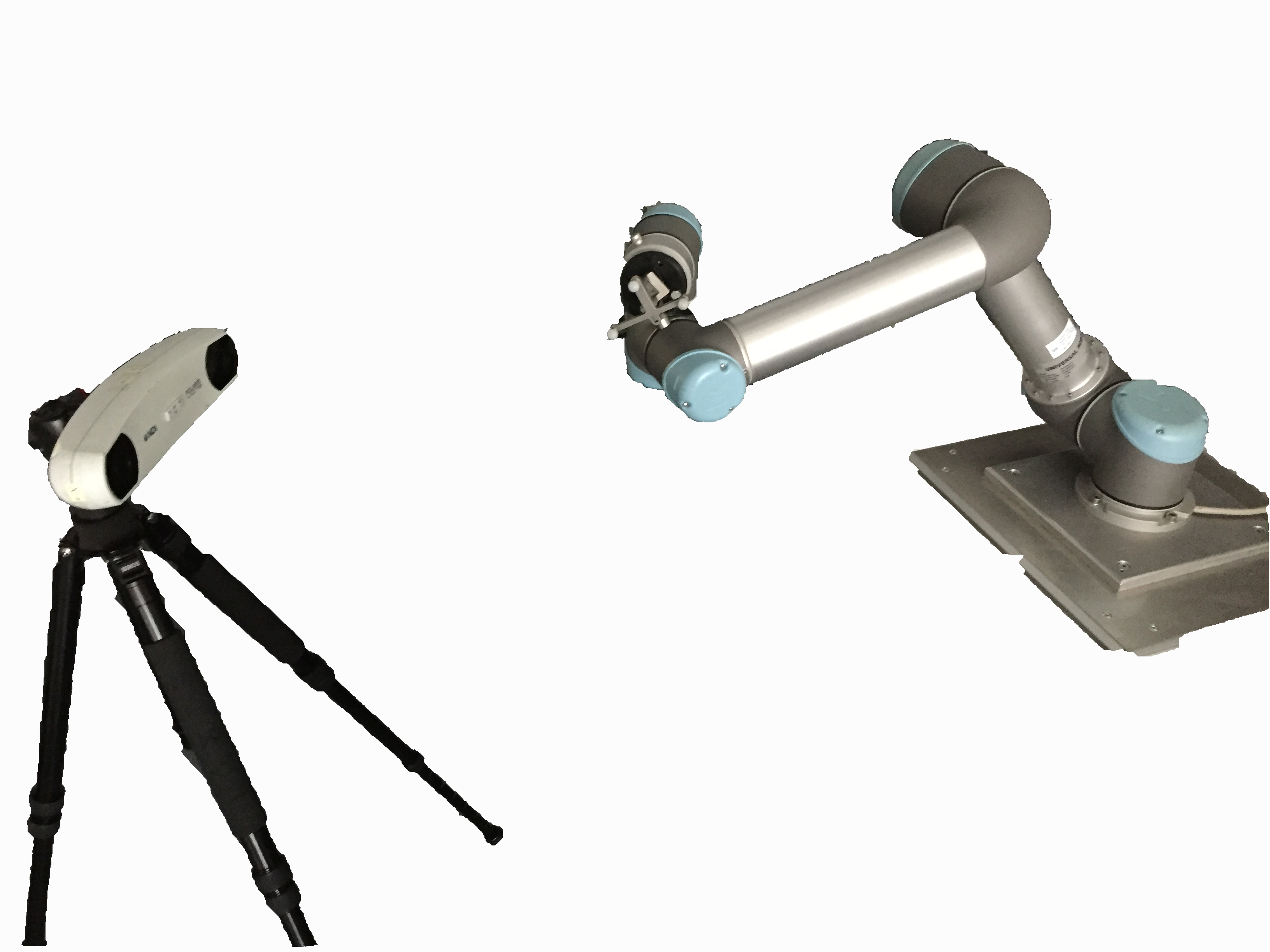

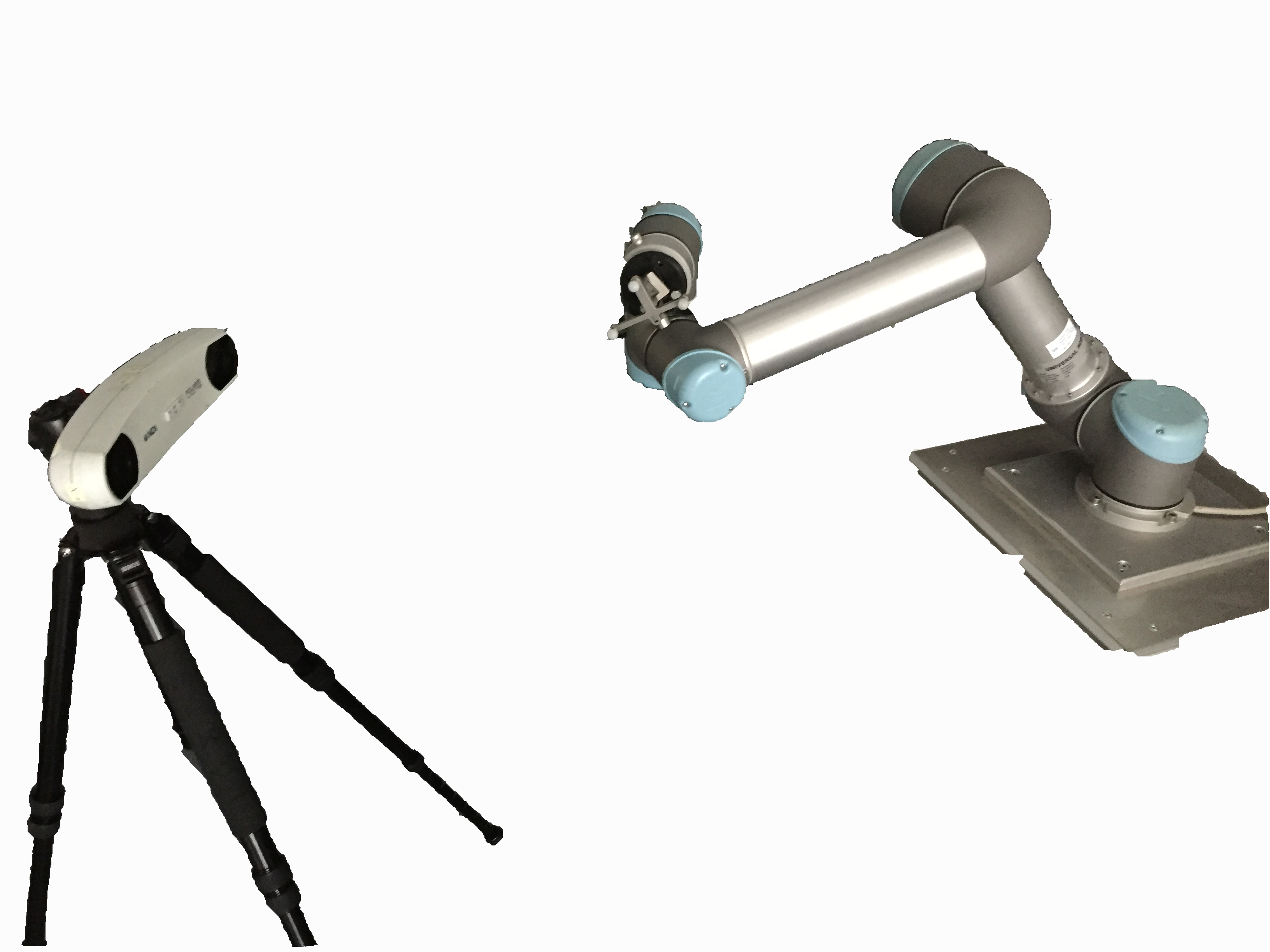

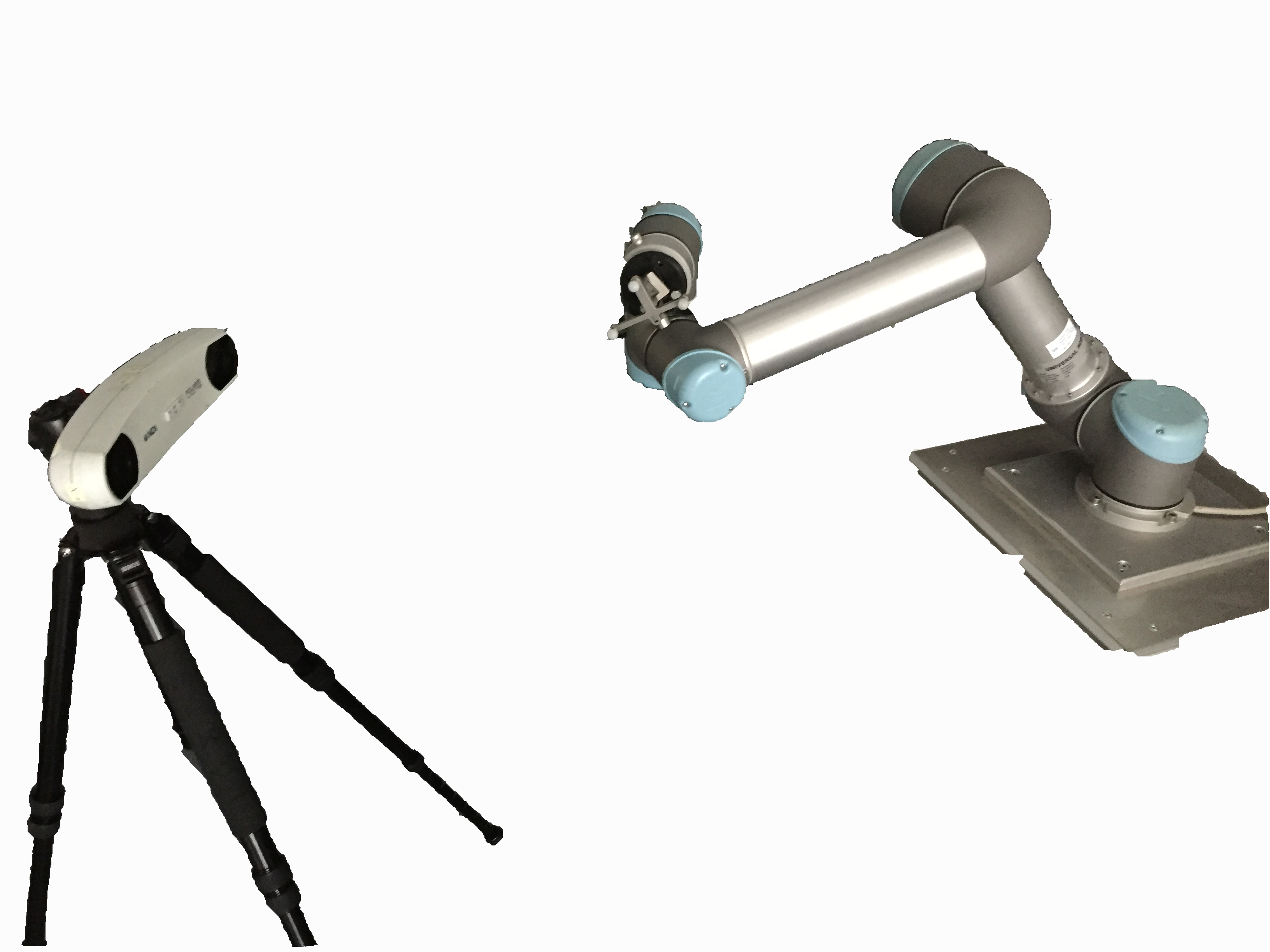

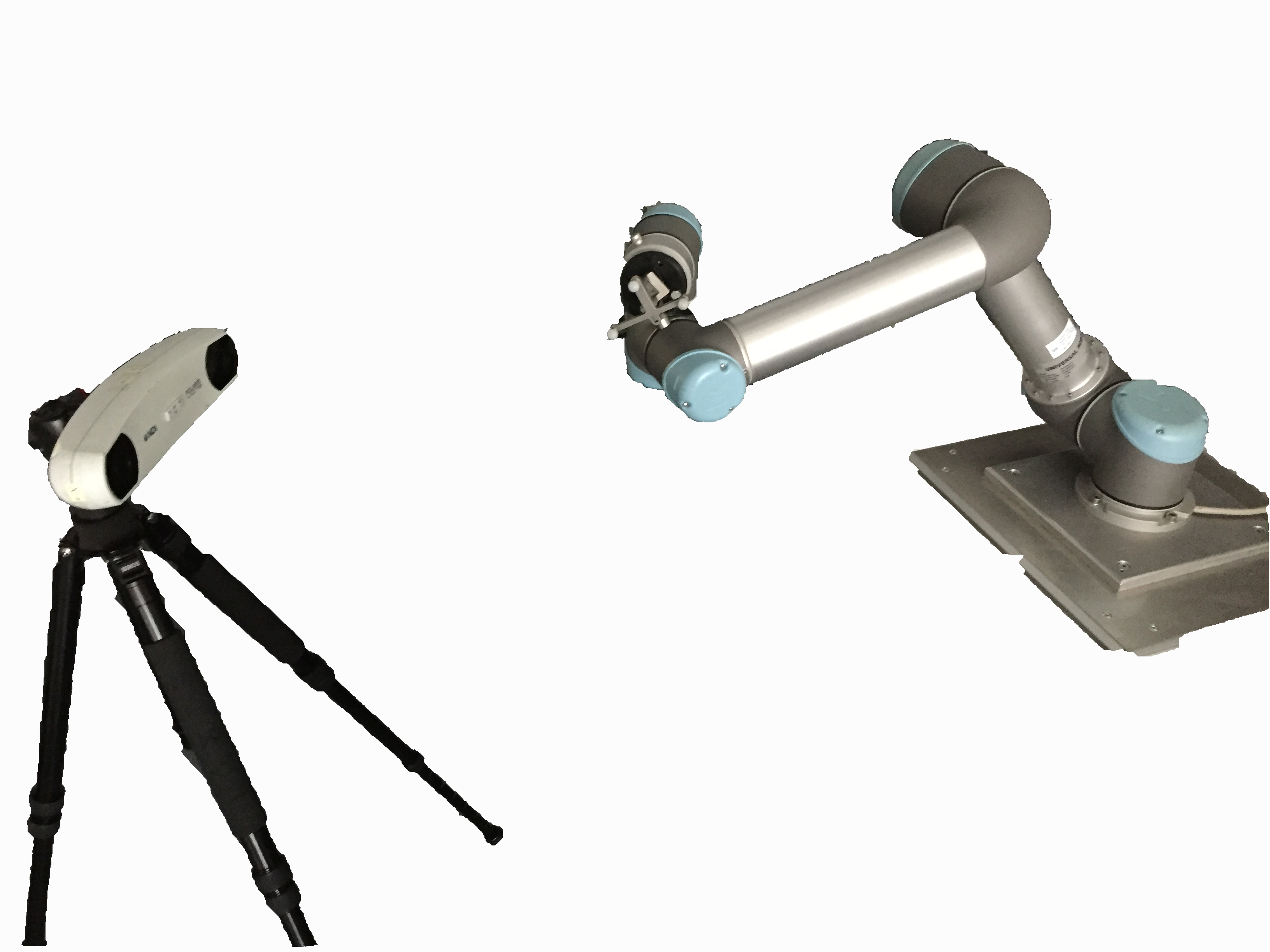

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

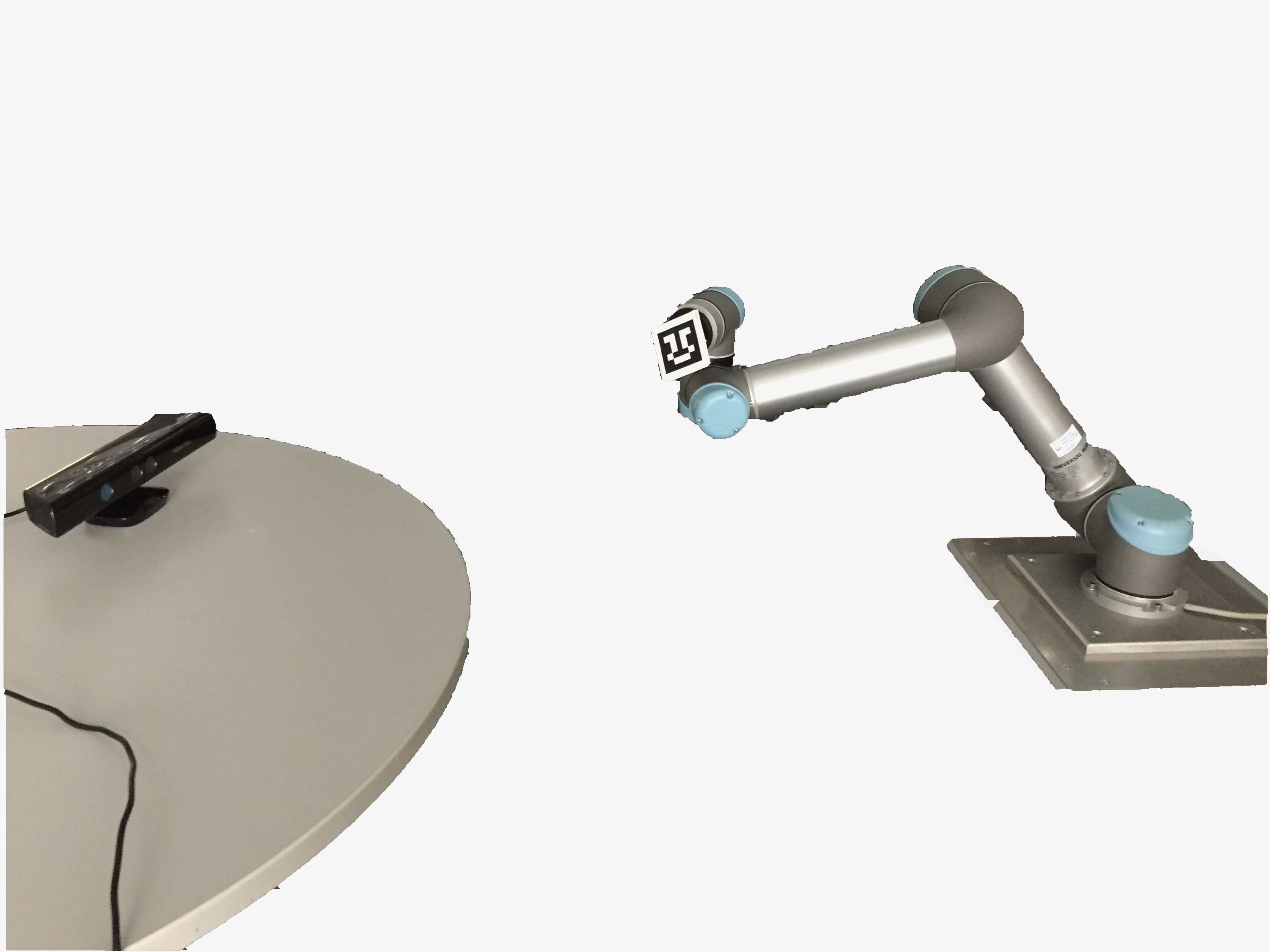

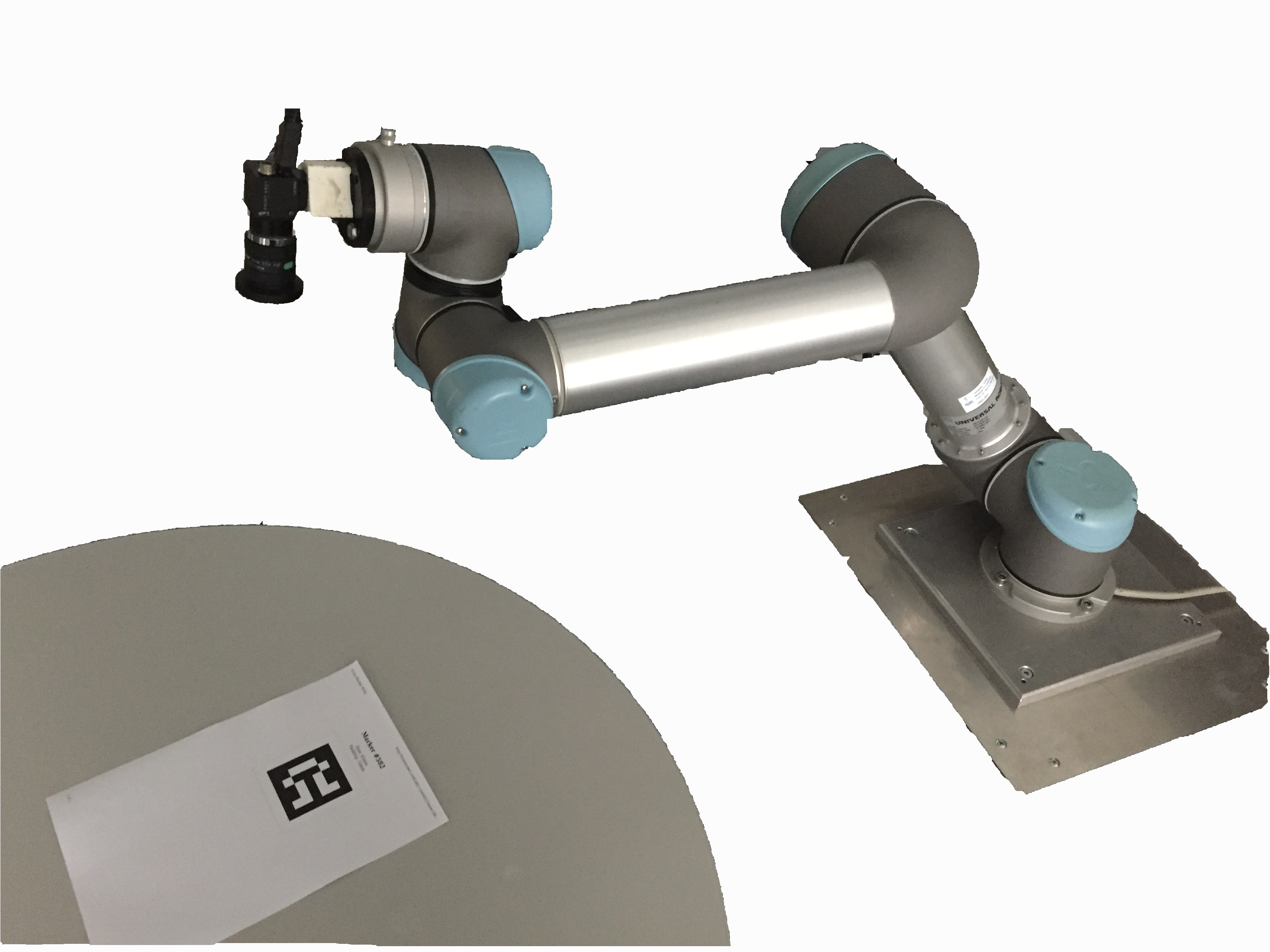

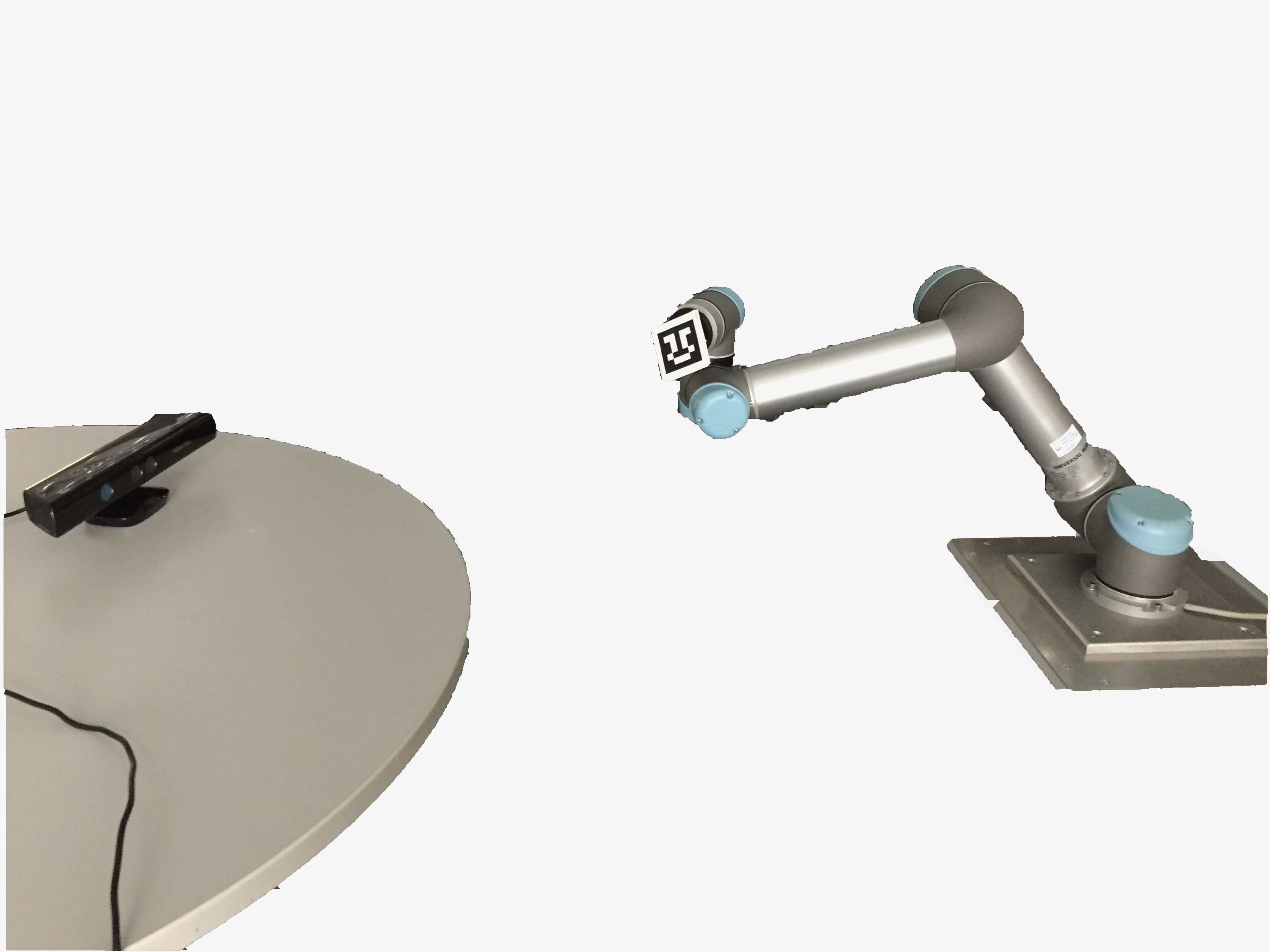

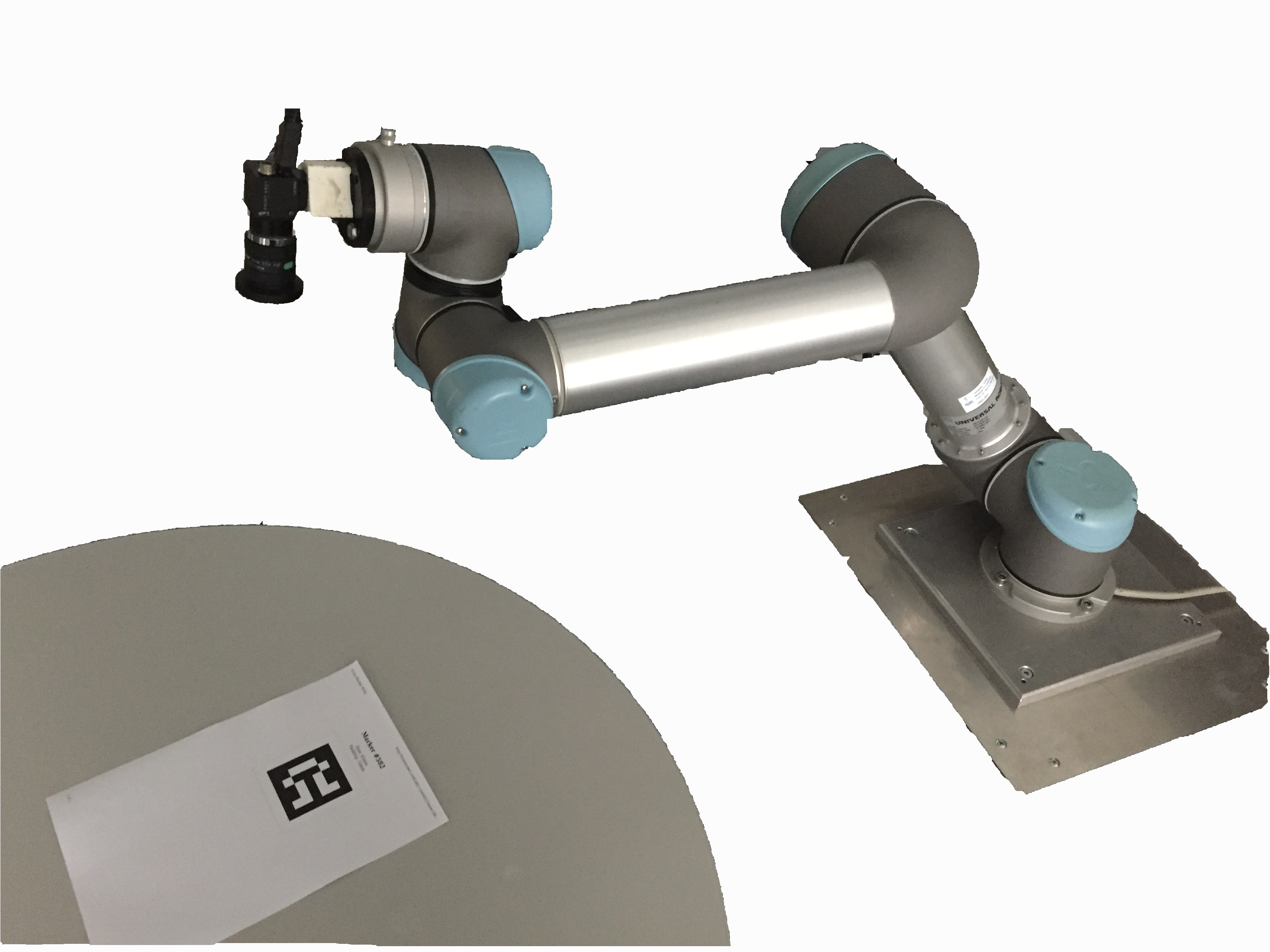

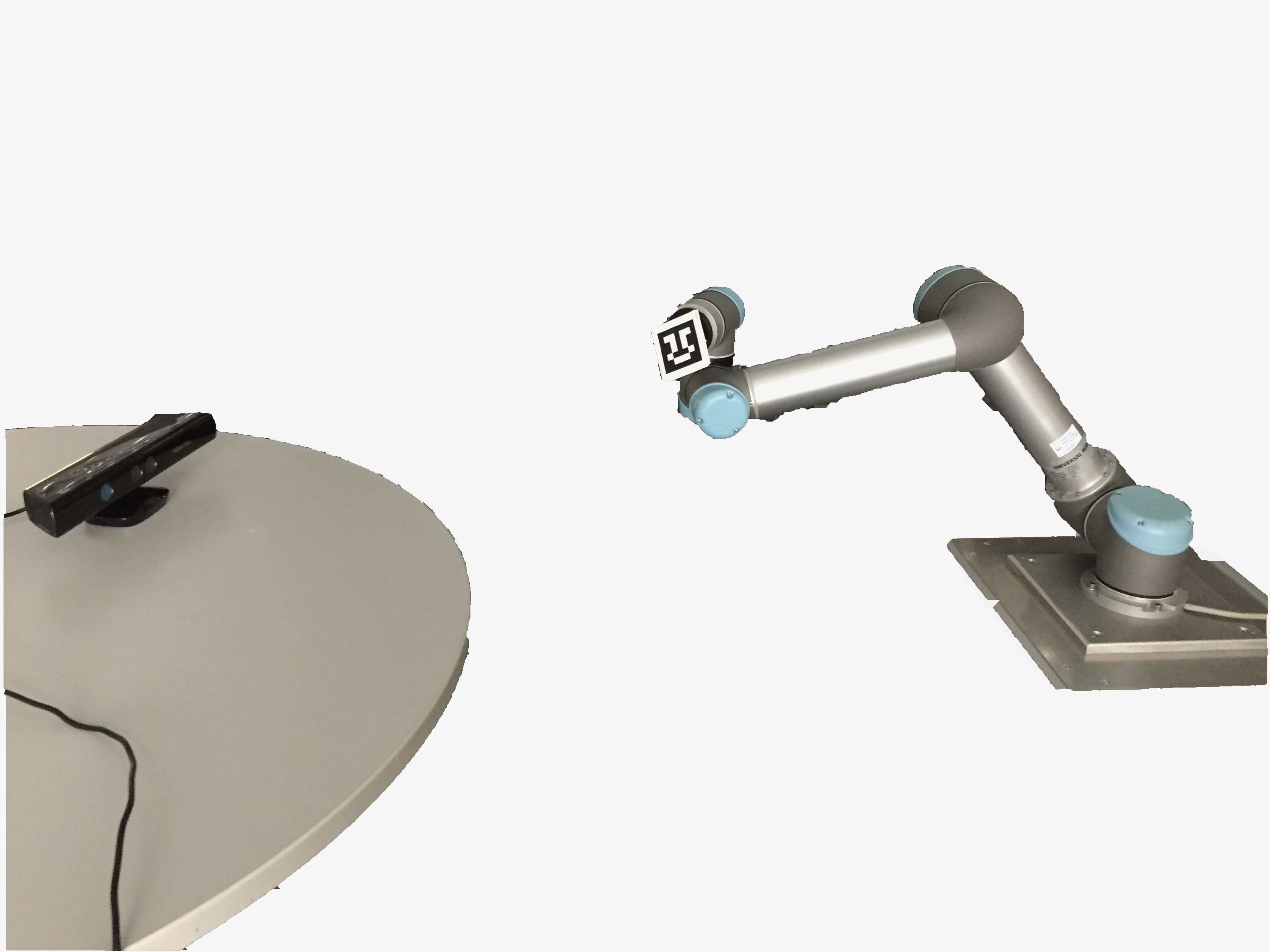

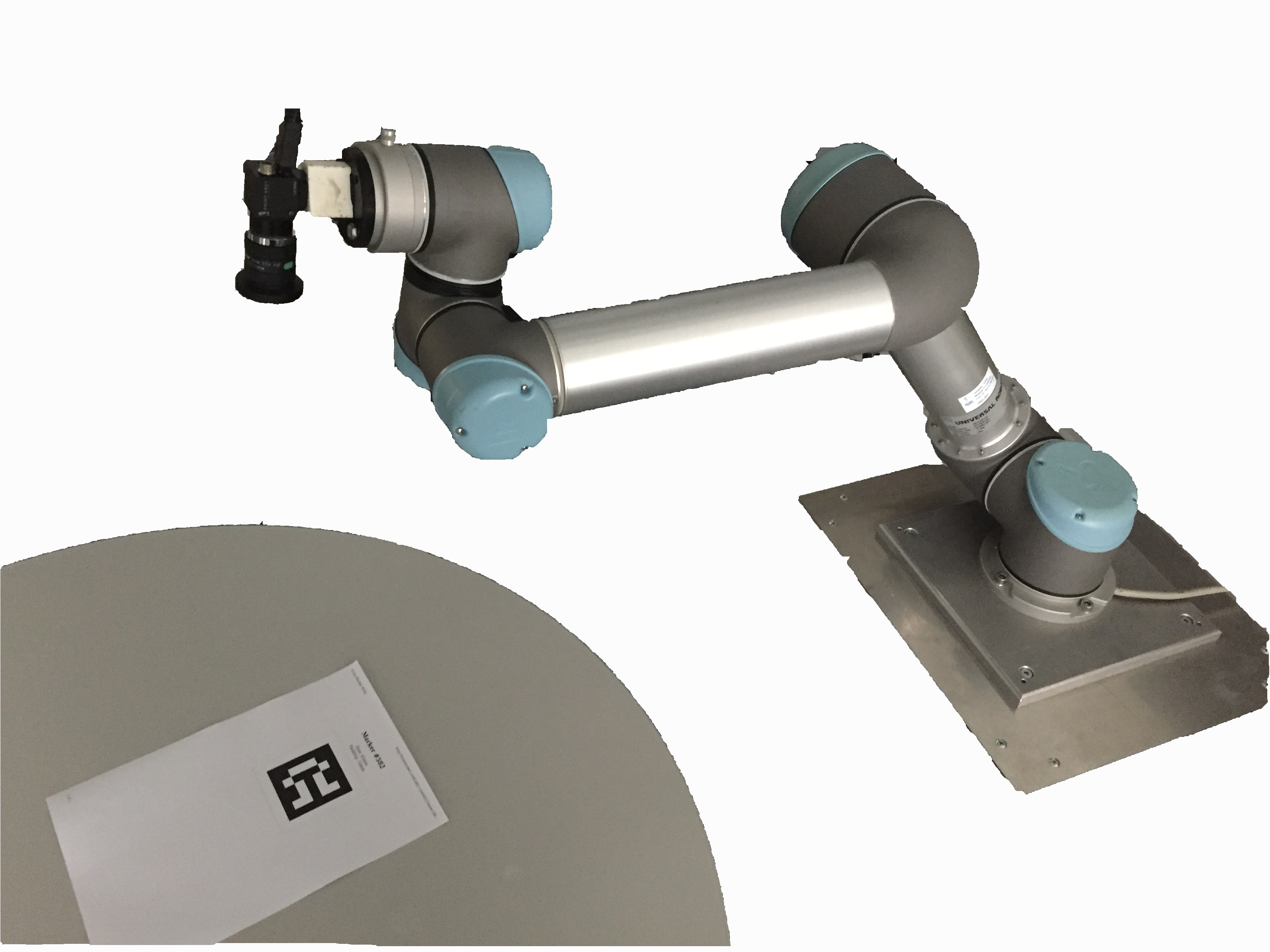

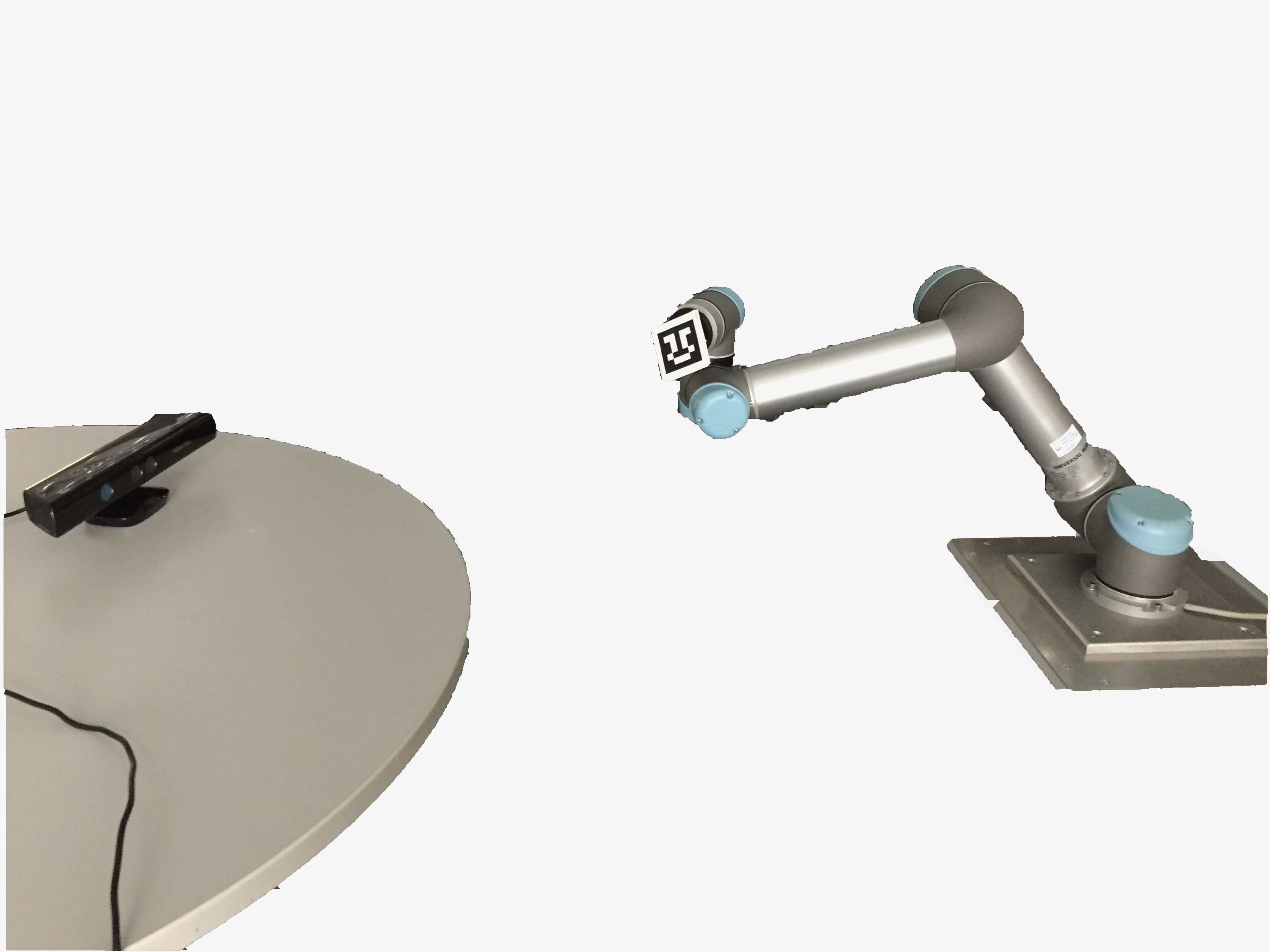

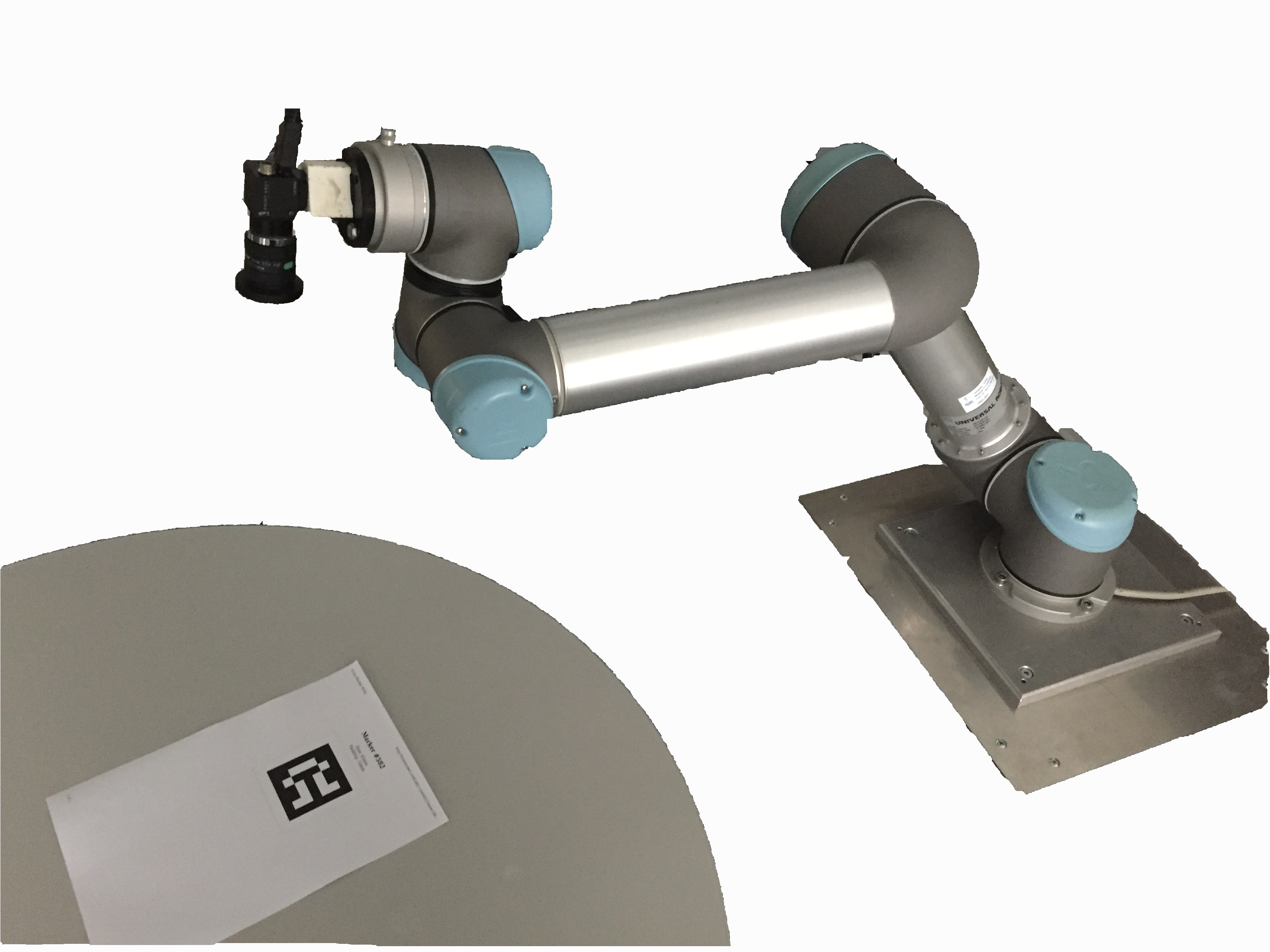

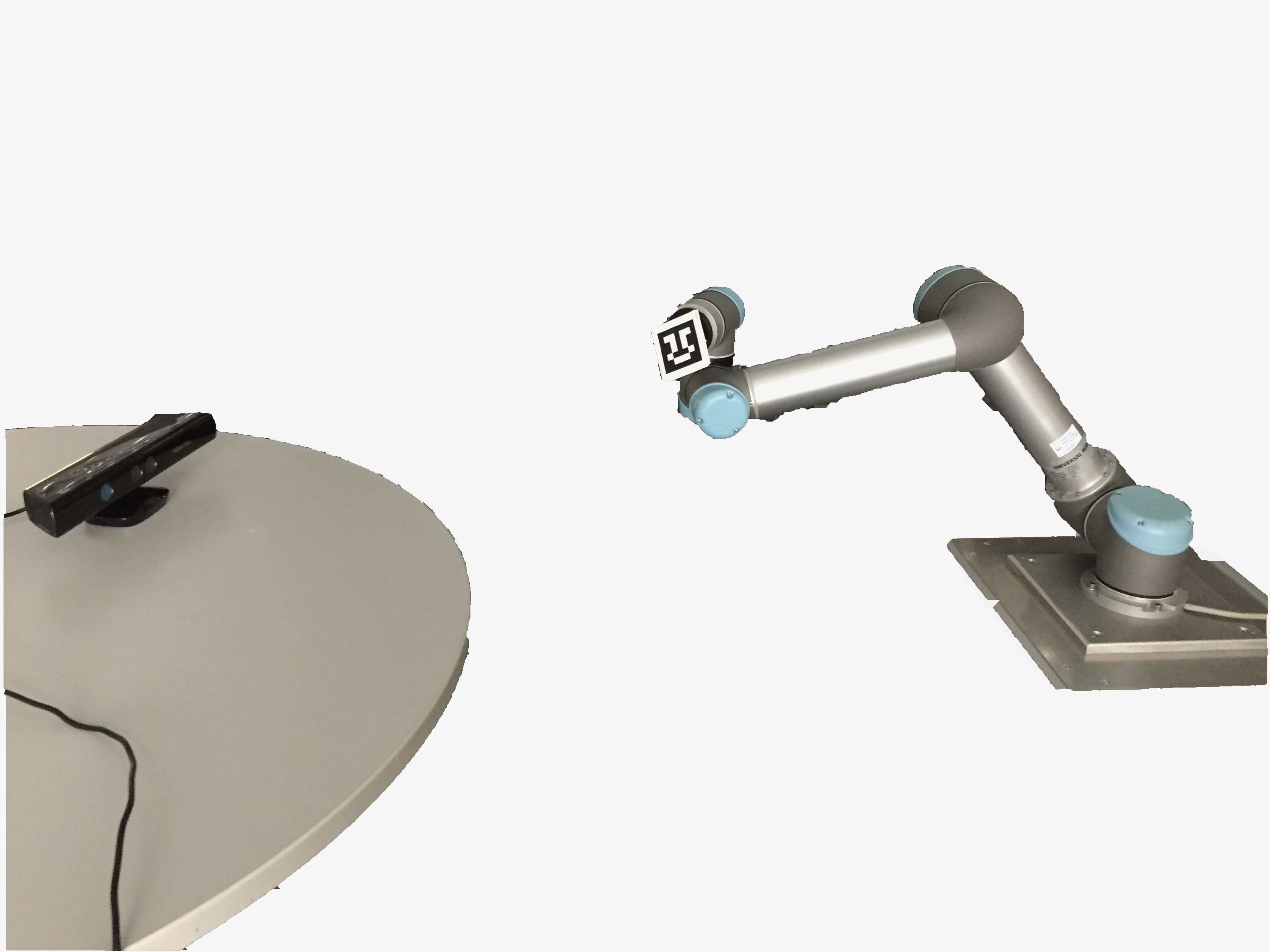

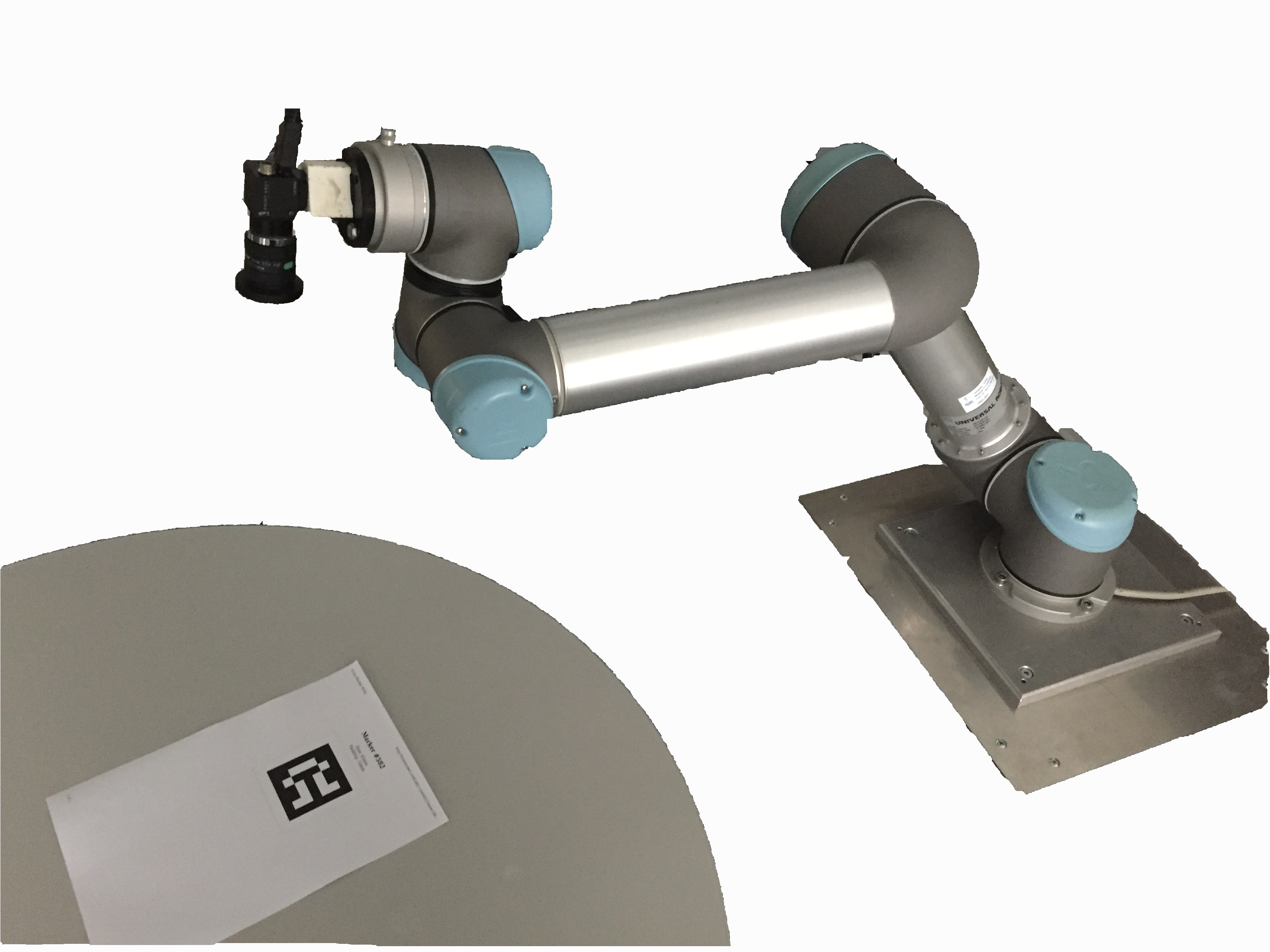

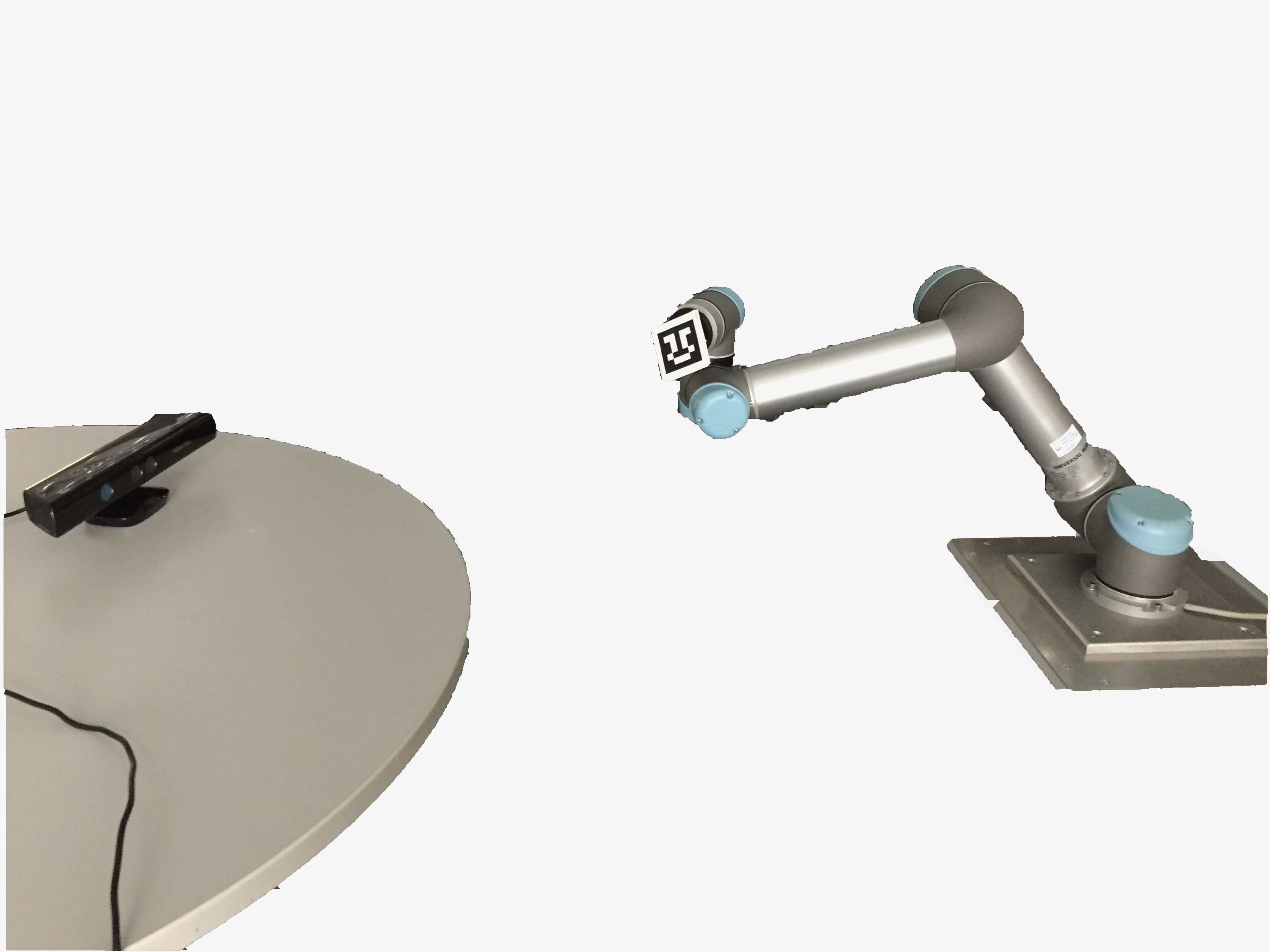

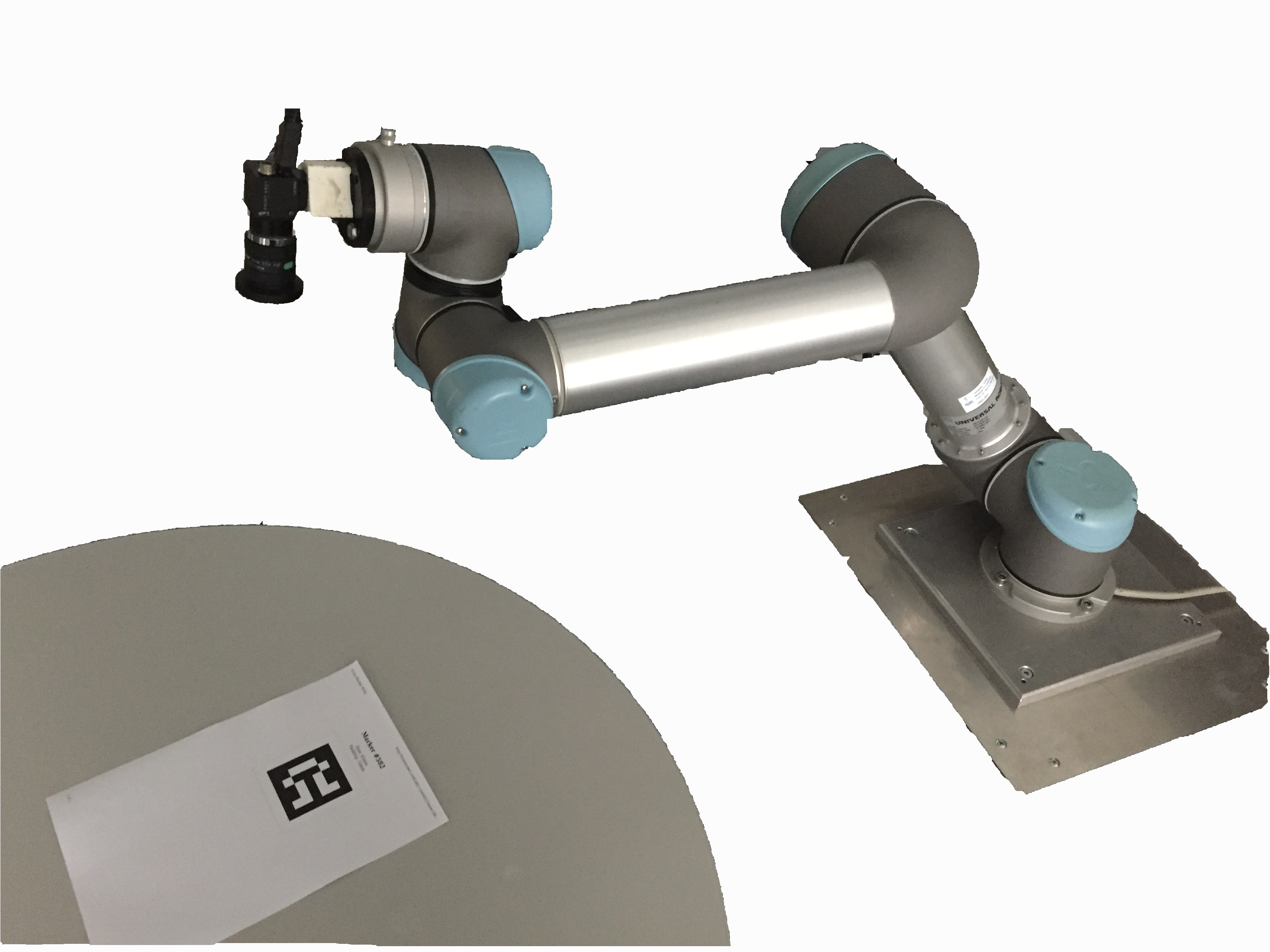

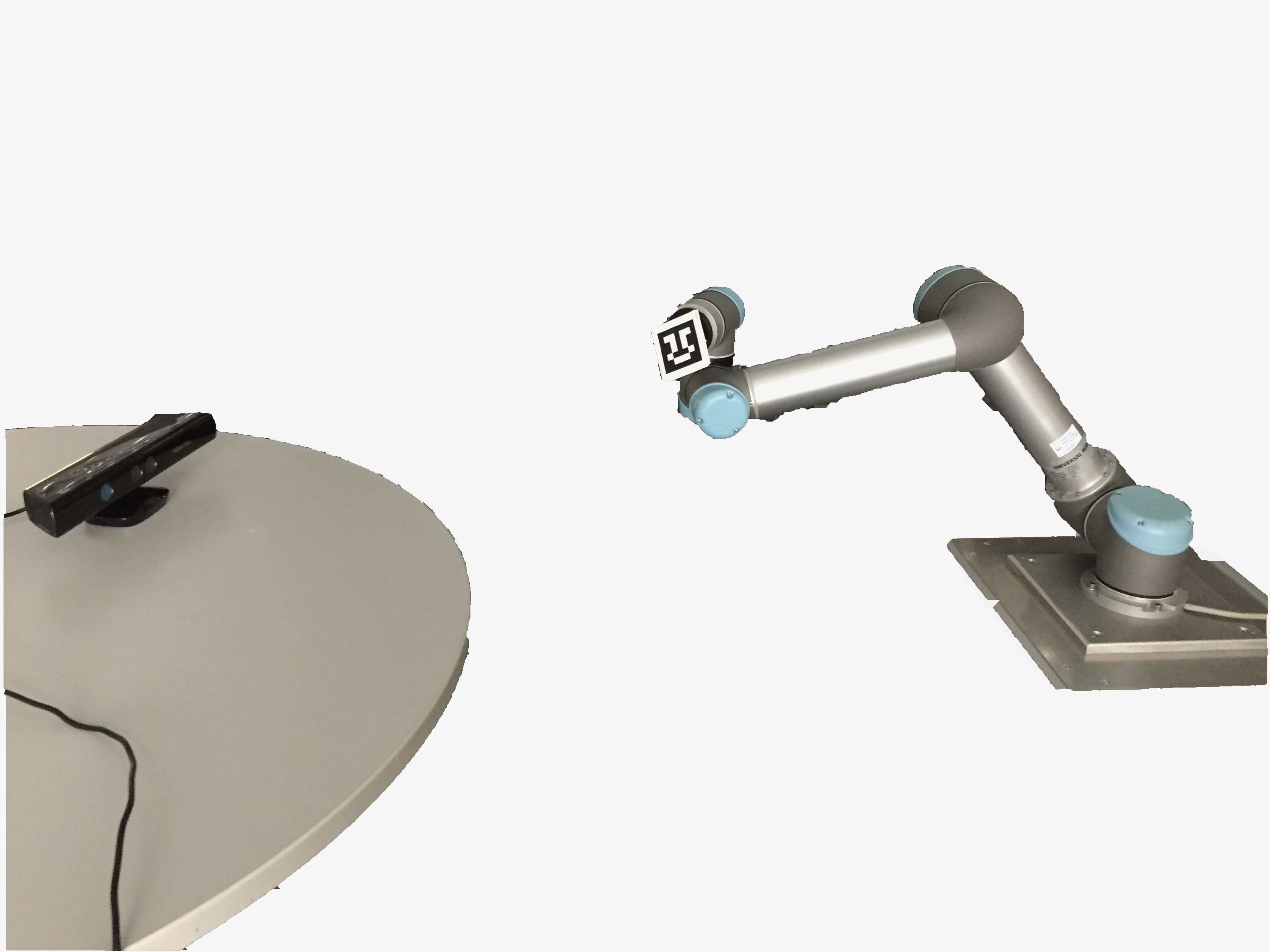

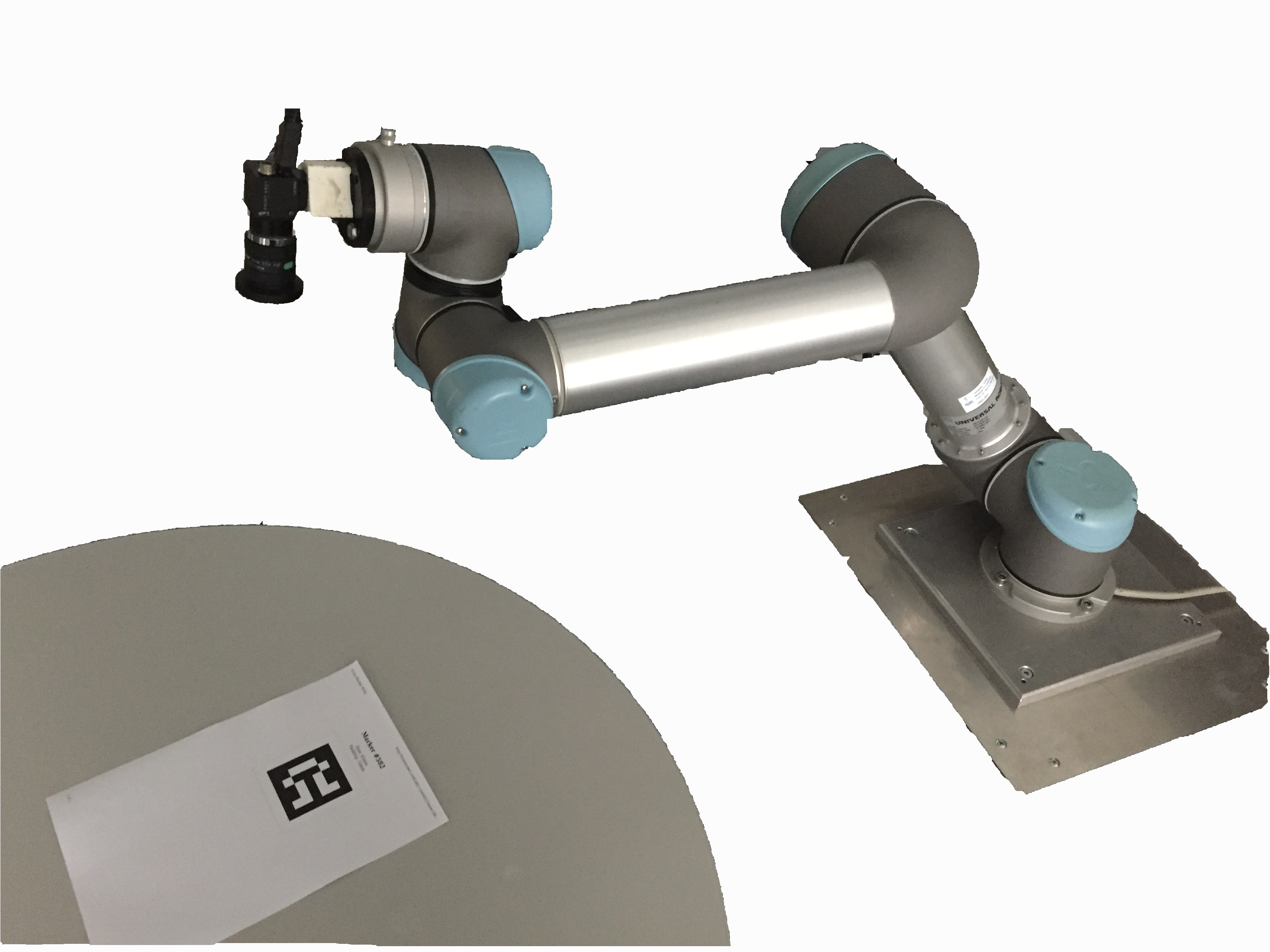

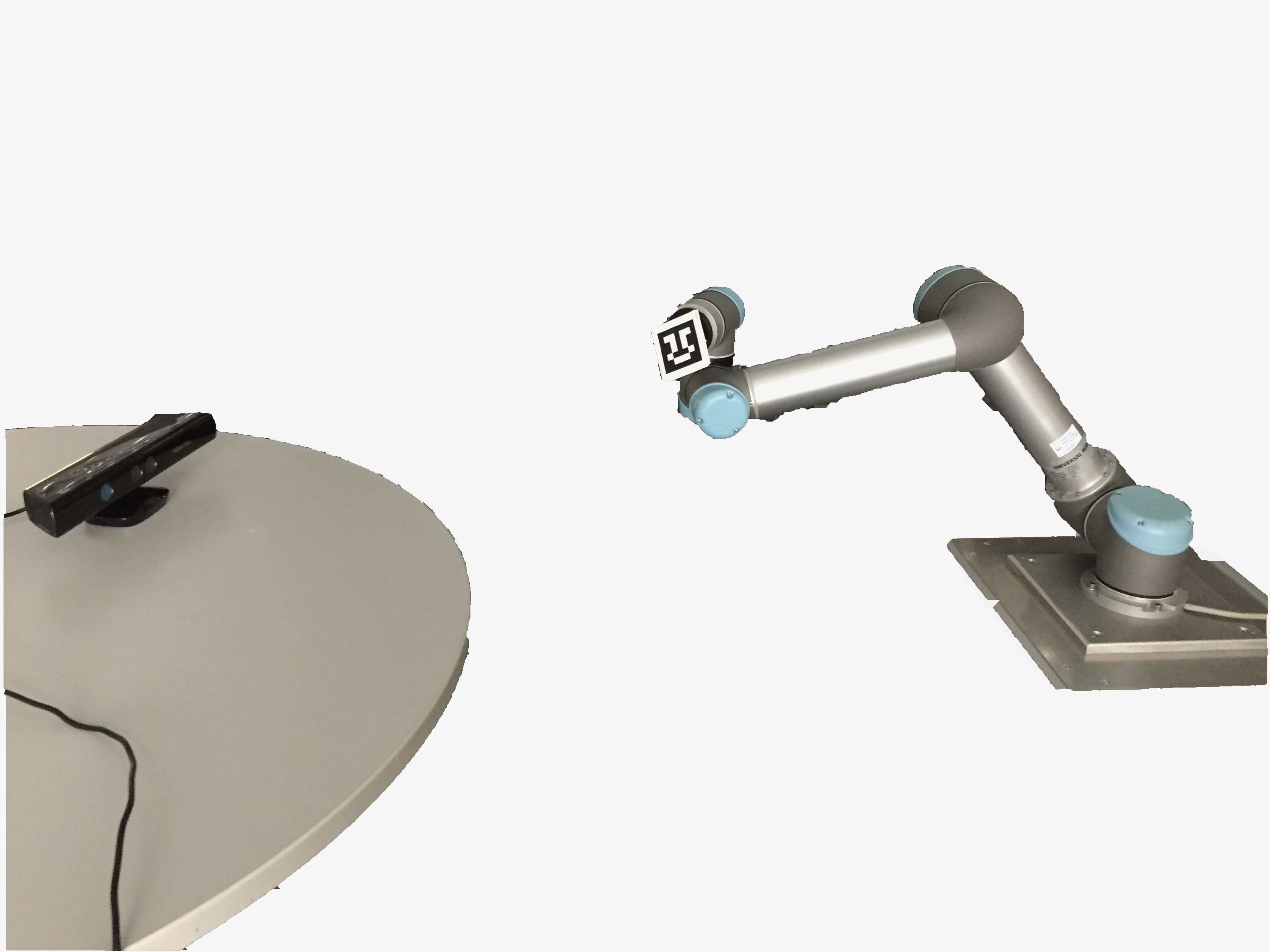

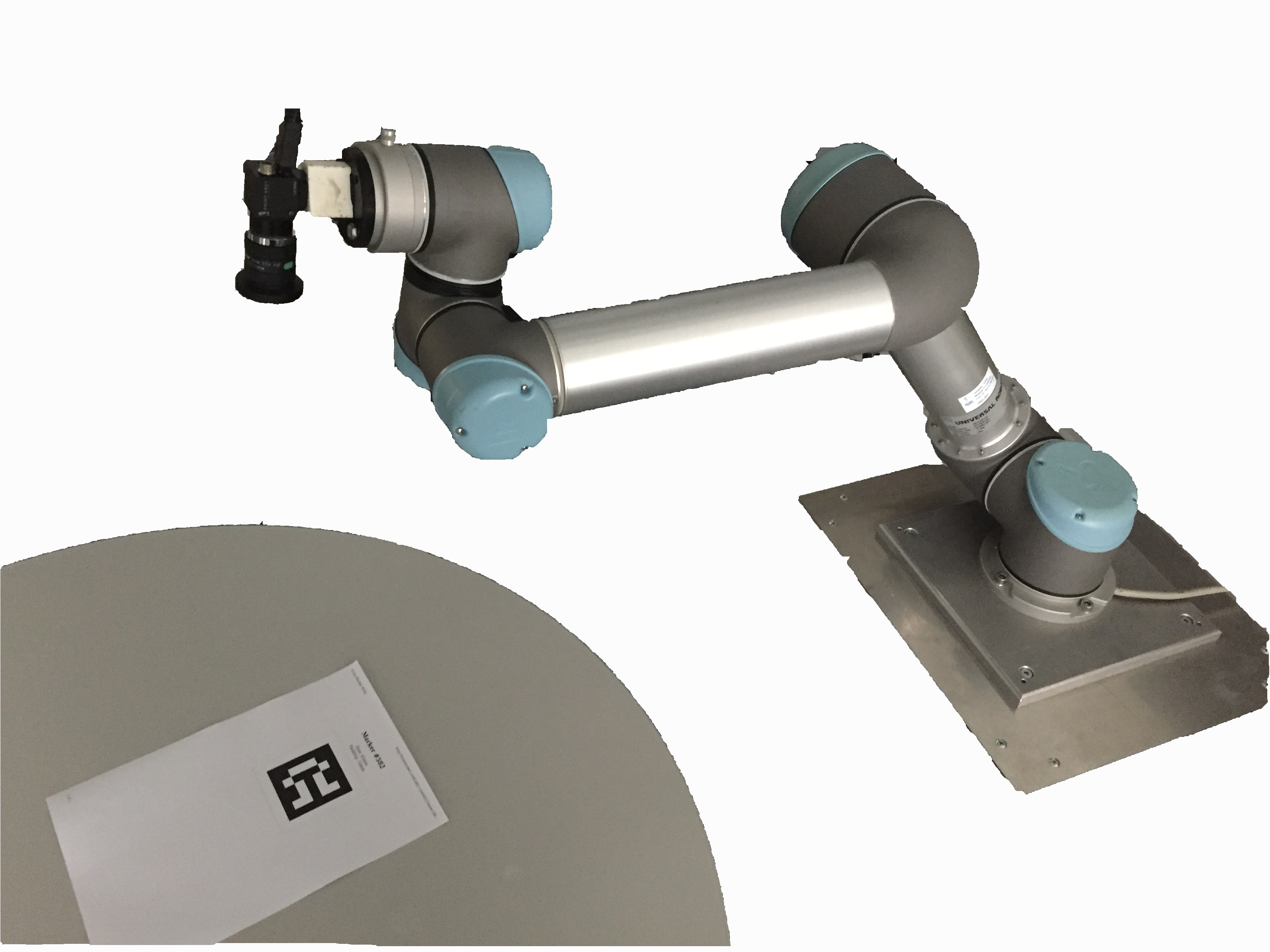

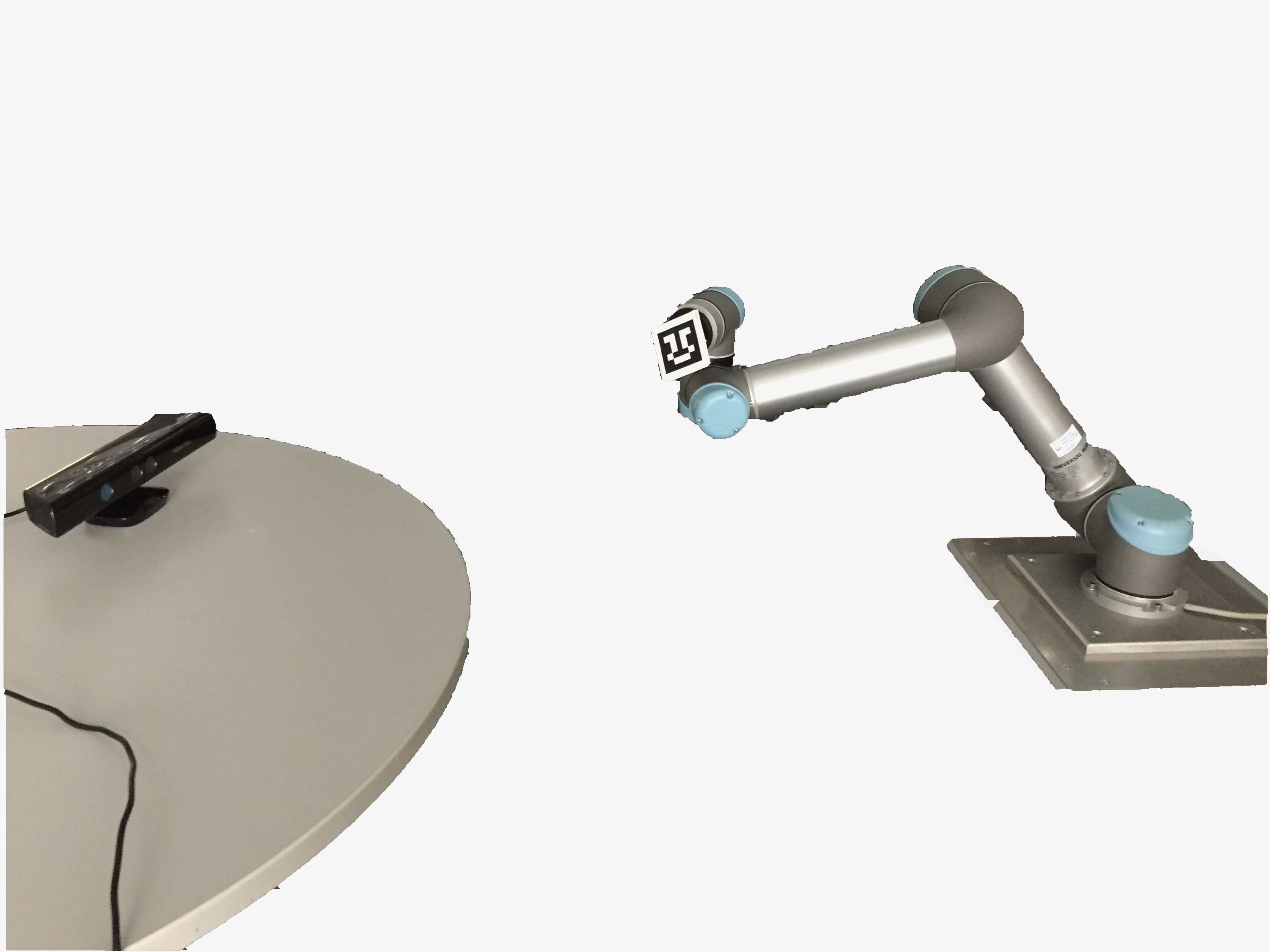

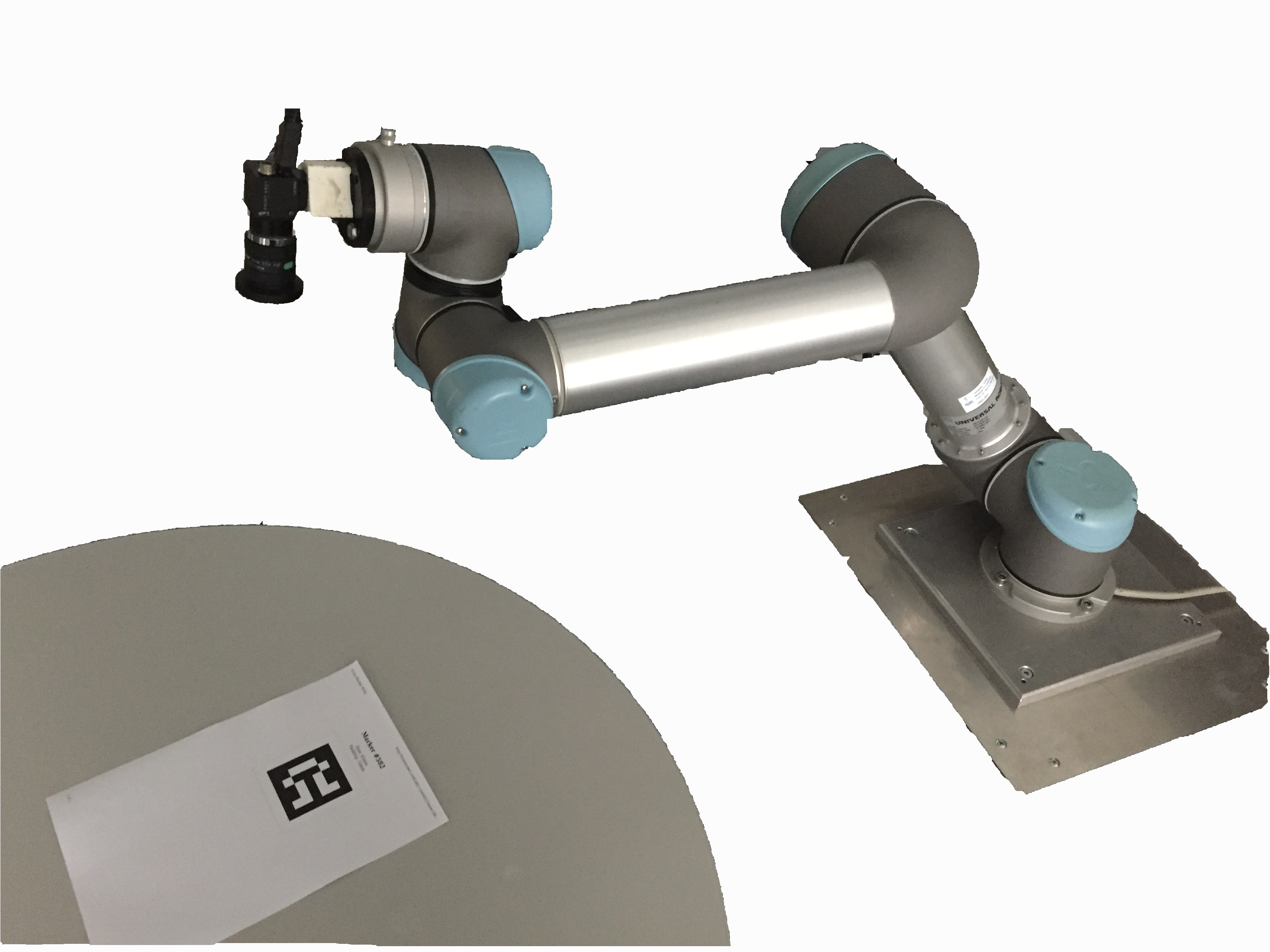

- eye-in-hand: to compute the static transform between the reference frame of a robot’s end-effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base: to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between a Universal Robot and a Kinect through aruco. Eye-in-hand calibration can be used for vision-guided tasks.

The (arguably) best part is that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation, and only return the transformation you are interested in.
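To see why the auxiliary marker drops out, consider the eye-in-hand case written as the classic AX = XB relation, where X is the end-effector-to-camera transform, A is the relative end-effector motion between two samples, and B is the relative camera motion seen through the marker. The sketch below is a plain-Python illustration with made-up poses, not the package's implementation; all names (`transform`, `relative_motions`, and so on) are hypothetical. It simulates the marker observations for two different marker placements and checks that B, and therefore the equation the solver works on, is the same in both.

```python
import math


def transform(axis, angle, translation):
    """4x4 rigid transform: rotation about the 'x' or 'z' axis plus a translation."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "x":
        r = [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    else:
        r = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    return [r[i] + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert(t):
    """Inverse of a rigid transform: rotation R^T, translation -R^T p."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(rt[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [rt[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def max_abs_diff(a, b):
    """Largest absolute entry-wise difference between two 4x4 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(4) for j in range(4))


# Made-up ground truth: X maps end-effector -> camera (the sought calibration).
X = transform("z", 0.3, [0.05, 0.00, 0.10])
# Two made-up robot poses (base -> end-effector), as they would be read from tf.
E1 = transform("x", 0.4, [0.4, 0.1, 0.5])
E2 = transform("z", -0.7, [0.3, -0.2, 0.6])


def relative_motions(Y):
    """Simulate camera->marker observations for a marker at Y (base -> marker)
    and return the relative motions (A, B) between the two samples."""
    # Closed chain per sample: (base->ee) * (ee->camera) * (camera->marker) = Y
    C1 = matmul(invert(matmul(E1, X)), Y)
    C2 = matmul(invert(matmul(E2, X)), Y)
    A = matmul(invert(E2), E1)  # relative end-effector motion
    B = matmul(C2, invert(C1))  # relative camera motion; Y cancels out here
    return A, B


A1, B1 = relative_motions(transform("x", 0.1, [0.6, 0.0, 0.0]))  # marker on the table
A2, B2 = relative_motions(transform("z", 1.2, [0.2, 0.5, 0.1]))  # marker moved elsewhere

# Both differences should be at floating-point noise level.
print("B changes with marker placement by:", max_abs_diff(B1, B2))
print("residual of A X - X B:", max_abs_diff(matmul(A1, X), matmul(X, B1)))
```

The eye-on-base case follows the same pattern with the roles of the frames swapped: the unknown becomes the base-to-camera transform, and the marker's pose on the end-effector is the one that cancels.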

| eye-on-base | eye-in-hand |
|---|---|
| | |

Getting started

- clone this repository into your catkin workspace:

      cd ~/catkin_ws/src  # replace with path to your workspace
      git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

      cd ..  # now we are inside ~/catkin_ws
      rosdep install -iyr --from-paths src

- build

      catkin build

Usage

Two launch files are provided to be included in your own: one computes the calibration and one publishes it. Override the default arguments to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new handeye_calibrate.launch file, which includes the robot’s and tracking system’s launch files, as well as easy_handeye’s calibrate.launch as illustrated below in the next section “Calibration”
- in each of your launch files where you need the result of the calibration, include easy_handeye’s publish.launch as illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Description | Automated, hardware-independent Hand-Eye Calibration |

| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| easy_handeye | 0.4.3 |

| easy_handeye_msgs | 0.4.3 |

| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

This package provides functionality and a GUI to:

-

sample the robot position and tracking system output via

tf, - compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,

- store the result of the calibration,

-

publish the result of the calibration procedure as a

tftransform at each subsequent system startup, - (optional) automatically move a robot around a starting pose via

MoveIt!to acquire the samples.

The intended result is to make it easy and straightforward to perform the calibration, and to keep it up-to-date throughout the system. Two launch files are provided to be run, respectively to perform the calibration and check its result. A further launch file can be integrated into your own launch files, to make use of the result of the calibration in a transparent way: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the

easy_handeye_demo package. This package also serves as an

example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

- eye-in-hand to compute the static transform between the reference frames of a robot’s hand effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between an Universal Robot and a Kinect through aruco. eye-on-hand can be used for vision-guided tasks.

The (arguably) best part is, that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation out, and only return the transformation you are interested in.

| eye-on-base | eye-on-hand |

|---|---|

|

|

Getting started

- clone this repository into your catkin workspace:

cd ~/catkin_ws/src # replace with path to your workspace

git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

cd .. # now we are inside ~/catkin_ws

rosdep install -iyr --from-paths src

- build

catkin build

Usage

Two launch files, one for computing and one for publishing the calibration respectively, are provided to be included in your own. The default arguments should be overridden to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new

handeye_calibrate.launchfile, which includes the robot’s and tracking system’s launch files, as well aseasy_handeye’scalibrate.launchas illustrated below in the next section “Calibration” - in each of your launch files where you need the result of the calibration, include

easy_handeye’spublish.launchas illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Description | Automated, hardware-independent Hand-Eye Calibration |

| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| easy_handeye | 0.4.3 |

| easy_handeye_msgs | 0.4.3 |

| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

This package provides functionality and a GUI to:

-

sample the robot position and tracking system output via

tf, - compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,

- store the result of the calibration,

-

publish the result of the calibration procedure as a

tftransform at each subsequent system startup, - (optional) automatically move a robot around a starting pose via

MoveIt!to acquire the samples.

The intended result is to make it easy and straightforward to perform the calibration, and to keep it up-to-date throughout the system. Two launch files are provided to be run, respectively to perform the calibration and check its result. A further launch file can be integrated into your own launch files, to make use of the result of the calibration in a transparent way: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the

easy_handeye_demo package. This package also serves as an

example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

- eye-in-hand to compute the static transform between the reference frames of a robot’s hand effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between an Universal Robot and a Kinect through aruco. eye-on-hand can be used for vision-guided tasks.

The (arguably) best part is, that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation out, and only return the transformation you are interested in.

| eye-on-base | eye-on-hand |

|---|---|

|

|

Getting started

- clone this repository into your catkin workspace:

cd ~/catkin_ws/src # replace with path to your workspace

git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

cd .. # now we are inside ~/catkin_ws

rosdep install -iyr --from-paths src

- build

catkin build

Usage

Two launch files, one for computing and one for publishing the calibration respectively, are provided to be included in your own. The default arguments should be overridden to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new

handeye_calibrate.launchfile, which includes the robot’s and tracking system’s launch files, as well aseasy_handeye’scalibrate.launchas illustrated below in the next section “Calibration” - in each of your launch files where you need the result of the calibration, include

easy_handeye’spublish.launchas illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Description | Automated, hardware-independent Hand-Eye Calibration |

| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| easy_handeye | 0.4.3 |

| easy_handeye_msgs | 0.4.3 |

| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

This package provides functionality and a GUI to:

-

sample the robot position and tracking system output via

tf, - compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,

- store the result of the calibration,

-

publish the result of the calibration procedure as a

tftransform at each subsequent system startup, - (optional) automatically move a robot around a starting pose via

MoveIt!to acquire the samples.

The intended result is to make it easy and straightforward to perform the calibration, and to keep it up-to-date throughout the system. Two launch files are provided to be run, respectively to perform the calibration and check its result. A further launch file can be integrated into your own launch files, to make use of the result of the calibration in a transparent way: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the

easy_handeye_demo package. This package also serves as an

example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

- eye-in-hand to compute the static transform between the reference frames of a robot’s hand effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between an Universal Robot and a Kinect through aruco. eye-on-hand can be used for vision-guided tasks.

The (arguably) best part is, that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation out, and only return the transformation you are interested in.

| eye-on-base | eye-on-hand |

|---|---|

|

|

Getting started

- clone this repository into your catkin workspace:

cd ~/catkin_ws/src # replace with path to your workspace

git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

cd .. # now we are inside ~/catkin_ws

rosdep install -iyr --from-paths src

- build

catkin build

Usage

Two launch files, one for computing and one for publishing the calibration respectively, are provided to be included in your own. The default arguments should be overridden to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new

handeye_calibrate.launchfile, which includes the robot’s and tracking system’s launch files, as well aseasy_handeye’scalibrate.launchas illustrated below in the next section “Calibration” - in each of your launch files where you need the result of the calibration, include

easy_handeye’spublish.launchas illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Description | Automated, hardware-independent Hand-Eye Calibration |

| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| easy_handeye | 0.4.3 |

| easy_handeye_msgs | 0.4.3 |

| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

This package provides functionality and a GUI to:

-

sample the robot position and tracking system output via

tf, - compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,

- store the result of the calibration,

-

publish the result of the calibration procedure as a

tftransform at each subsequent system startup, - (optional) automatically move a robot around a starting pose via

MoveIt!to acquire the samples.

The intended result is to make it easy and straightforward to perform the calibration, and to keep it up-to-date throughout the system. Two launch files are provided to be run, respectively to perform the calibration and check its result. A further launch file can be integrated into your own launch files, to make use of the result of the calibration in a transparent way: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the

easy_handeye_demo package. This package also serves as an

example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

- eye-in-hand to compute the static transform between the reference frames of a robot’s hand effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between an Universal Robot and a Kinect through aruco. eye-on-hand can be used for vision-guided tasks.

The (arguably) best part is, that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation out, and only return the transformation you are interested in.

| eye-on-base | eye-on-hand |

|---|---|

|

|

Getting started

- clone this repository into your catkin workspace:

cd ~/catkin_ws/src # replace with path to your workspace

git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

cd .. # now we are inside ~/catkin_ws

rosdep install -iyr --from-paths src

- build

catkin build

Usage

Two launch files, one for computing and one for publishing the calibration respectively, are provided to be included in your own. The default arguments should be overridden to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new

handeye_calibrate.launchfile, which includes the robot’s and tracking system’s launch files, as well aseasy_handeye’scalibrate.launchas illustrated below in the next section “Calibration” - in each of your launch files where you need the result of the calibration, include

easy_handeye’spublish.launchas illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Description | Automated, hardware-independent Hand-Eye Calibration |

| Checkout URI | https://github.com/ifl-camp/easy_handeye.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| easy_handeye | 0.4.3 |

| easy_handeye_msgs | 0.4.3 |

| rqt_easy_handeye | 0.4.3 |

README

easy_handeye: automated, hardware-independent Hand-Eye Calibration for ROS1

This package provides functionality and a GUI to:

-

sample the robot position and tracking system output via

tf, - compute the eye-on-base or eye-in-hand calibration matrix through the OpenCV library’s Tsai-Lenz algorithm implementation,

- store the result of the calibration,

-

publish the result of the calibration procedure as a

tftransform at each subsequent system startup, - (optional) automatically move a robot around a starting pose via

MoveIt!to acquire the samples.

The intended result is to make it easy and straightforward to perform the calibration, and to keep it up-to-date throughout the system. Two launch files are provided to be run, respectively to perform the calibration and check its result. A further launch file can be integrated into your own launch files, to make use of the result of the calibration in a transparent way: if the calibration is performed again, the updated result will be used without further action required.

You can try out this software in a simulator, through the

easy_handeye_demo package. This package also serves as an

example for integrating easy_handeye into your own launch scripts.

NOTE: a (development) ROS2 version of this package is available here

News

- version 0.4.3

- documentation and bug fixes

- version 0.4.2

- fixes for the freehand robot movement scenario

- version 0.4.1

- fixed a bug that prevented loading and publishing the calibration - thanks to @lyh458!

- version 0.4.0

- switched to OpenCV as a backend for the algorithm implementation

- added UI element to pick the calibration algorithm (Tsai-Lenz, Park, Horaud, Andreff, Daniilidis)

- version 0.3.1

- restored compatibility with Melodic and Kinetic along with Noetic

- version 0.3.0

- ROS Noetic compatibility

- added “evaluator” GUI to evaluate the accuracy of the calibration while running

check_calibration.launch

Use Cases

If you are unfamiliar with Tsai’s hand-eye calibration [1], it can be used in two ways:

- eye-in-hand to compute the static transform between the reference frames of a robot’s hand effector and that of a tracking system, e.g. the optical frame of an RGB camera used to track AR markers. In this case, the camera is mounted on the end-effector, and you place the visual target so that it is fixed relative to the base of the robot; for example, you can place an AR marker on a table.

- eye-on-base to compute the static transform from a robot’s base to a tracking system, e.g. the optical frame of a camera standing on a tripod next to the robot. In this case you can attach a marker, e.g. an AR marker, to the end-effector of the robot.

A relevant example of an eye-on-base calibration is finding the position of an RGBD camera with respect to a robot for object collision avoidance, e.g. with MoveIt!: an example launch file is provided to perform this common task between an Universal Robot and a Kinect through aruco. eye-on-hand can be used for vision-guided tasks.

The (arguably) best part is, that you do not have to care about the placement of the auxiliary marker (the one on the table in the eye-in-hand case, or on the robot in the eye-on-base case). The algorithm will “erase” that transformation out, and only return the transformation you are interested in.

| eye-on-base | eye-on-hand |

|---|---|

|

|

Getting started

- clone this repository into your catkin workspace:

cd ~/catkin_ws/src # replace with path to your workspace

git clone https://github.com/IFL-CAMP/easy_handeye

- satisfy dependencies

cd .. # now we are inside ~/catkin_ws

rosdep install -iyr --from-paths src

- build

catkin build

Usage

Two launch files, one for computing and one for publishing the calibration respectively, are provided to be included in your own. The default arguments should be overridden to specify the correct tf reference frames, and to avoid conflicts when using multiple calibrations at once.

The suggested integration is:

- create a new

handeye_calibrate.launchfile, which includes the robot’s and tracking system’s launch files, as well aseasy_handeye’scalibrate.launchas illustrated below in the next section “Calibration” - in each of your launch files where you need the result of the calibration, include

easy_handeye’spublish.launchas illustrated below in the section “Publishing”

Calibration

For both use cases, you can either launch the calibrate.launch

File truncated at 100 lines see the full file


