
Repository Summary

| Field | Value |
|---|---|
| Description | |
| Checkout URI | https://github.com/airs-cuhk/airship.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2025-07-18 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| airship_chat | 0.0.0 |
| airship_description | 0.0.0 |
| airship_grasp | 0.0.0 |
| airship_interface | 0.0.1 |
| airship_localization | 0.0.0 |
| airship_navigation | 0.0.0 |
| airship_object | 0.0.0 |
| airship_perception | 0.0.0 |
| airship_planner | 0.0.0 |


AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to the AIRSHIP repository! AIRSHIP is an open-source embodied AI robotic software stack that empowers various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robot builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP can understand natural language instructions and execute navigation and grasping tasks based on them. The current AIRSHIP robot form factor is a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. AIRSHIP is evolving rapidly, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisites

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereolabs ZED 2

Wheeled robot

- AirsBot2

Robotic arm and gripper

- Elephant Robotics myCobot 630

- Elephant Robotics Pro Adaptive Gripper

Software on Jetson Orin AGX

- JetPack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- Python 3.10

- PyTorch 2.3.0

Hardware Architecture

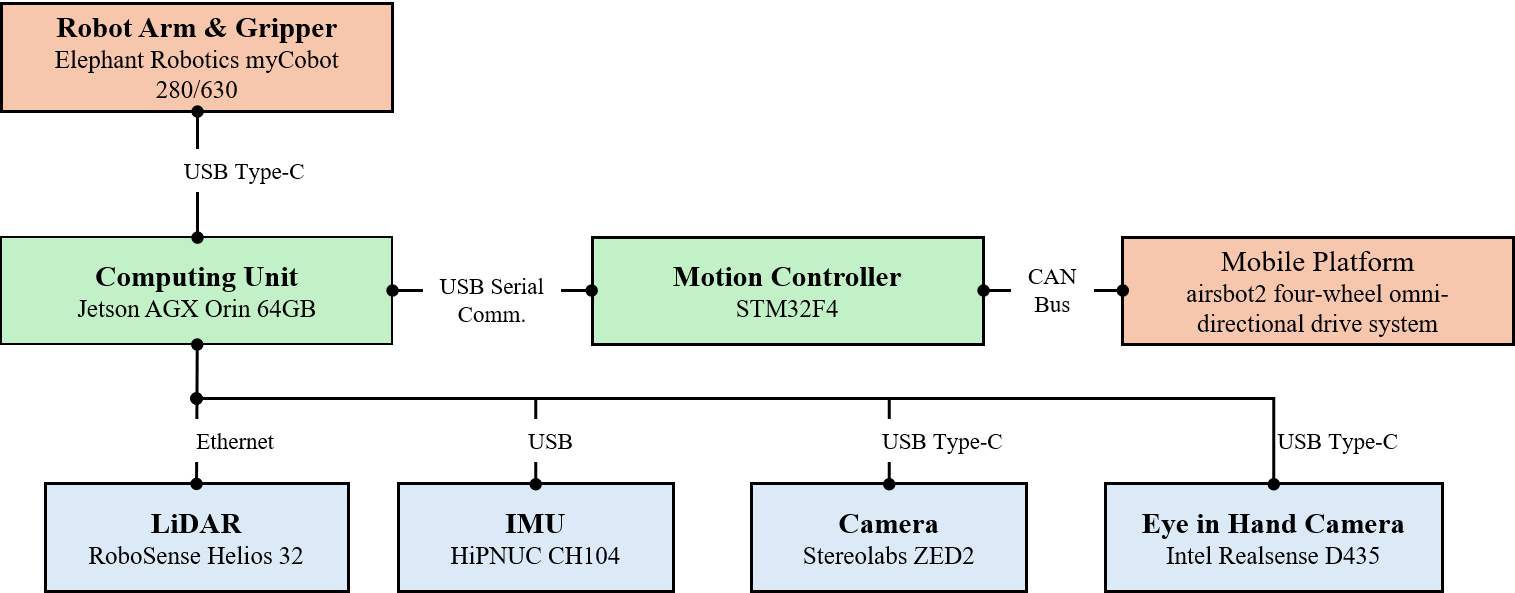

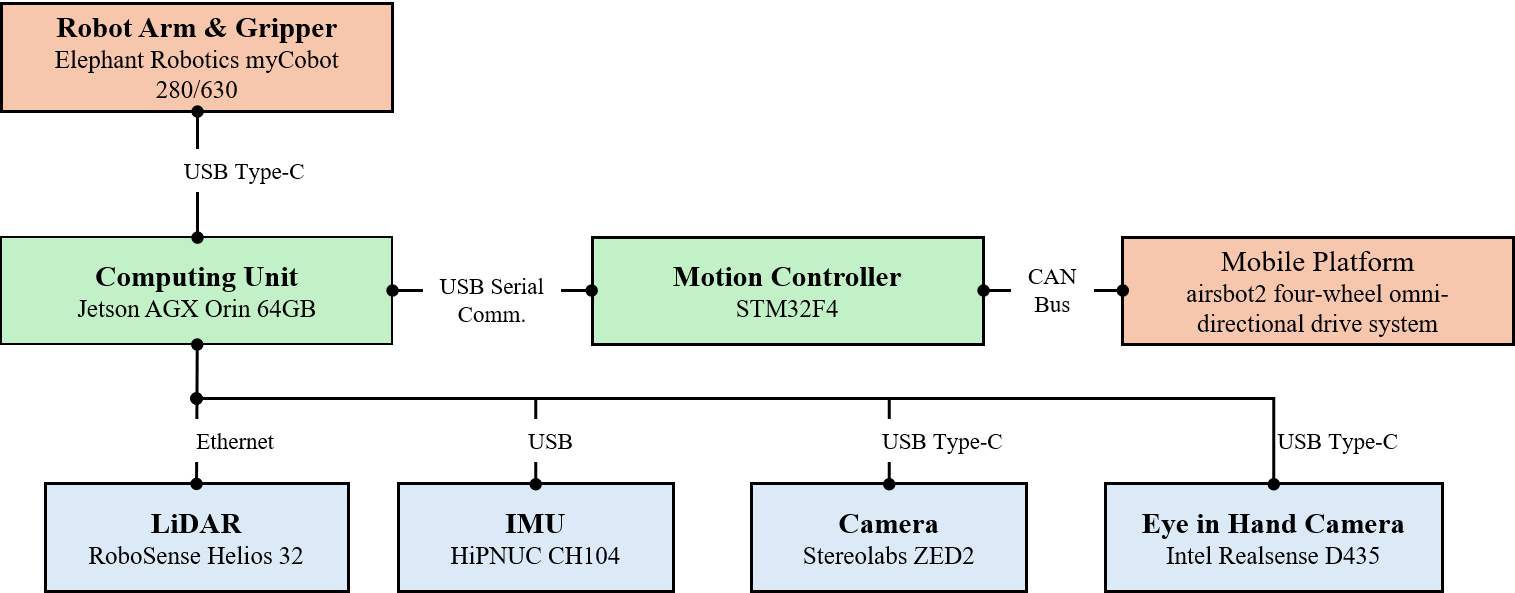

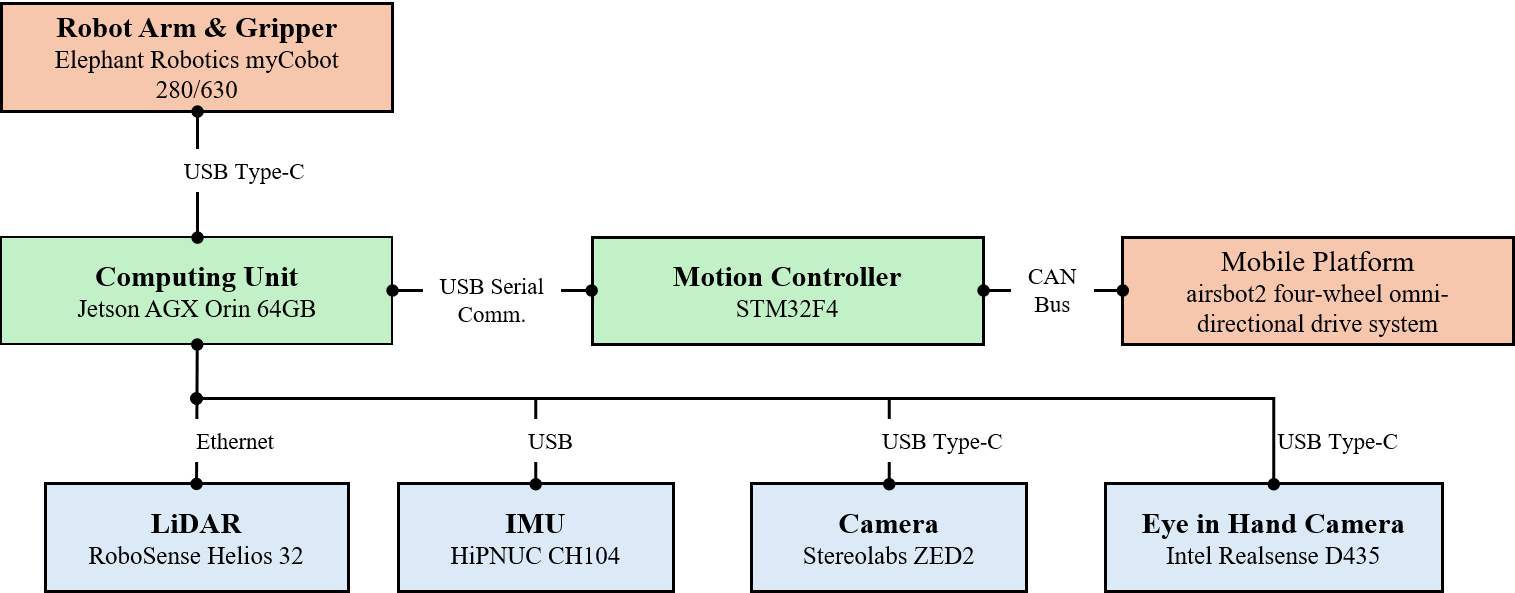

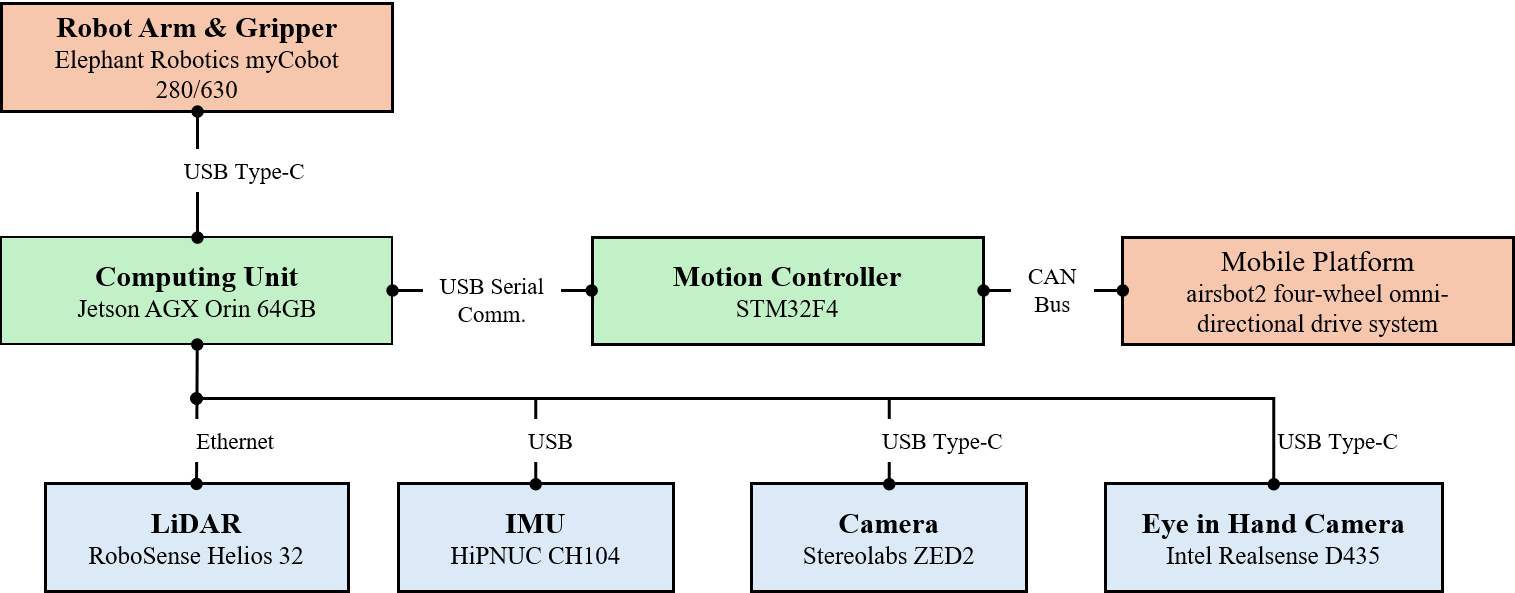

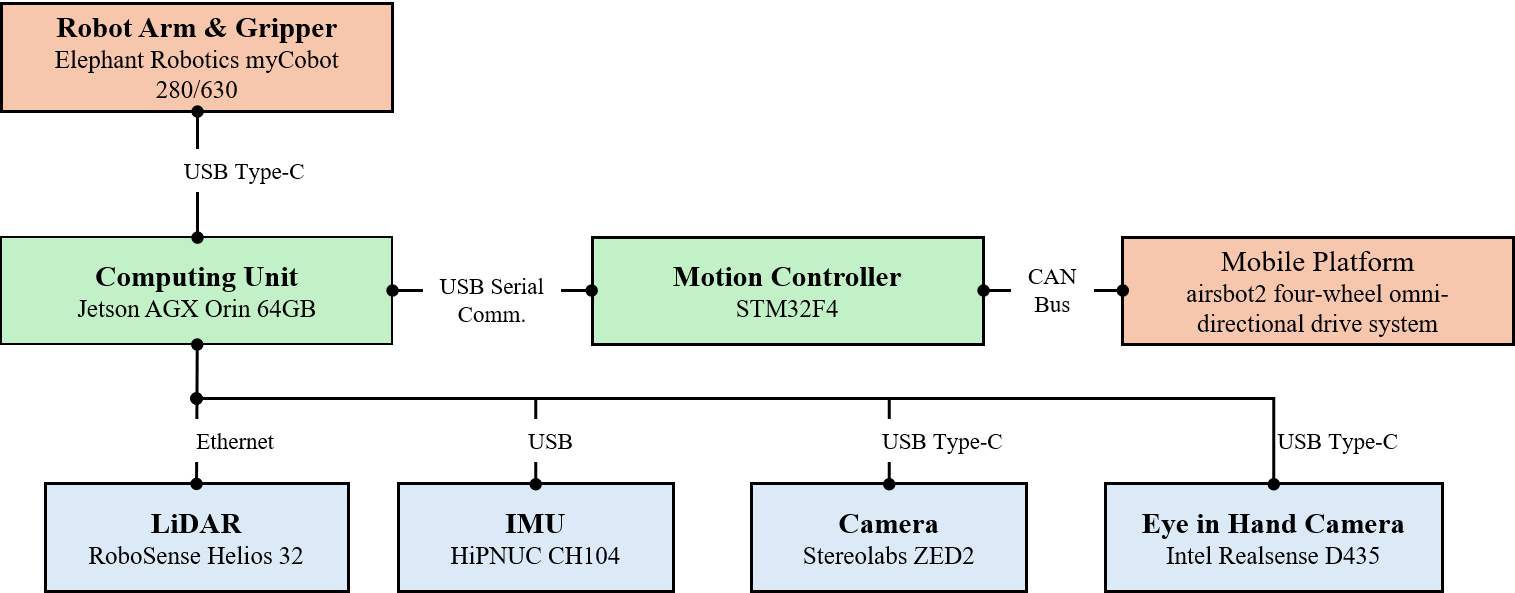

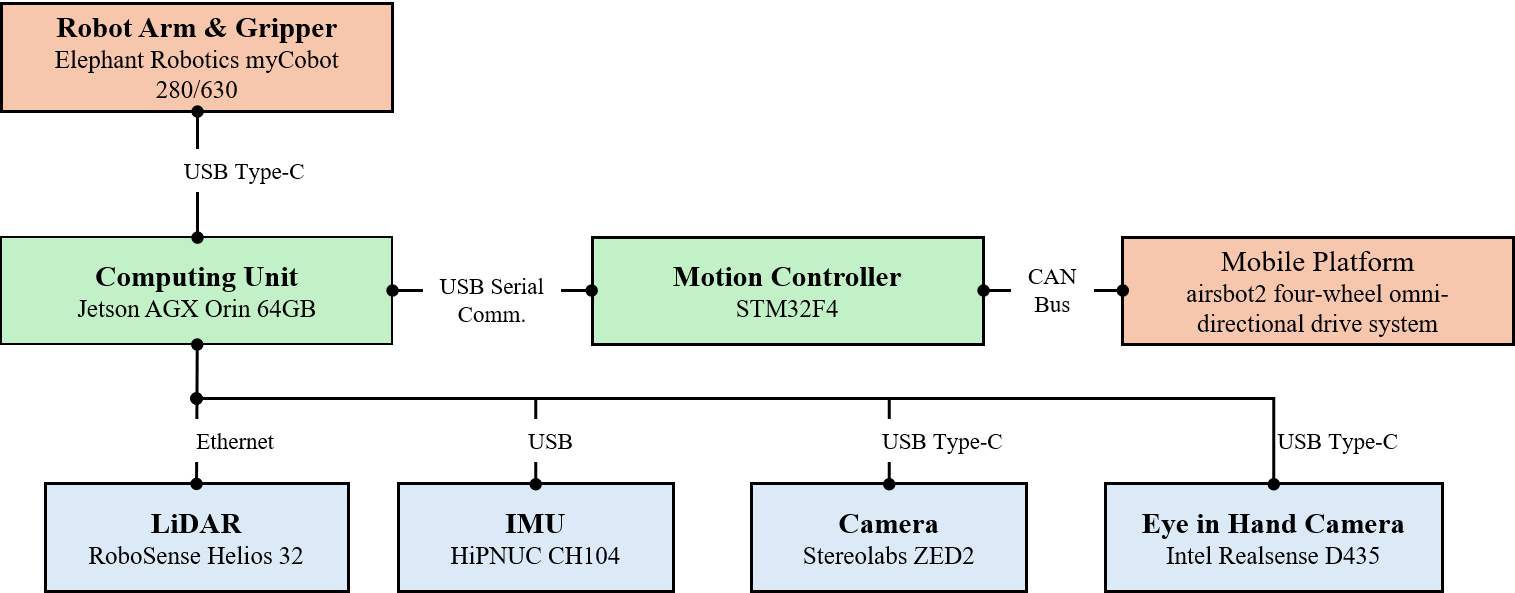

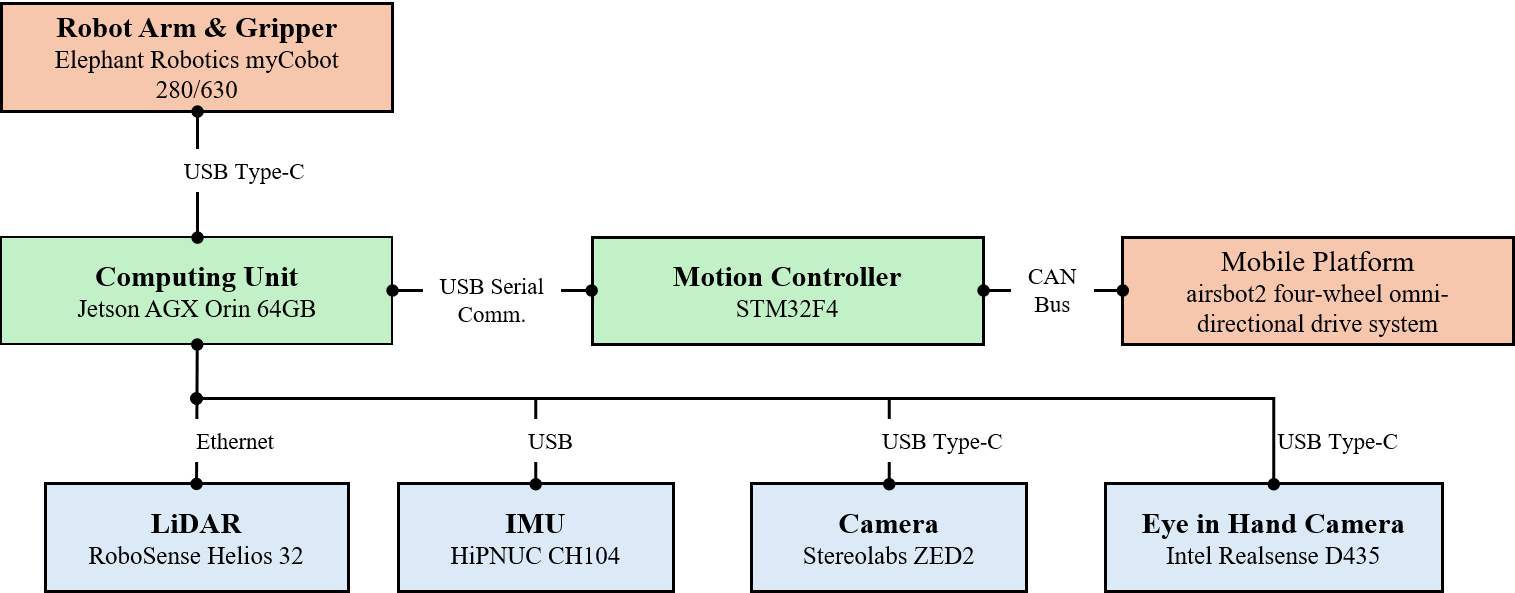

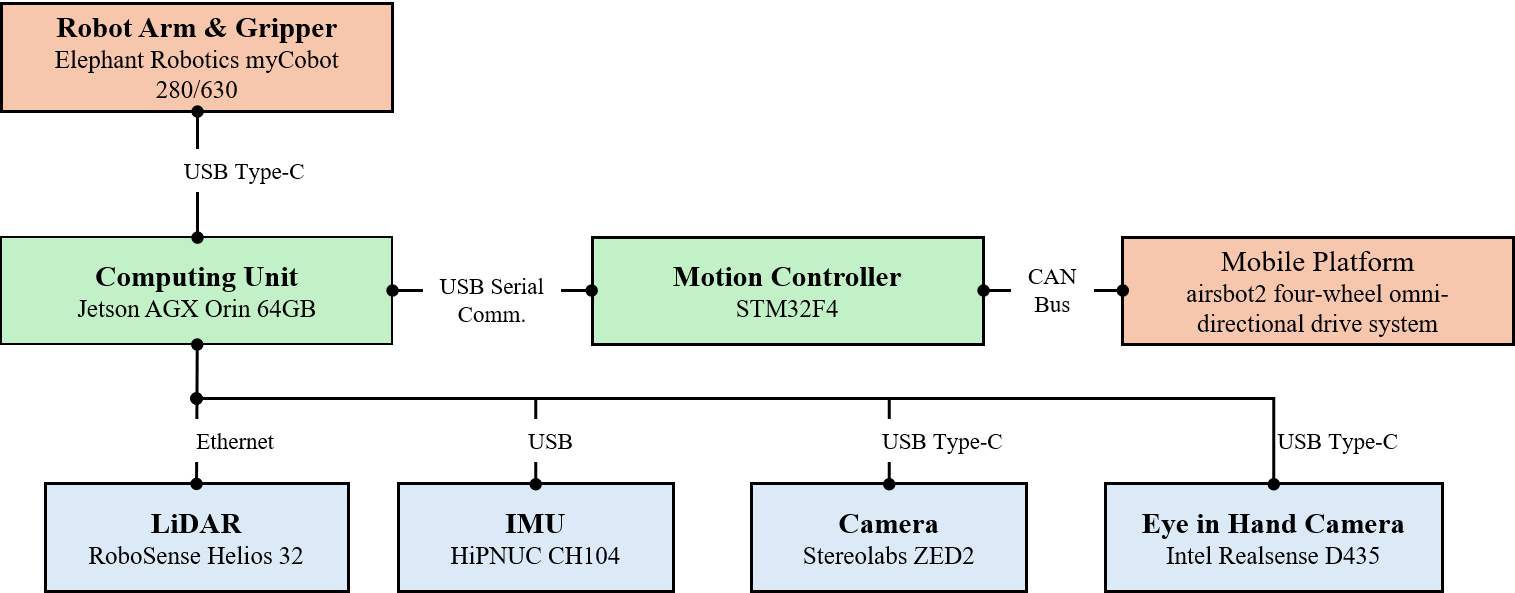

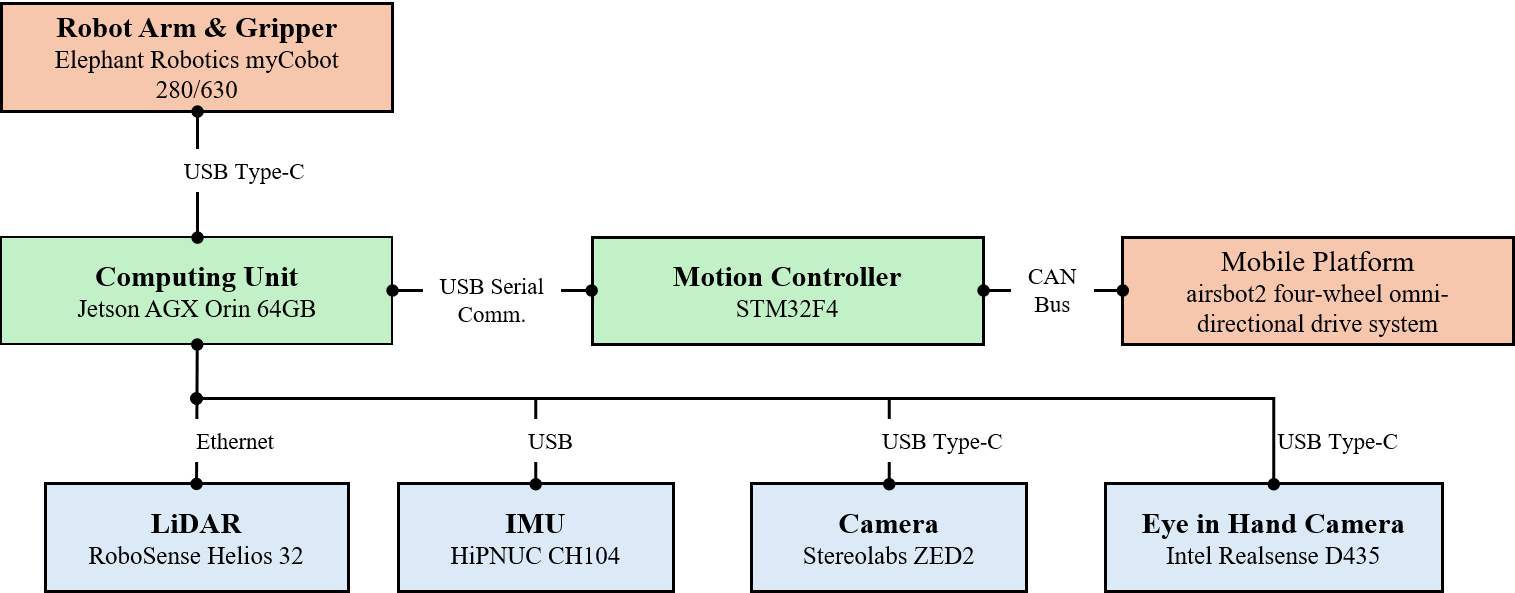

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The figures above illustrate the AIRSHIP robot’s hardware architecture.

Software Architecture

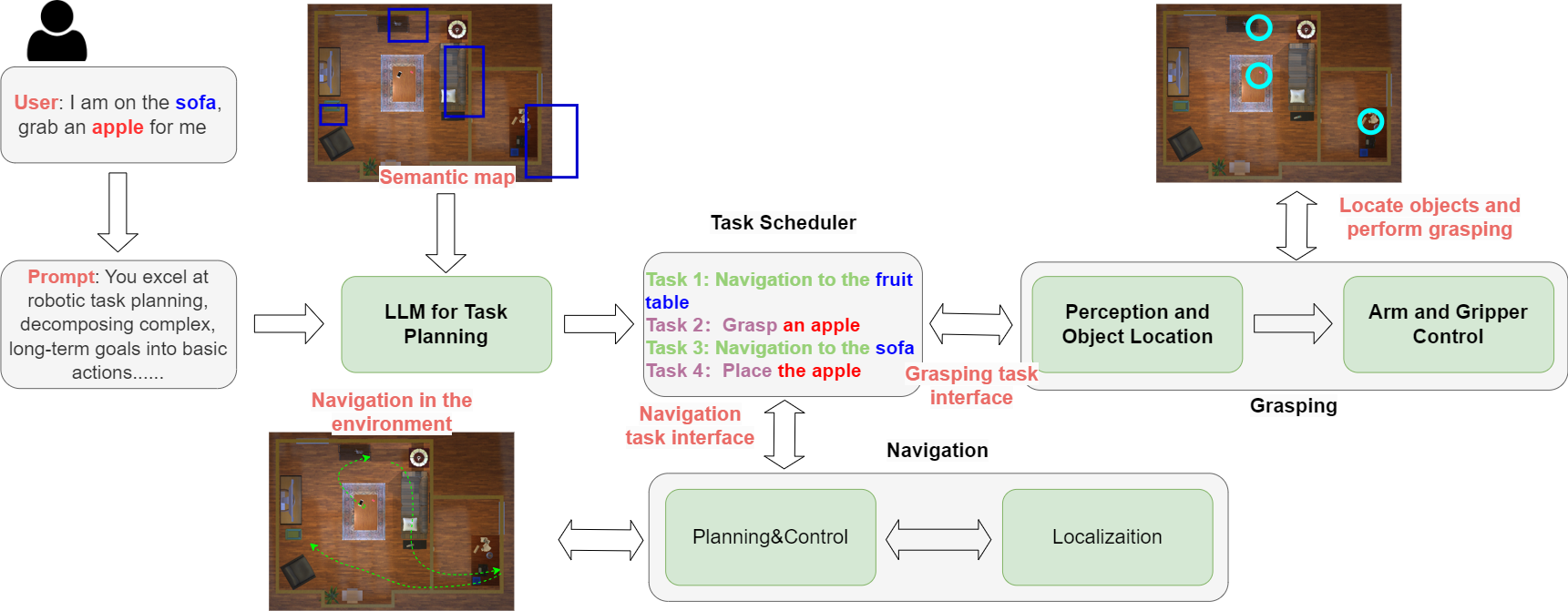

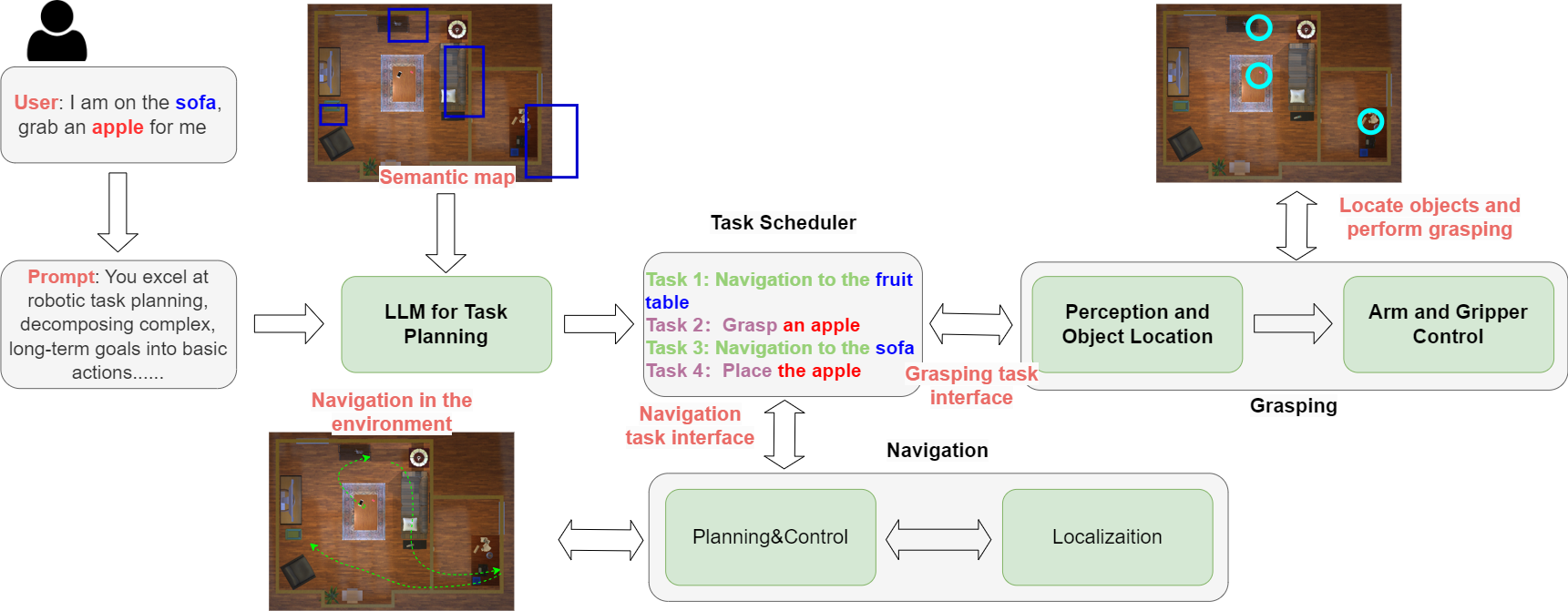

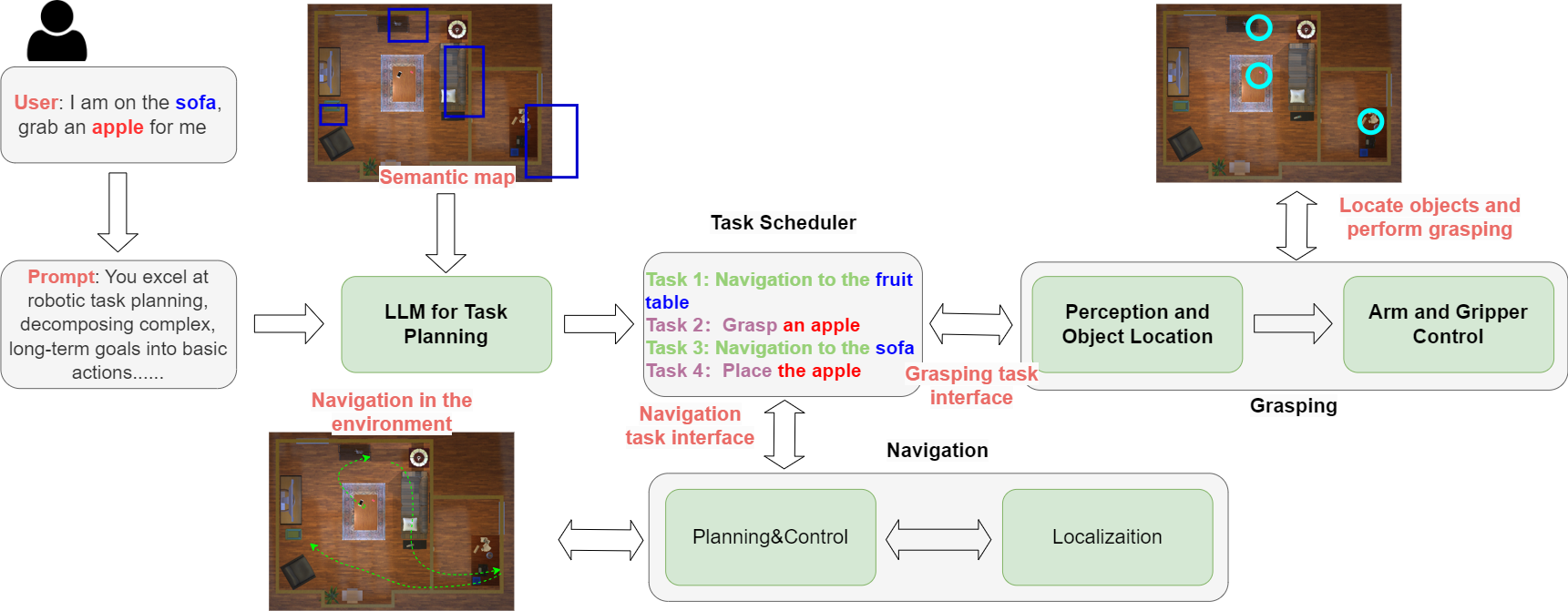

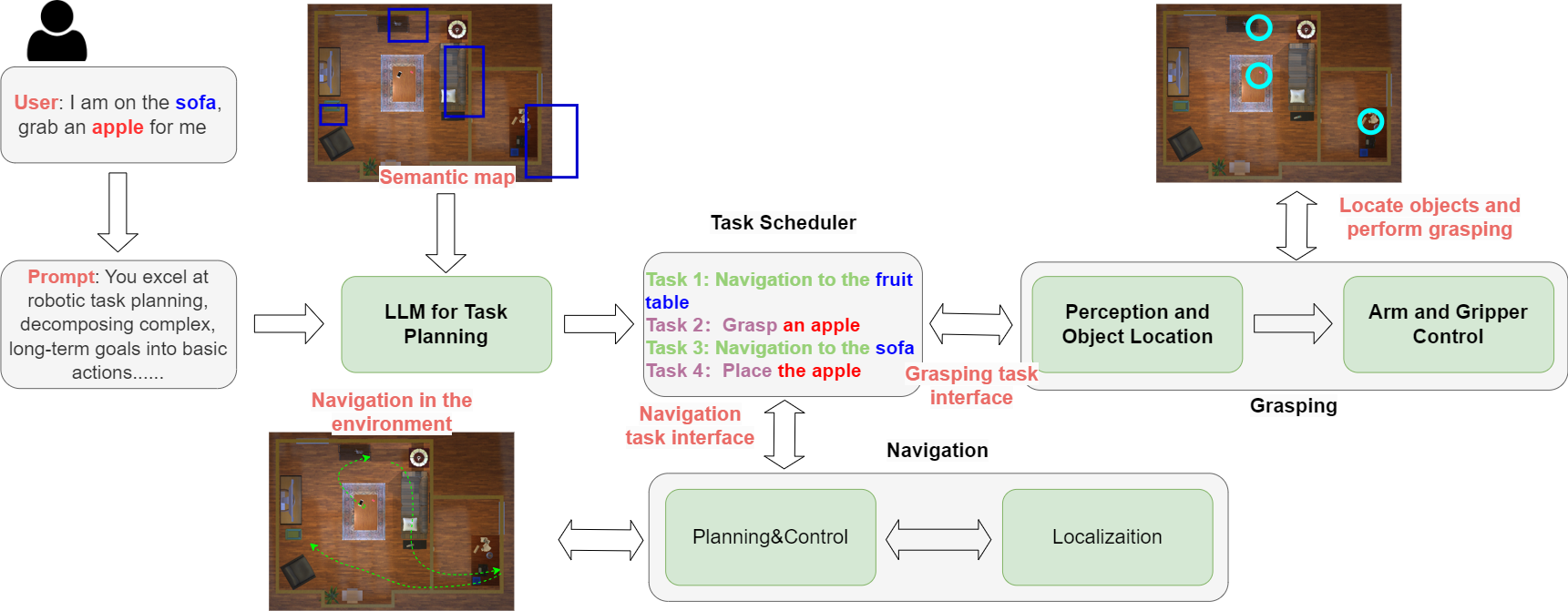

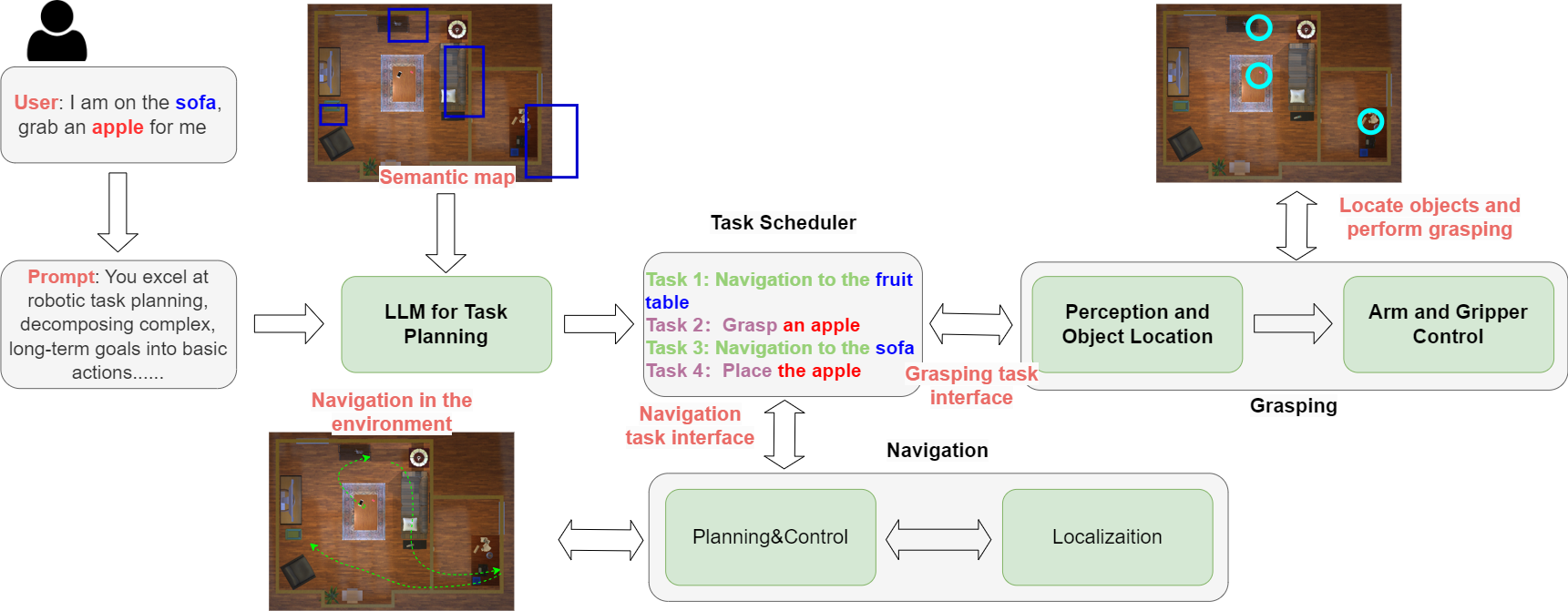

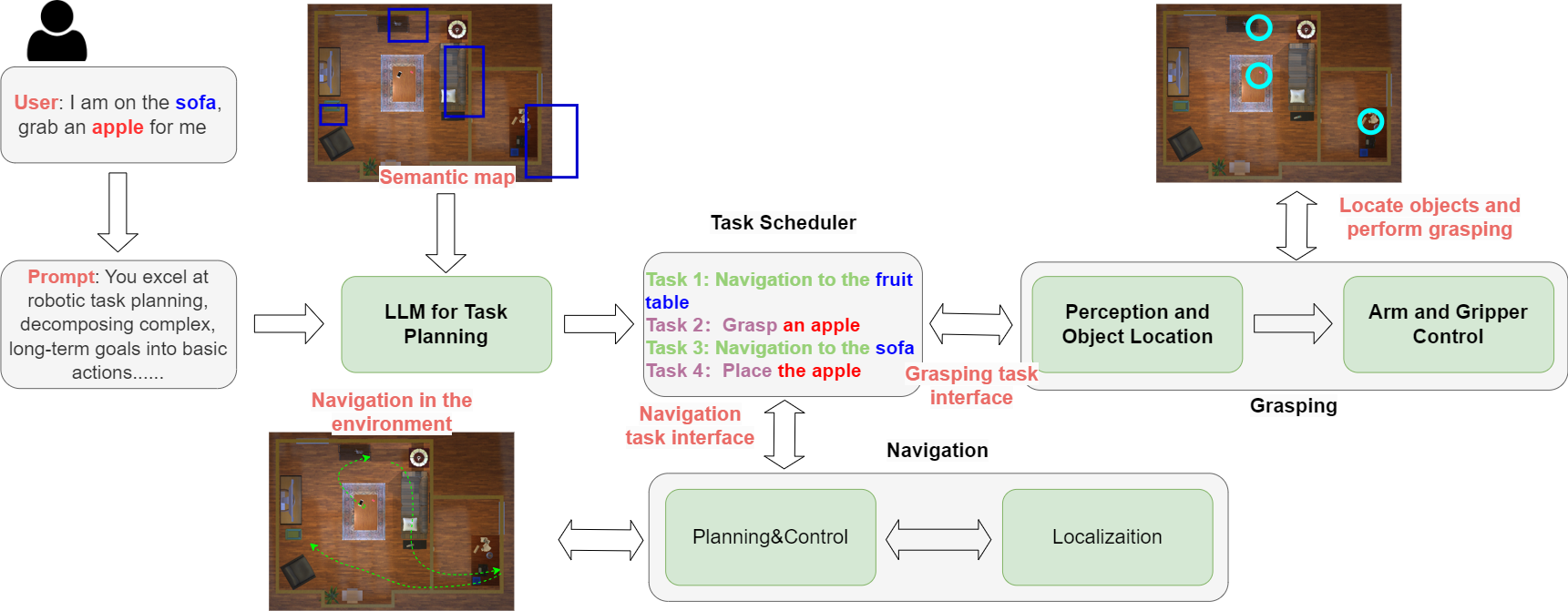

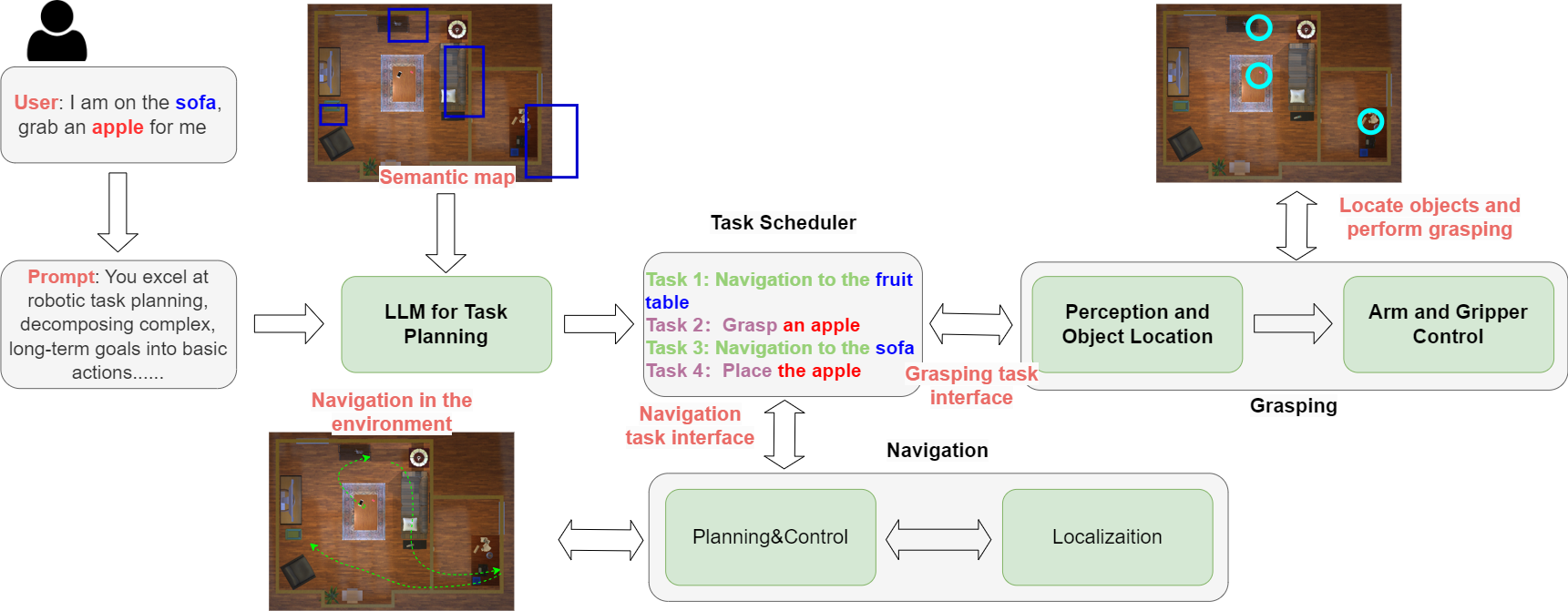

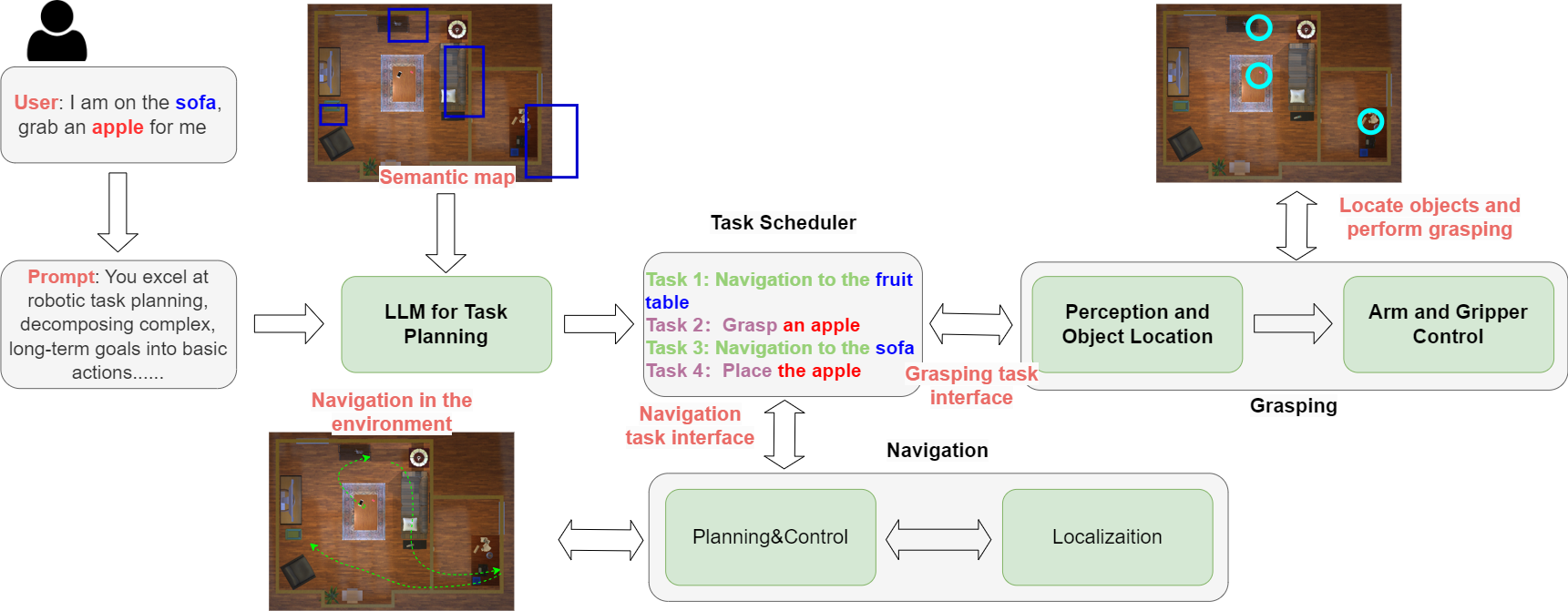

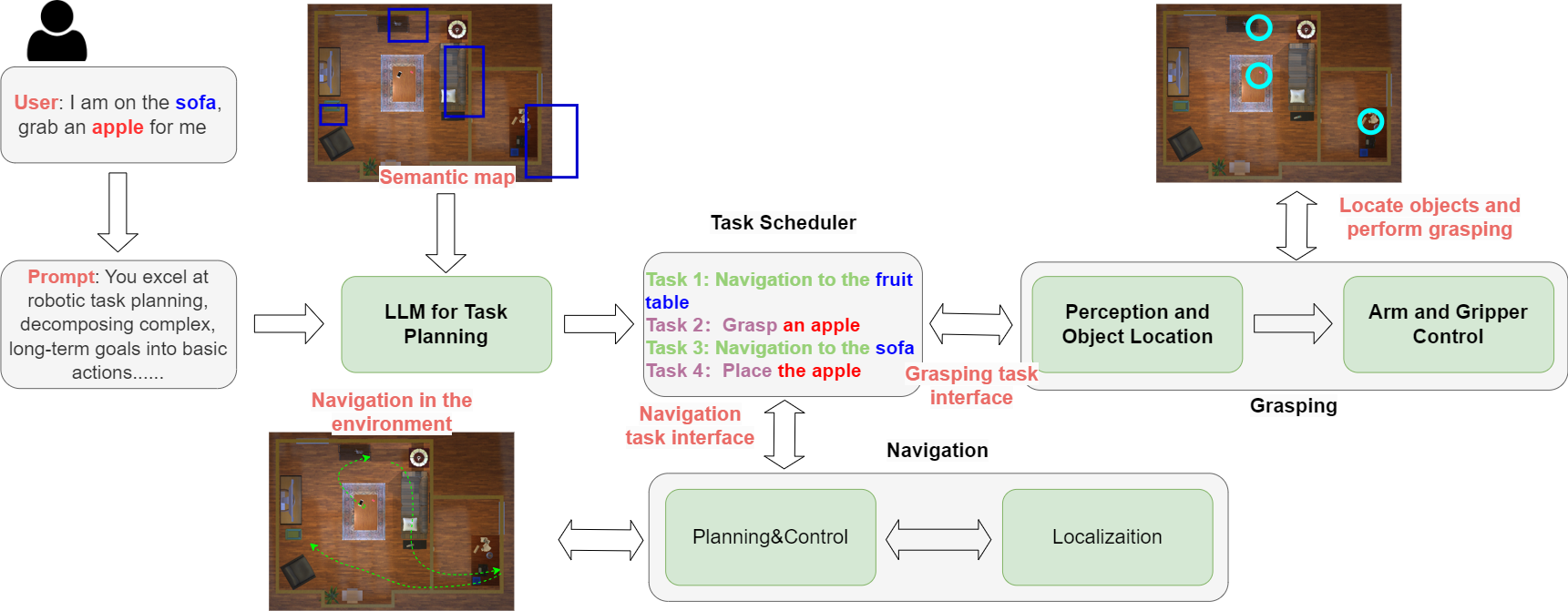

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.
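The decomposition described above can be pictured as a small data flow: the LLM turns an instruction into an ordered list of navigation and grasping actions, and the semantic map resolves each navigation target to a map pose. The sketch below is a minimal illustration under stated assumptions; the `Action` structure, the `SEMANTIC_MAP` contents, and the `resolve_plan` helper are hypothetical and not AIRSHIP's actual interfaces, and the LLM output is hard-coded rather than produced by a model.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw) in the map frame


# Hypothetical action emitted by the LLM task planner (not AIRSHIP's real message type).
@dataclass
class Action:
    kind: str    # "navigate" or "grasp"
    target: str  # semantic name, e.g. "kitchen_table" or "cup"


# Hypothetical semantic map: object or place name -> pose in the map frame.
SEMANTIC_MAP: Dict[str, Pose2D] = {
    "kitchen_table": (2.5, 1.0, 0.0),
    "shelf": (-1.2, 3.4, 1.57),
}


def resolve_plan(actions: List[Action], semantic_map: Dict[str, Pose2D]):
    """Turn semantic actions into executable steps.

    Navigation targets are looked up in the semantic map and become map poses.
    Grasp targets stay semantic, because the perception pipeline later finds
    the object in the camera image.
    """
    steps = []
    for action in actions:
        if action.kind == "navigate":
            if action.target not in semantic_map:
                raise KeyError(f"unknown location: {action.target}")
            steps.append(("navigate", semantic_map[action.target]))
        elif action.kind == "grasp":
            steps.append(("grasp", action.target))
        else:
            raise ValueError(f"unsupported action: {action.kind}")
    return steps


# What an LLM might return for "Go to the kitchen table and pick up the cup".
plan = [Action("navigate", "kitchen_table"), Action("grasp", "cup")]
print(resolve_plan(plan, SEMANTIC_MAP))
# [('navigate', (2.5, 1.0, 0.0)), ('grasp', 'cup')]
```

Keeping grasp targets as names rather than coordinates reflects the split described above: the semantic map bridges navigation goals, while object localization for grasping is left to the vision models.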

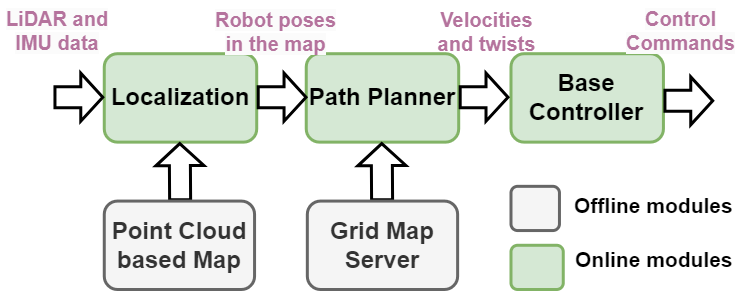

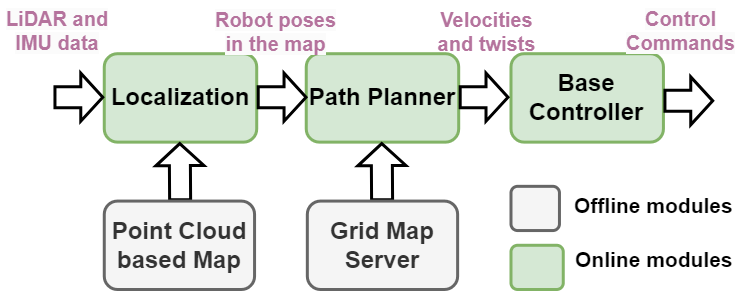

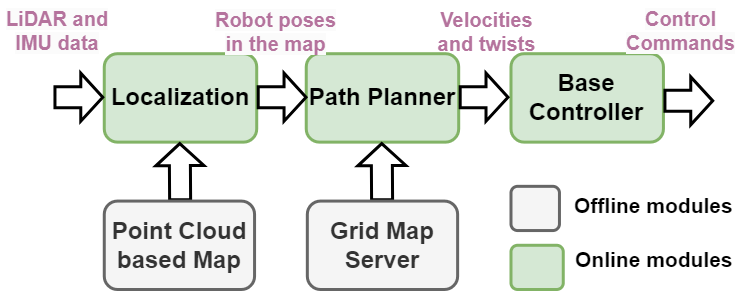

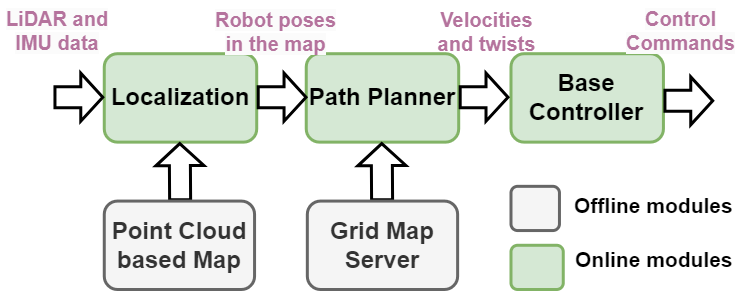

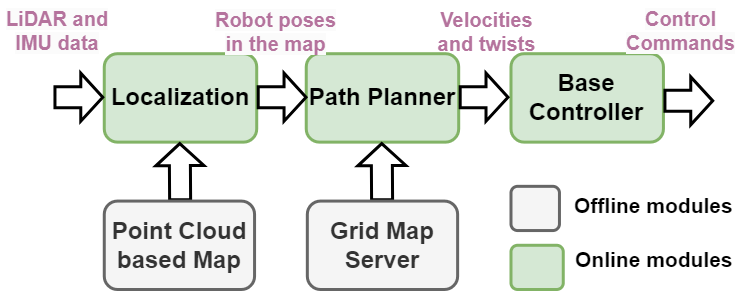

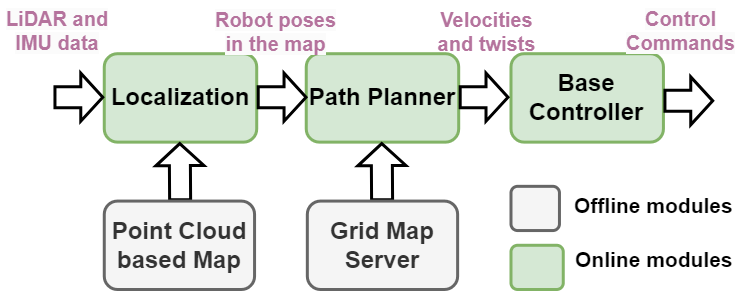

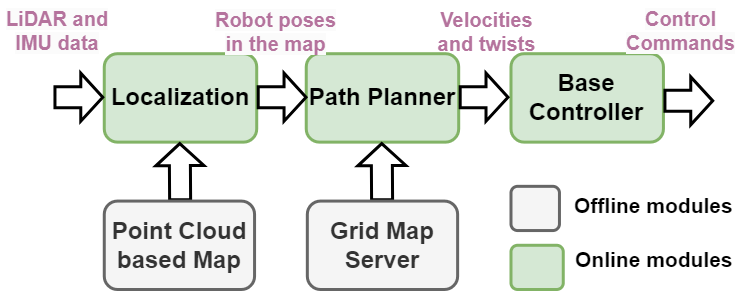

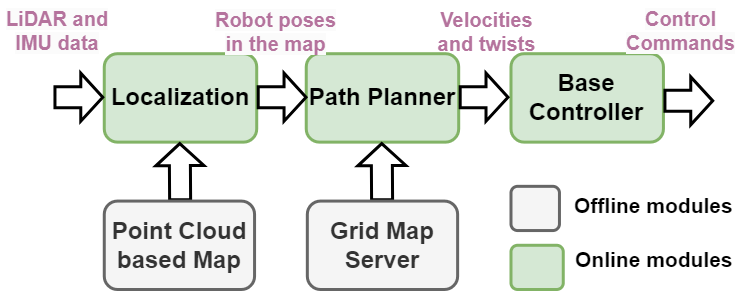

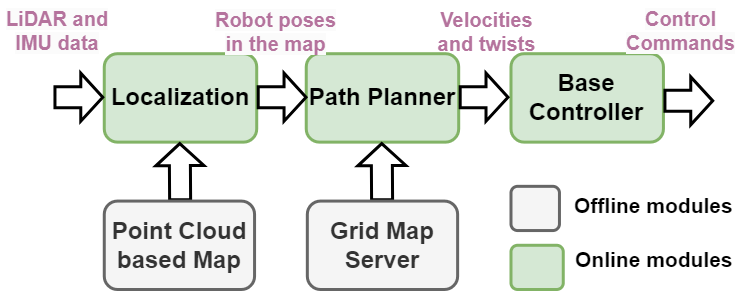

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both global and local planners. The path planner then sends velocity (twist) commands to the base controller, which produces the control signals needed to follow the planned path.
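To make the planner-to-controller hand-off concrete, here is a minimal pure pursuit sketch that turns a localized pose and a list of path waypoints into a (linear, angular) velocity pair, the same quantities a ROS `geometry_msgs/Twist` message carries in `linear.x` and `angular.z`. Pure pursuit is a swapped-in textbook technique: the text above does not say which local planner AIRSHIP uses, and the function name and default parameters are hypothetical.

```python
import math
from typing import List, Tuple


def pure_pursuit_twist(pose: Tuple[float, float, float],
                       path: List[Tuple[float, float]],
                       lookahead: float = 0.5,
                       linear_speed: float = 0.3,
                       goal_tolerance: float = 0.05) -> Tuple[float, float]:
    """Return (v, omega): forward speed and yaw rate for a differential-drive base.

    pose: robot (x, y, yaw) in the map frame, as estimated by localization.
    path: planned (x, y) waypoints in the map frame, as produced by the planner.
    lookahead and goal_tolerance are assumed to be positive.
    """
    x, y, yaw = pose
    # Stop when there is no path or the final waypoint has been reached.
    if not path or math.hypot(path[-1][0] - x, path[-1][1] - y) < goal_tolerance:
        return 0.0, 0.0
    # Chase the first waypoint at least `lookahead` metres away (else the last one).
    goal = path[-1]
    for wx, wy in path:
        if math.hypot(wx - x, wy - y) >= lookahead:
            goal = (wx, wy)
            break
    # Rotate the map-frame offset into the robot frame.
    dx, dy = goal[0] - x, goal[1] - y
    local_x = math.cos(yaw) * dx + math.sin(yaw) * dy
    local_y = -math.sin(yaw) * dx + math.cos(yaw) * dy
    dist_sq = local_x ** 2 + local_y ** 2
    # Curvature of the arc through the goal point: kappa = 2 * y_local / L^2.
    curvature = 2.0 * local_y / dist_sq
    return linear_speed, linear_speed * curvature


# Goal ahead and to the left of a robot at the origin facing +x.
print(pure_pursuit_twist((0.0, 0.0, 0.0), [(1.0, 1.0)]))
# (0.3, 0.3): positive yaw rate, i.e. turn left toward the goal
```

A production controller would also ramp speed down near the goal and respect acceleration limits; the sketch keeps a constant forward speed to show only the geometry.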

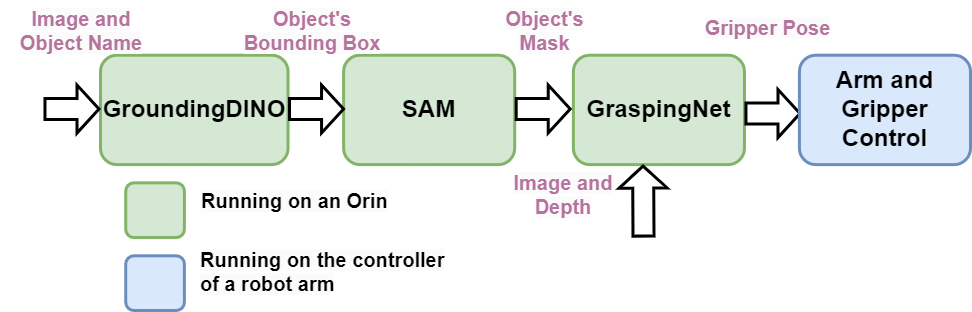

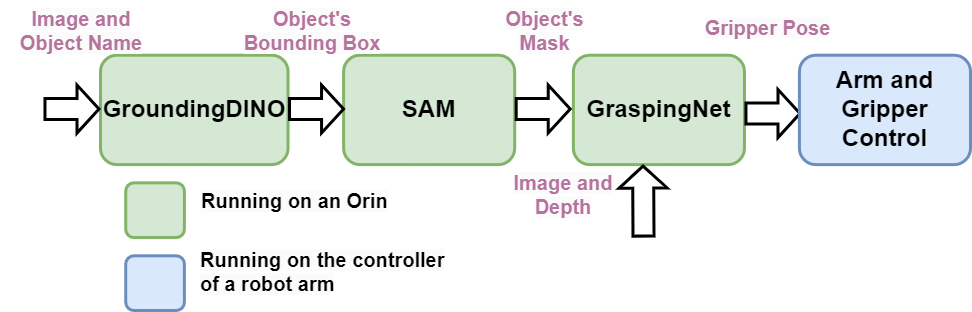

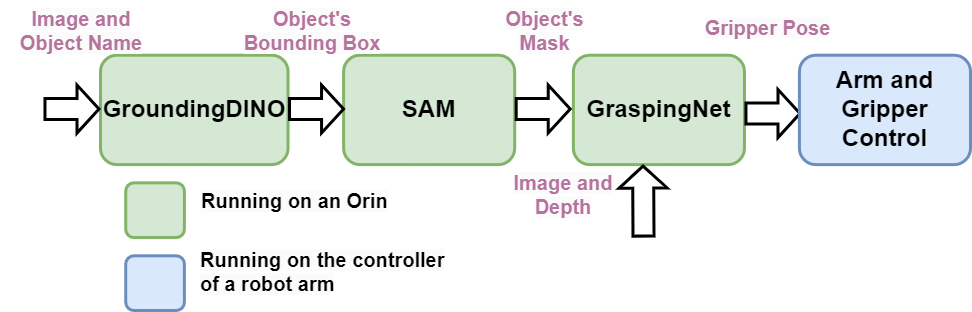

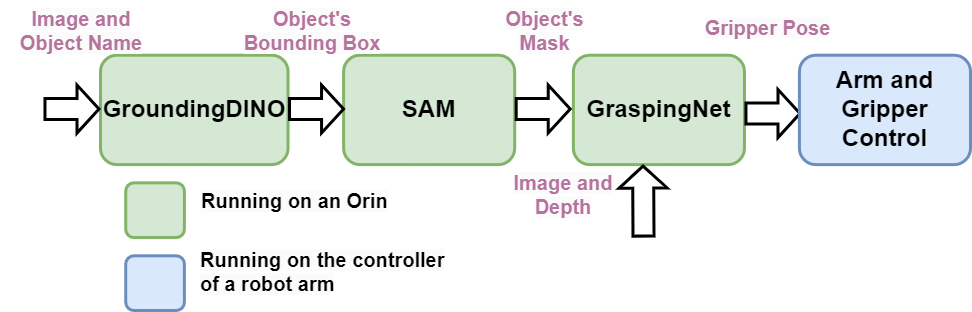

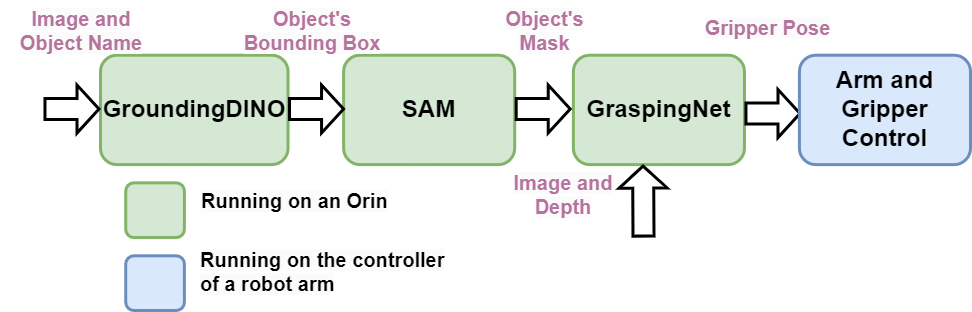

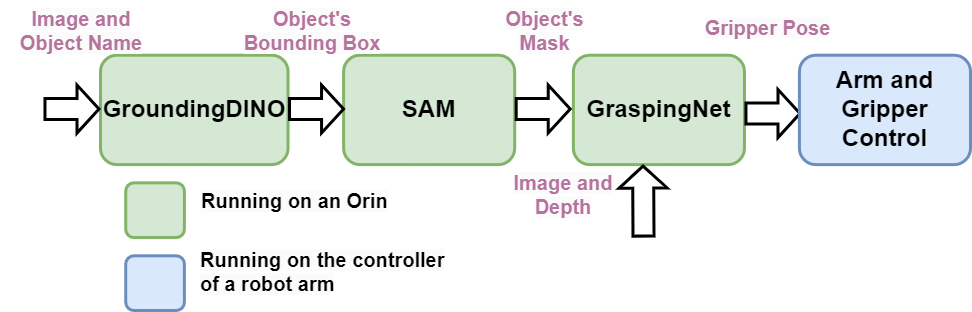

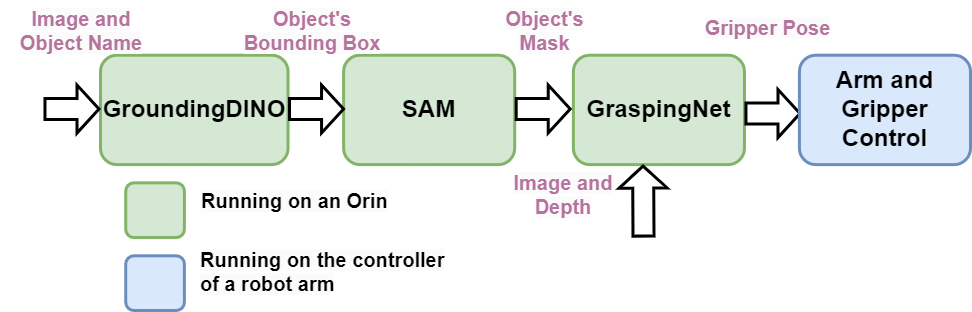

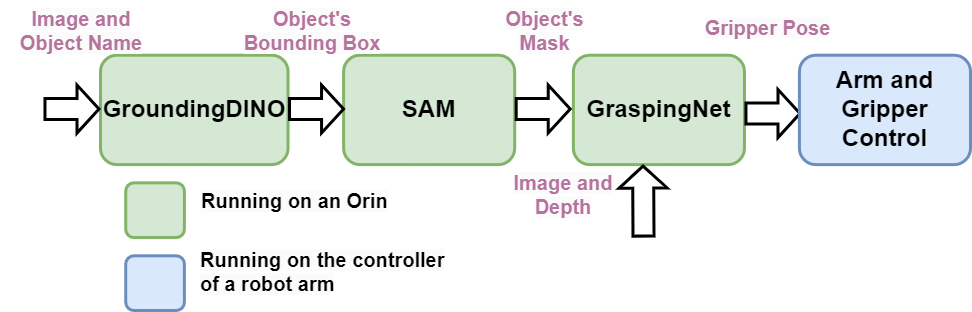

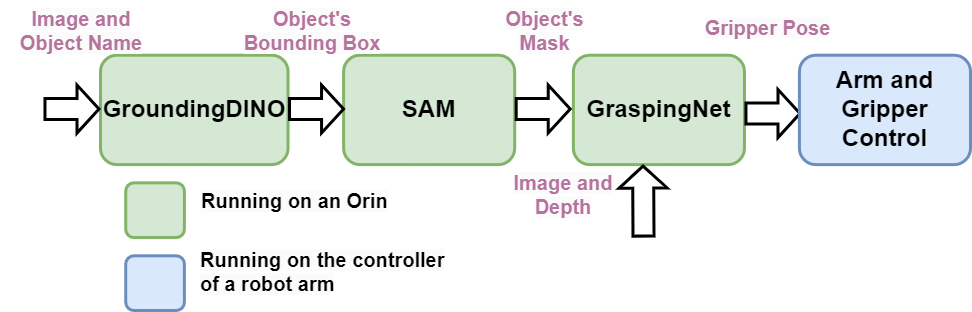

Grasping Software

The grasping software pipeline operates as follows. GroundingDINO receives an image and an object name and outputs the object’s bounding box within the image. SAM uses this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce candidate gripper poses for all objects in the scene. The object mask is then used to filter these poses and select the best grasp for the target object.
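The final filtering step can be illustrated with a short sketch: project each candidate grasp center into the image with a pinhole camera model, keep the grasps that land on the object mask, and take the one with the highest score. This is an assumption-laden illustration, not AIRSHIP's code: the `Grasp` fields, the camera intrinsics (`fx`, `fy`, `cx`, `cy`), and the rule of testing only the grasp center are hypothetical, and the mask is a plain nested list standing in for SAM's output.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Grasp:
    x: float      # grasp center in the camera frame (metres)
    y: float
    z: float      # depth along the optical axis; must be > 0 to project
    score: float  # grasp quality predicted by the grasp network


def project(g: Grasp, fx: float, fy: float, cx: float, cy: float) -> Tuple[int, int]:
    """Pinhole projection of a camera-frame point to the nearest pixel (u, v)."""
    u = fx * g.x / g.z + cx
    v = fy * g.y / g.z + cy
    return int(round(u)), int(round(v))


def best_grasp_in_mask(grasps: List[Grasp], mask: Sequence[Sequence[bool]],
                       fx: float, fy: float, cx: float, cy: float) -> Optional[Grasp]:
    """Keep grasps whose center projects onto the object mask; return the top-scoring one."""
    height, width = len(mask), len(mask[0])
    on_object = []
    for g in grasps:
        if g.z <= 0:
            continue  # point is behind the camera and cannot be projected
        u, v = project(g, fx, fy, cx, cy)
        if 0 <= v < height and 0 <= u < width and mask[v][u]:
            on_object.append(g)
    return max(on_object, key=lambda g: g.score, default=None)


# Toy 4x4 mask: the target object occupies the central 2x2 pixels.
mask = [
    [False, False, False, False],
    [False, True,  True,  False],
    [False, True,  True,  False],
    [False, False, False, False],
]
grasps = [
    Grasp(1.0, 1.0, 1.0, score=0.6),   # projects to pixel (1, 1): on the object
    Grasp(2.0, 2.0, 1.0, score=0.9),   # projects to pixel (2, 2): on the object
    Grasp(0.0, 0.0, 1.0, score=0.95),  # projects to pixel (0, 0): background
]
best = best_grasp_in_mask(grasps, mask, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(best.score)  # 0.9
```

Note that the highest-scoring grasp overall (0.95) is rejected because it lies on the background, which is exactly the role the mask plays in the pipeline described above.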

Modules and Folders

- airship_chat: the voice user interface module, which translates spoken language into text-based instructions

- airship_grasp: the grasping module

- airship_interface: defines the topic and service messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM-based task planner module

- airship_object: the semantic map module

- airship_description: contains the URDF file, which defines the extrinsic parameters of the robot links

- docs: system setup on Orin without Docker

- script: scripts for installing ROS and Docker
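As an illustration of how a URDF encodes extrinsic parameters, the sketch below reads a fixed joint's `<origin xyz=... rpy=...>` and uses it to map a point from a child link (for example, a camera) into its parent link. The joint and link names (`camera_joint`, `base_link`, `camera_link`) and the numeric values are hypothetical, not taken from `airship_description`. The URDF specification defines `rpy` as fixed-axis roll, pitch, then yaw, which gives R = Rz(yaw) · Ry(pitch) · Rx(roll).

```python
import math
import xml.etree.ElementTree as ET

# Hypothetical URDF fragment for illustration; the real one lives in airship_description.
URDF = """
<robot name="example">
  <link name="base_link"/>
  <link name="camera_link"/>
  <joint name="camera_joint" type="fixed">
    <parent link="base_link"/>
    <child link="camera_link"/>
    <origin xyz="0.2 0.0 0.5" rpy="0 0 1.5707963267948966"/>
  </joint>
</robot>
"""


def rpy_to_matrix(roll, pitch, yaw):
    """URDF fixed-axis roll/pitch/yaw to a 3x3 rotation: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def joint_extrinsics(urdf_text, joint_name):
    """Return (R, t) from a joint's <origin>; it maps child-link points into the parent link."""
    root = ET.fromstring(urdf_text.strip())
    for joint in root.iter("joint"):
        if joint.get("name") == joint_name:
            origin = joint.find("origin")
            attrs = origin.attrib if origin is not None else {}  # <origin> is optional
            xyz = [float(v) for v in attrs.get("xyz", "0 0 0").split()]
            rpy = [float(v) for v in attrs.get("rpy", "0 0 0").split()]
            return rpy_to_matrix(*rpy), xyz
    raise KeyError(f"joint not found: {joint_name}")


def child_to_parent(point, R, t):
    """p_parent = R @ p_child + t"""
    return [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]


R, t = joint_extrinsics(URDF, "camera_joint")
# A point 1 m along the camera link's x axis, expressed in base_link.
p_base = child_to_parent([1.0, 0.0, 0.0], R, t)
print([round(c, 6) for c in p_base])  # [0.2, 1.0, 0.5]
```

In a running ROS 2 system this transform would normally be published from the URDF by `robot_state_publisher` and queried through tf2 rather than parsed by hand; the manual version only shows what the numbers mean.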

System Setup

If you prefer not to use Docker, refer to the setup instructions in “./docs/README.md”.

System setup on Nvidia Orin with Docker

- Log in to your Nvidia Orin.

- Download the AIRSHIP package from the repository.

```bash
# ${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.
# Here we take $HOME/airship as an example.
DIR_AIRSHIP=$HOME/airship
mkdir -p "${DIR_AIRSHIP}/src"
```

File truncated at 100 lines; see the full file.

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robotic builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP is capable of understanding natural language instructions and executing navigation and grasping tasks based on those instructions. The current AIRSHIP robot form factor features a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. However, AIRSHIP is rapidly evolving, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisits

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereo Labs Zed2

Wheeled robot

-

AirsBot2

Robotic arm and gripper

- Elephant Robotics mycobot 630

- Elephant Robotics pro adaptive gripper

Software on Jetson Orin AGX

- Jetpack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- python 3.10

- pytorch 2.3.0

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and accurately determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both global and local planners. Subsequently, the path planner provides velocity and twist commands to the base controller, which ultimately produces control signals to follow the planned path.

Grasping Software

The grasping software pipeline operates as follows: GroundingDINO receives an image and object name, outputting the object’s bounding box within the image. SAM utilizes this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce potential gripper poses for all objects in the scene. The object mask filters these poses to identify the optimal grasp for the target object.

Modules and Folders

- airship_chat: the voice user interface module, translating spoken language into text-based instructions

- airship_grasping: the grasping module

- airship_interface: defines topics and services messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM based task planner module

- airship_object: the senamic map module

- airship_description: contains the urdf file, defining the external parameter of robot links.

- docs: system setup on Orin without docker

- script: scripts for installing ros and docker

System Setup

If you don’t want to use docker, please refer to the setup instruction in “./docs/README.md”.

System setup on Nvidia Orin with Docker

-

Log in to your Nvidia Orin

-

Download AIRSHIP package from GitLab

```bash

${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.

Here we take $HOME/airship as an example.

DIR_AIRSHIP=$HOME/airship mkdir -p ${DIR_AIRSHIP}/src

File truncated at 100 lines see the full file

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robotic builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP is capable of understanding natural language instructions and executing navigation and grasping tasks based on those instructions. The current AIRSHIP robot form factor features a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. However, AIRSHIP is rapidly evolving, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisits

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereo Labs Zed2

Wheeled robot

-

AirsBot2

Robotic arm and gripper

- Elephant Robotics mycobot 630

- Elephant Robotics pro adaptive gripper

Software on Jetson Orin AGX

- Jetpack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- python 3.10

- pytorch 2.3.0

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and accurately determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both global and local planners. Subsequently, the path planner provides velocity and twist commands to the base controller, which ultimately produces control signals to follow the planned path.

Grasping Software

The grasping software pipeline operates as follows: GroundingDINO receives an image and object name, outputting the object’s bounding box within the image. SAM utilizes this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce potential gripper poses for all objects in the scene. The object mask filters these poses to identify the optimal grasp for the target object.

Modules and Folders

- airship_chat: the voice user interface module, translating spoken language into text-based instructions

- airship_grasping: the grasping module

- airship_interface: defines topics and services messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM based task planner module

- airship_object: the senamic map module

- airship_description: contains the urdf file, defining the external parameter of robot links.

- docs: system setup on Orin without docker

- script: scripts for installing ros and docker

System Setup

If you don’t want to use docker, please refer to the setup instruction in “./docs/README.md”.

System setup on Nvidia Orin with Docker

-

Log in to your Nvidia Orin

-

Download AIRSHIP package from GitLab

```bash

${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.

Here we take $HOME/airship as an example.

DIR_AIRSHIP=$HOME/airship mkdir -p ${DIR_AIRSHIP}/src

File truncated at 100 lines see the full file

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robot builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP can understand natural language instructions and execute navigation and grasping tasks based on them. The current AIRSHIP robot has a hybrid form factor consisting of a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. AIRSHIP is evolving rapidly, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design that incorporates large-model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisites

Computing platform

- Nvidia Jetson AGX Orin

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereolabs ZED 2

Wheeled robot

- AirsBot2

Robotic arm and gripper

- Elephant Robotics myCobot 630

- Elephant Robotics Pro Adaptive Gripper

Software on Jetson AGX Orin

- JetPack SDK 6.0

- Ubuntu 22.04

- ROS 2 Humble

- Python 3.10

- PyTorch 2.3.0
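A quick way to confirm that a Jetson matches the Python and PyTorch versions listed above is a small pre-flight script such as the following. It is a hypothetical helper, not part of AIRSHIP: it only compares the running interpreter and, if installed, `torch.__version__` against the documented versions, and it tolerates local build suffixes (for example `a0+...`) that custom PyTorch builds often carry.

```python
# Hypothetical pre-flight check (not part of AIRSHIP): compares the running
# interpreter and, if installed, PyTorch against the documented versions.
import importlib.util
import sys

EXPECTED_PYTHON = (3, 10)  # "Python 3.10" in the prerequisites
EXPECTED_TORCH = "2.3"     # "PyTorch 2.3.0"; any 2.3.x build is accepted


def python_ok(version_info=sys.version_info) -> bool:
    """True if the interpreter's major.minor version matches 3.10."""
    return tuple(version_info[:2]) == EXPECTED_PYTHON


def torch_ok(version: str) -> bool:
    """True for strings such as '2.3.0' or '2.3.0a0+abc123' (major.minor == 2.3)."""
    release = version.split("+")[0]  # drop any local build suffix
    major_minor = ".".join(release.split(".")[:2])
    return major_minor == EXPECTED_TORCH


def find_problems() -> list:
    """Return a human-readable list of version mismatches (empty if all match)."""
    problems = []
    if not python_ok():
        problems.append(f"Python {sys.version.split()[0]} found, expected 3.10")
    if importlib.util.find_spec("torch") is None:
        problems.append("PyTorch is not installed")
    else:
        import torch  # imported lazily so the check still runs without PyTorch

        if not torch_ok(torch.__version__):
            problems.append(f"PyTorch {torch.__version__} found, expected 2.3.x")
    return problems


if __name__ == "__main__":
    for problem in find_problems():
        print("WARNING:", problem)
```

Running it with the system `python3` prints nothing when both versions match, and one warning line per mismatch otherwise.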

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite comprising a LiDAR, an IMU, and two RGBD cameras (one for navigation and one for grasping). Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.
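The hand-off from the LLM planner to the navigation and grasping stacks can be sketched as follows. This is a minimal illustration under two assumptions: that the LLM is prompted to return a JSON list of `{"action", "target"}` steps, and that the semantic map is a lookup from object or place names to map-frame poses. The names `SEMANTIC_MAP`, `NavigateGoal`, `GraspGoal` and `ground_plan`, and the coordinates, are hypothetical and are not taken from the `airship_planner` or `airship_object` packages.

```python
import json
from dataclasses import dataclass

# Hypothetical semantic map: object/place name -> (x, y, yaw) in the map frame.
SEMANTIC_MAP = {
    "kitchen table": (2.0, 1.5, 0.0),
    "shelf": (-1.0, 3.2, 1.57),
}


@dataclass
class NavigateGoal:
    target: str
    pose: tuple  # (x, y, yaw) looked up from the semantic map


@dataclass
class GraspGoal:
    object_name: str  # passed to the perception/grasping pipeline as text


def ground_plan(llm_output: str, semantic_map: dict) -> list:
    """Convert an LLM plan (assumed JSON list of {"action", "target"}) into goals."""
    goals = []
    for step in json.loads(llm_output):
        action, target = step["action"], step["target"]
        if action == "navigate":
            # The semantic map bridges a named goal and a metric map location.
            if target not in semantic_map:
                raise KeyError(f"{target!r} is not in the semantic map")
            goals.append(NavigateGoal(target, semantic_map[target]))
        elif action == "grasp":
            goals.append(GraspGoal(target))
        else:
            raise ValueError(f"unsupported action: {action!r}")
    return goals


plan = '[{"action": "navigate", "target": "kitchen table"}, {"action": "grasp", "target": "cup"}]'
for goal in ground_plan(plan, SEMANTIC_MAP):
    print(goal)
```

Keeping grasp targets as plain text, rather than resolving them to coordinates, mirrors the division described above: the vision foundation model, not the map, locates the object once the robot has arrived.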

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both a global and a local planner. The path planner then sends velocity (twist) commands to the base controller, which generates the control signals needed to follow the planned path.
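The paragraph above does not say which global and local planners AIRSHIP uses. As a generic sketch (an assumption, not AIRSHIP’s code), the global planner below runs A* on a 2D occupancy grid, and a proportional controller stands in for the local planner, turning the next waypoint into a linear/angular velocity pair of the kind carried by a ROS 2 `geometry_msgs/msg/Twist` message. The function names, gains and limits are hypothetical, and converting grid cells to metres (map resolution and origin) is left out.

```python
import heapq
import math


def astar(grid, start, goal):
    """4-connected A* on an occupancy grid (0 = free, 1 = occupied).

    Returns the list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk the parent links back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


def follow(pose, waypoint, k_lin=0.5, k_ang=1.5, max_lin=0.5, max_ang=1.0):
    """Proportional controller: (x, y, yaw) pose -> (linear_x, angular_z)."""
    x, y, yaw = pose
    dx, dy = waypoint[0] - x, waypoint[1] - y
    err = math.atan2(dy, dx) - yaw
    err = math.atan2(math.sin(err), math.cos(err))  # wrap to [-pi, pi]
    # Drive forward only when roughly facing the waypoint.
    linear = min(k_lin * math.hypot(dx, dy), max_lin) * max(math.cos(err), 0.0)
    angular = max(-max_ang, min(max_ang, k_ang * err))
    return linear, angular
```

In a ROS 2 node, the pair returned by `follow` would typically be written to `twist.linear.x` and `twist.angular.z` before publishing to the base controller.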

Grasping Software

The grasping software pipeline operates as follows. GroundingDINO receives an image and an object name and outputs the object’s bounding box within the image. SAM (Segment Anything Model) uses this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce candidate gripper poses for all objects in the scene. The object mask then filters these poses to identify the best grasp for the target object.
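The final filtering step can be sketched as follows. This assumes each grasp candidate carries a quality score and the image pixel onto which its centre projects; the real GraspingNet output format is not described here, so `GraspCandidate` and `select_grasp` are hypothetical names. The GroundingDINO and SAM stages are represented only by the boolean mask they would produce.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class GraspCandidate:
    score: float  # grasp quality predicted by the network
    u: int        # image column of the grasp centre (assumed to be provided)
    v: int        # image row of the grasp centre
    # A real candidate would also carry a 6-DoF pose and a gripper width.


def select_grasp(
    candidates: Sequence[GraspCandidate], mask: List[List[bool]]
) -> Optional[GraspCandidate]:
    """Return the highest-scoring candidate whose centre lies on the object mask."""
    rows, cols = len(mask), len(mask[0])
    on_target = [
        g for g in candidates
        if 0 <= g.v < rows and 0 <= g.u < cols and mask[g.v][g.u]
    ]
    # max(..., default=None) returns None when no grasp lands on the target.
    return max(on_target, key=lambda g: g.score, default=None)
```

Testing only the projected centre is a simplification; an implementation working on point clouds might instead check how many of a grasp’s contact points fall on masked pixels.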

Modules and Folders

- airship_chat: the voice user interface module, which translates spoken language into text-based instructions

- airship_grasp: the grasping module

- airship_interface: defines the topic and service messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM-based task planner module

- airship_object: the semantic map module

- airship_description: contains the URDF file, which defines the extrinsic parameters of the robot links

- docs: system setup on Orin without Docker

- script: scripts for installing ROS and Docker

System Setup

If you prefer not to use Docker, refer to the setup instructions in “./docs/README.md”.

System setup on Nvidia Orin with Docker

- Log in to your Nvidia Orin

- Download the AIRSHIP package from GitLab

```bash
# ${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.
# Here we take $HOME/airship as an example.
DIR_AIRSHIP=$HOME/airship
mkdir -p "${DIR_AIRSHIP}/src"
```

File truncated at 100 lines see the full file

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robotic builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP is capable of understanding natural language instructions and executing navigation and grasping tasks based on those instructions. The current AIRSHIP robot form factor features a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. However, AIRSHIP is rapidly evolving, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisits

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereo Labs Zed2

Wheeled robot

-

AirsBot2

Robotic arm and gripper

- Elephant Robotics mycobot 630

- Elephant Robotics pro adaptive gripper

Software on Jetson Orin AGX

- Jetpack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- python 3.10

- pytorch 2.3.0

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and accurately determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both global and local planners. Subsequently, the path planner provides velocity and twist commands to the base controller, which ultimately produces control signals to follow the planned path.

Grasping Software

The grasping software pipeline operates as follows: GroundingDINO receives an image and object name, outputting the object’s bounding box within the image. SAM utilizes this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce potential gripper poses for all objects in the scene. The object mask filters these poses to identify the optimal grasp for the target object.

Modules and Folders

- airship_chat: the voice user interface module, translating spoken language into text-based instructions

- airship_grasping: the grasping module

- airship_interface: defines topics and services messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM based task planner module

- airship_object: the senamic map module

- airship_description: contains the urdf file, defining the external parameter of robot links.

- docs: system setup on Orin without docker

- script: scripts for installing ros and docker

System Setup

If you don’t want to use docker, please refer to the setup instruction in “./docs/README.md”.

System setup on Nvidia Orin with Docker

-

Log in to your Nvidia Orin

-

Download AIRSHIP package from GitLab

```bash

${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.

Here we take $HOME/airship as an example.

DIR_AIRSHIP=$HOME/airship mkdir -p ${DIR_AIRSHIP}/src

File truncated at 100 lines see the full file

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robotic builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP is capable of understanding natural language instructions and executing navigation and grasping tasks based on those instructions. The current AIRSHIP robot form factor features a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. However, AIRSHIP is rapidly evolving, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisits

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereo Labs Zed2

Wheeled robot

-

AirsBot2

Robotic arm and gripper

- Elephant Robotics mycobot 630

- Elephant Robotics pro adaptive gripper

Software on Jetson Orin AGX

- Jetpack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- python 3.10

- pytorch 2.3.0

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data to produce robust odometry and accurately determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both global and local planners. Subsequently, the path planner provides velocity and twist commands to the base controller, which ultimately produces control signals to follow the planned path.

Grasping Software

The grasping software pipeline operates as follows: GroundingDINO receives an image and object name, outputting the object’s bounding box within the image. SAM utilizes this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce potential gripper poses for all objects in the scene. The object mask filters these poses to identify the optimal grasp for the target object.

Modules and Folders

- airship_chat: the voice user interface module, translating spoken language into text-based instructions

- airship_grasping: the grasping module

- airship_interface: defines topics and services messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM based task planner module

- airship_object: the senamic map module

- airship_description: contains the urdf file, defining the external parameter of robot links.

- docs: system setup on Orin without docker

- script: scripts for installing ros and docker

System Setup

If you don’t want to use docker, please refer to the setup instruction in “./docs/README.md”.

System setup on Nvidia Orin with Docker

-

Log in to your Nvidia Orin

-

Download AIRSHIP package from GitLab

```bash

${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.

Here we take $HOME/airship as an example.

DIR_AIRSHIP=$HOME/airship mkdir -p ${DIR_AIRSHIP}/src

File truncated at 100 lines see the full file

CONTRIBUTING

|

airship repositoryairship_chat airship_description airship_grasp airship_interface airship_localization airship_navigation airship_object airship_perception airship_planner |

ROS Distro

|

Repository Summary

| Description | |

| Checkout URI | https://github.com/airs-cuhk/airship.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2025-07-18 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| airship_chat | 0.0.0 |

| airship_description | 0.0.0 |

| airship_grasp | 0.0.0 |

| airship_interface | 0.0.1 |

| airship_localization | 0.0.0 |

| airship_navigation | 0.0.0 |

| airship_object | 0.0.0 |

| airship_perception | 0.0.0 |

| airship_planner | 0.0.0 |

README

AIRSHIP: Empowering Intelligent Robots through Embodied AI, Stronger United, Yet Distinct.

Welcome to AIRSHIP GitLab page! AIRSHIP is an open-sourced embodied AI robotic software stack to empower various forms of intelligent robots.

Table of Contents

- Introduction

- Prerequisites

- Hardware Architecture

- Software Architecture

- Modules and Folders

- System Setup

- Quick Starts

- Acknowledgement

Introduction

While embodied AI holds immense potential for shaping the future economy, it presents significant challenges, particularly in the realm of computing. Achieving the necessary flexibility, efficiency, and scalability demands sophisticated computational resources, but the most pressing challenge remains software complexity. Complexity often leads to inflexibility.

Embodied AI systems must seamlessly integrate a wide array of functionalities, from environmental perception and physical interaction to the execution of complex tasks. This requires the harmonious operation of components such as sensor data analysis, advanced algorithmic processing, and precise actuator control. To support the diverse range of robotic forms and their specific tasks, a versatile and adaptable software stack is essential. However, creating a unified software architecture that ensures cohesive operation across these varied elements introduces substantial complexity, making it difficult to build a streamlined and efficient software ecosystem.

AIRSHIP has been developed to tackle the problem of software complexity in embodied AI. Its mission is to provide an easy-to-deploy software stack that empowers a wide variety of intelligent robots, thereby facilitating scalability and accelerating the commercialization of the embodied AI sector. AIRSHIP takes inspiration from Android, which played a crucial role in the mobile computing revolution by offering an open-source, flexible platform. Android enabled a wide range of device manufacturers to create smartphones and tablets at different price points, sparking rapid innovation and competition. This led to the widespread availability of affordable and powerful mobile devices. Android’s robust ecosystem, supported by a vast library of apps through the Google Play Store, allowed developers to reach a global audience, significantly advancing mobile technology adoption.

Similarly, AIRSHIP’s vision is to empower robot builders by providing an open-source embodied AI software stack. This platform enables the creation of truly intelligent robots capable of performing a variety of tasks that were previously unattainable at a reasonable cost. AIRSHIP’s motto, “Stronger United, Yet Distinct,” embodies the belief that true intelligence emerges through integration, but such integration should enhance, not constrain, the creative possibilities for robotic designers, allowing for distinct and innovative designs.

To realize this vision, AIRSHIP has been designed with flexibility, extensibility, and intelligence at its core. In this release, AIRSHIP offers both software and hardware specifications, enabling robotic builders to develop complete embodied AI systems for a range of scenarios, including home, retail, and warehouse environments. AIRSHIP is capable of understanding natural language instructions and executing navigation and grasping tasks based on those instructions. The current AIRSHIP robot form factor features a hybrid design that includes a wheeled chassis, a robotic arm, a suite of sensors, and an embedded computing system. However, AIRSHIP is rapidly evolving, with plans to support many more form factors in the near future. The software architecture follows a hierarchical and modular design, incorporating large model capabilities into traditional robot software stacks. This modularity allows developers to customize the AIRSHIP software and swap out modules to meet specific application requirements.

AIRSHIP is distinguished by the following characteristics:

- It is an integrated, open-source embodied robot system that provides detailed hardware specifications and software components.

- It features a modular and flexible software system, where modules can be adapted or replaced for different applications.

- While it is empowered by large models, AIRSHIP maintains computational efficiency, with most software modules running on an embedded computing system, ensuring the system remains performant and accessible for various use cases.

Prerequisits

Computing platform

- Nvidia Jetson Orin AGX

Sensors

- IMU: HiPNUC CH104 9-axis IMU

- RGBD camera for grasping: Intel RealSense D435

- LiDAR: RoboSense Helios 32

- RGBD camera for navigation: Stereo Labs Zed2

Wheeled robot

-

AirsBot2

Robotic arm and gripper

- Elephant Robotics mycobot 630

- Elephant Robotics pro adaptive gripper

Software on Jetson Orin AGX

- Jetpack SDK 6.0

- Ubuntu 22.04

- ROS Humble

- python 3.10

- pytorch 2.3.0

Hardware Architecture

The AIRSHIP hybrid robot comprises a wheeled chassis, a robotic arm with a compatible gripper, an Nvidia Orin computing board, and a sensor suite including a LiDAR, a camera, and an RGBD camera. Detailed hardware specifications are provided in this file. The following figure illustrates the AIRSHIP robot’s hardware architecture.

Software Architecture

AIRSHIP is designed for scenarios that can be decomposed into sequential navigation and grasping tasks. Leveraging state-of-the-art language and vision foundation models, AIRSHIP augments traditional robotics with embodied AI capabilities, including high-level human instruction comprehension and scene understanding. It integrates these foundation models into existing robotic navigation and grasping software stacks.

The software architecture, illustrated in the above figure, employs an LLM to interpret high-level human instructions and break them down into a series of basic navigation and grasping actions. Navigation is accomplished using a traditional robotic navigation stack encompassing mapping, localization, path planning, and chassis control. The semantic map translates semantic objects into map locations, bridging high-level navigation goals with low-level robotic actions. Grasping is achieved through a neural network that determines gripper pose from visual input, followed by traditional robotic arm control for execution. A vision foundation model performs zero-shot object segmentation, converting semantic grasping tasks into vision-based ones.

Navigation Software

The navigation software pipeline operates as follows. The localization module fuses LiDAR and IMU data into robust odometry and determines the robot’s position within a pre-built point cloud map. The path planning module generates collision-free trajectories using both a global and a local planner. The path planner then sends velocity (twist) commands to the base controller, which produces the control signals that make the robot follow the planned path.
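The README does not name the local planner or base controller that AIRSHIP uses. To illustrate how a path follower can turn a planned path into velocity commands for the base, the sketch below uses pure pursuit, a standard geometric path-tracking method swapped in here purely for illustration. It steers a differential-drive base along the circular arc that passes through a lookahead waypoint. `Pose2D` and `pure_pursuit_command` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    x: float    # meters, map frame
    y: float    # meters, map frame
    yaw: float  # heading in radians, map frame


def pure_pursuit_command(pose: Pose2D, lookahead: Tuple[float, float],
                         linear_speed: float) -> Tuple[float, float]:
    """Return (v, omega) that steers the robot onto the arc through `lookahead`."""
    dx = lookahead[0] - pose.x
    dy = lookahead[1] - pose.y
    # Rotate the offset into the robot frame (x forward, y to the left).
    local_x = math.cos(pose.yaw) * dx + math.sin(pose.yaw) * dy
    local_y = -math.sin(pose.yaw) * dx + math.cos(pose.yaw) * dy
    dist_sq = local_x ** 2 + local_y ** 2
    if dist_sq < 1e-9:
        return 0.0, 0.0  # already at the waypoint: stop
    # Pure pursuit: curvature of the arc through the lookahead point is 2*y / L^2.
    curvature = 2.0 * local_y / dist_sq
    return linear_speed, linear_speed * curvature
```

With the robot at the origin facing +x and a waypoint at (1, 1), `pure_pursuit_command(Pose2D(0.0, 0.0, 0.0), (1.0, 1.0), 0.5)` returns `(0.5, 0.5)`: the arc through that point has a curvature of 1 per meter, so the angular rate equals the linear speed. In a ROS 2 node, the pair would fill the `linear.x` and `angular.z` fields of a Twist message.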

Grasping Software

The grasping software pipeline operates as follows: GroundingDINO takes an image and an object name and outputs the object’s bounding box in the image. SAM uses this bounding box to generate a pixel-level object mask. GraspingNet processes the RGB and depth images to produce candidate gripper poses for all objects in the scene. The object mask then filters these poses to select the best grasp for the target object.
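The final filtering step can be illustrated with a short sketch. It assumes, hypothetically, that each candidate grasp is summarized by its center point in the camera frame plus a quality score, and that the camera follows the pinhole model with intrinsics `fx`, `fy`, `cx`, `cy`. A grasp is kept if its center projects onto a pixel inside the object mask, and the highest-scoring survivor wins. AIRSHIP's actual filter may test poses differently (for example, against the masked point cloud); `project` and `select_grasp` are illustrative names.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z) in the camera frame, z pointing forward


def project(point: Point3, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection to pixel (u, v); None for points behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return round(fx * x / z + cx), round(fy * y / z + cy)


def select_grasp(centers: Sequence[Point3], scores: Sequence[float],
                 mask: List[List[bool]], fx: float, fy: float,
                 cx: float, cy: float) -> Optional[int]:
    """Index of the best-scoring grasp whose center lands on the mask, else None."""
    height, width = len(mask), len(mask[0])
    best_index, best_score = None, float("-inf")
    for i, (center, score) in enumerate(zip(centers, scores)):
        pixel = project(center, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel  # u: column, v: row
        if not (0 <= u < width and 0 <= v < height):
            continue  # projects outside the image
        if mask[v][u] and score > best_score:
            best_index, best_score = i, score
    return best_index
```

Returning `None` when no candidate lands on the mask lets the caller re-capture an image or report failure instead of grasping a neighboring object.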

Modules and Folders

- airship_chat: the voice user interface module, translating spoken language into text-based instructions

- airship_grasp: the grasping module

- airship_interface: defines the topic and service messages used by each module

- airship_localization: the localization module

- airship_navigation: the planning and control module

- airship_perception: the object detection and segmentation module

- airship_planner: the LLM-based task planner module

- airship_object: the semantic map module

- airship_description: contains the URDF file, which defines the extrinsic parameters of the robot links

- docs: system setup on Orin without Docker

- script: scripts for installing ROS and Docker

System Setup

If you do not want to use Docker, refer to the setup instructions in “./docs/README.md”.

System setup on Nvidia Orin with Docker

- Log in to your Nvidia Orin

- Download the AIRSHIP package from GitLab

```bash
# ${DIR_AIRSHIP} is the directory you create for the AIRSHIP package.
# Here we take $HOME/airship as an example.
DIR_AIRSHIP=$HOME/airship
mkdir -p "${DIR_AIRSHIP}/src"
```



