|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

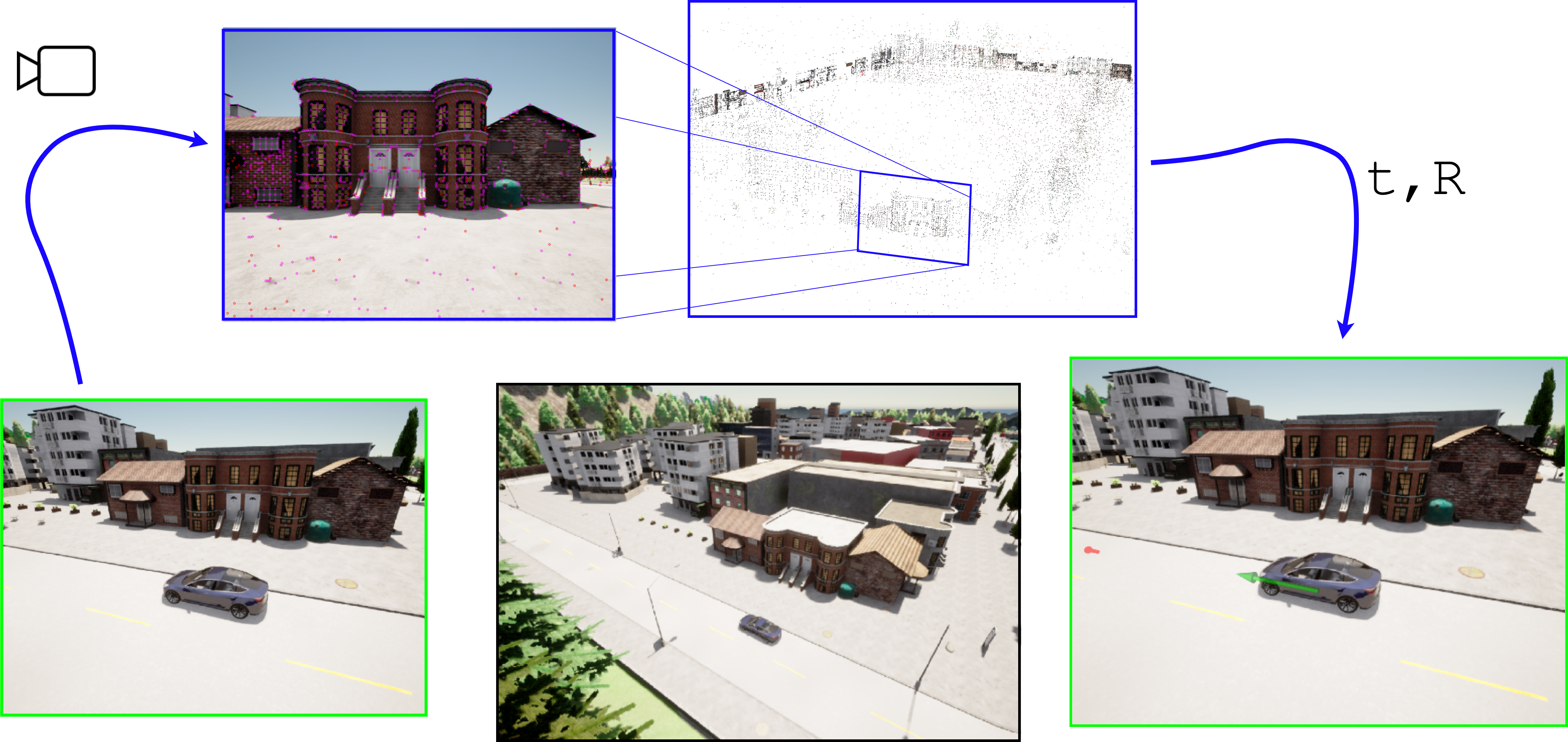

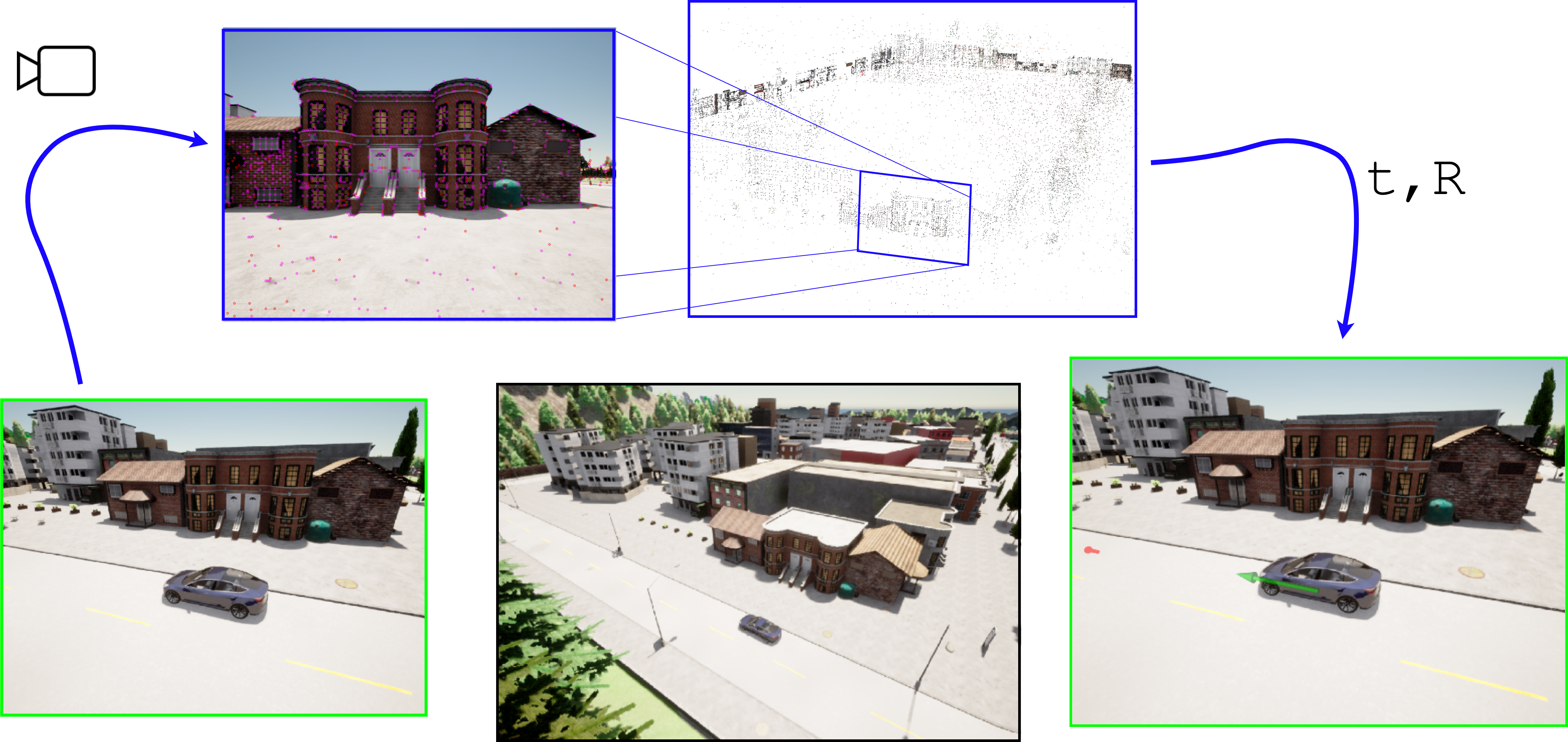

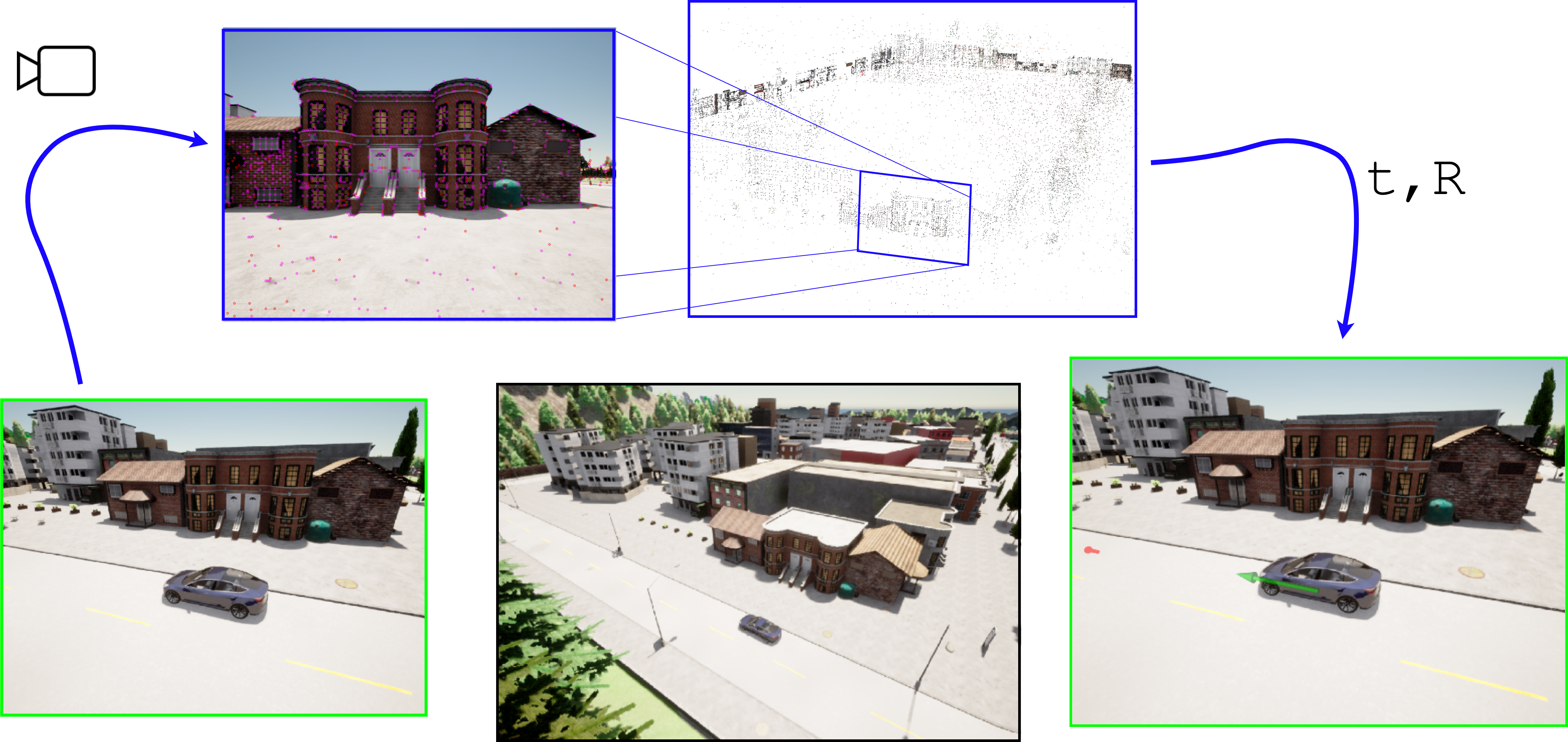

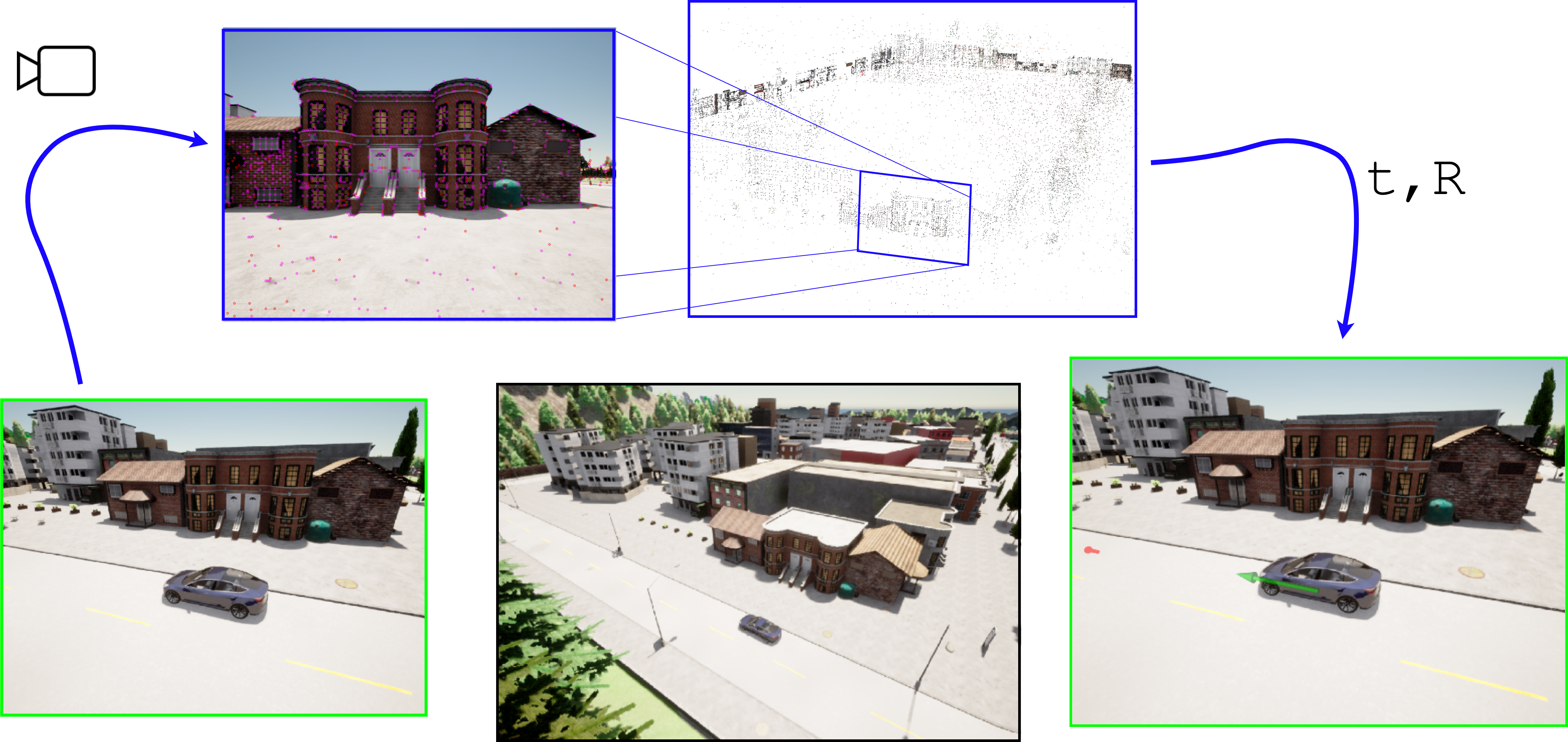

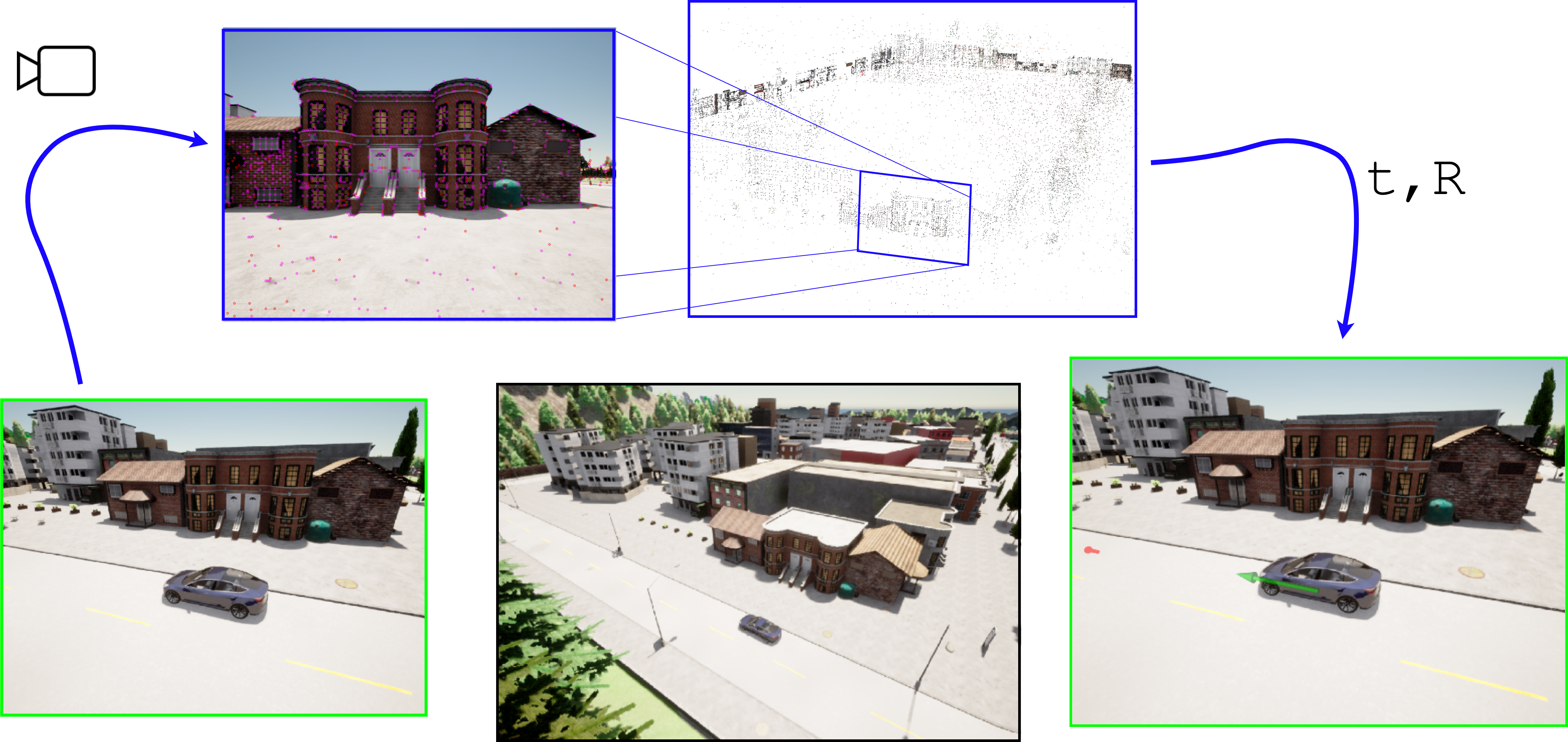

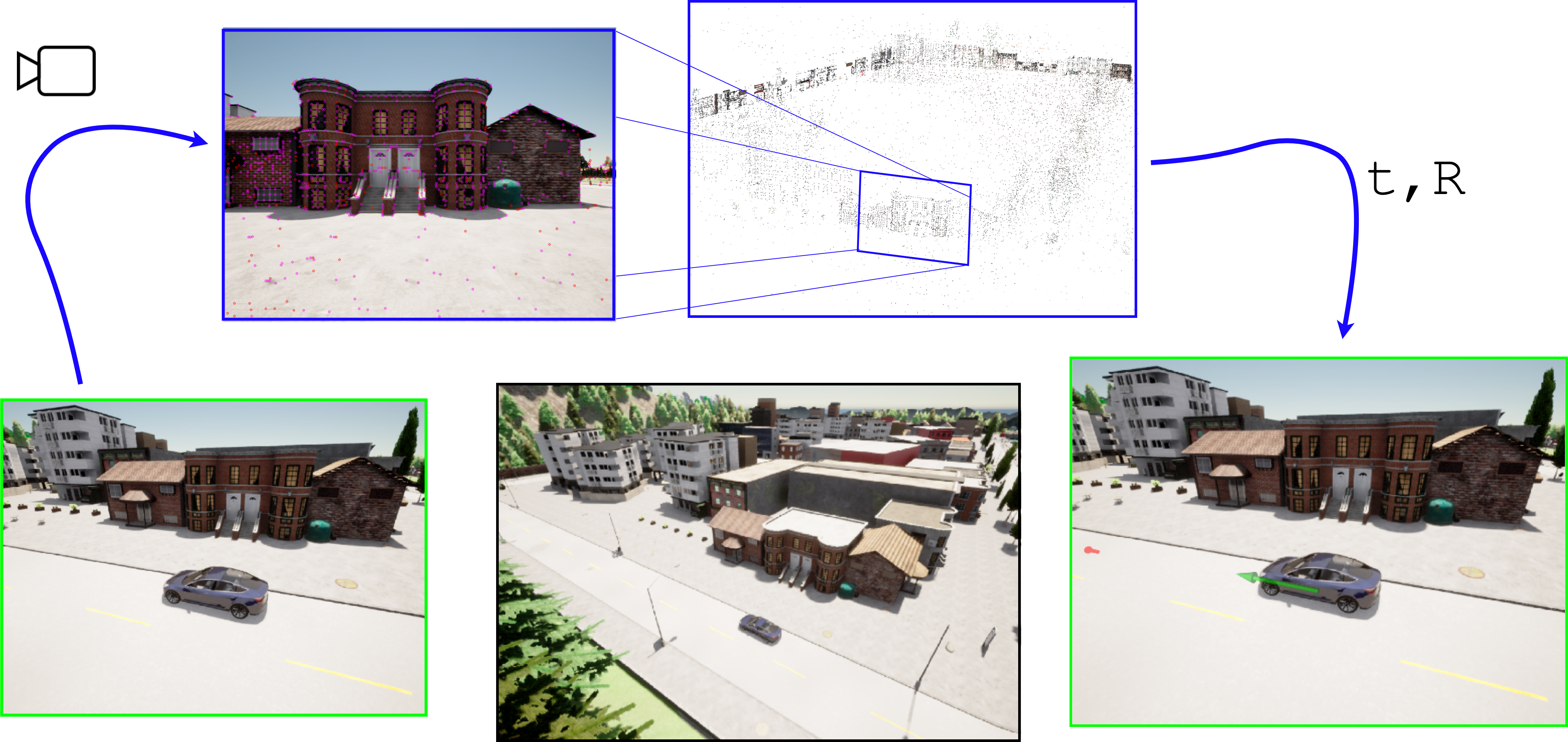

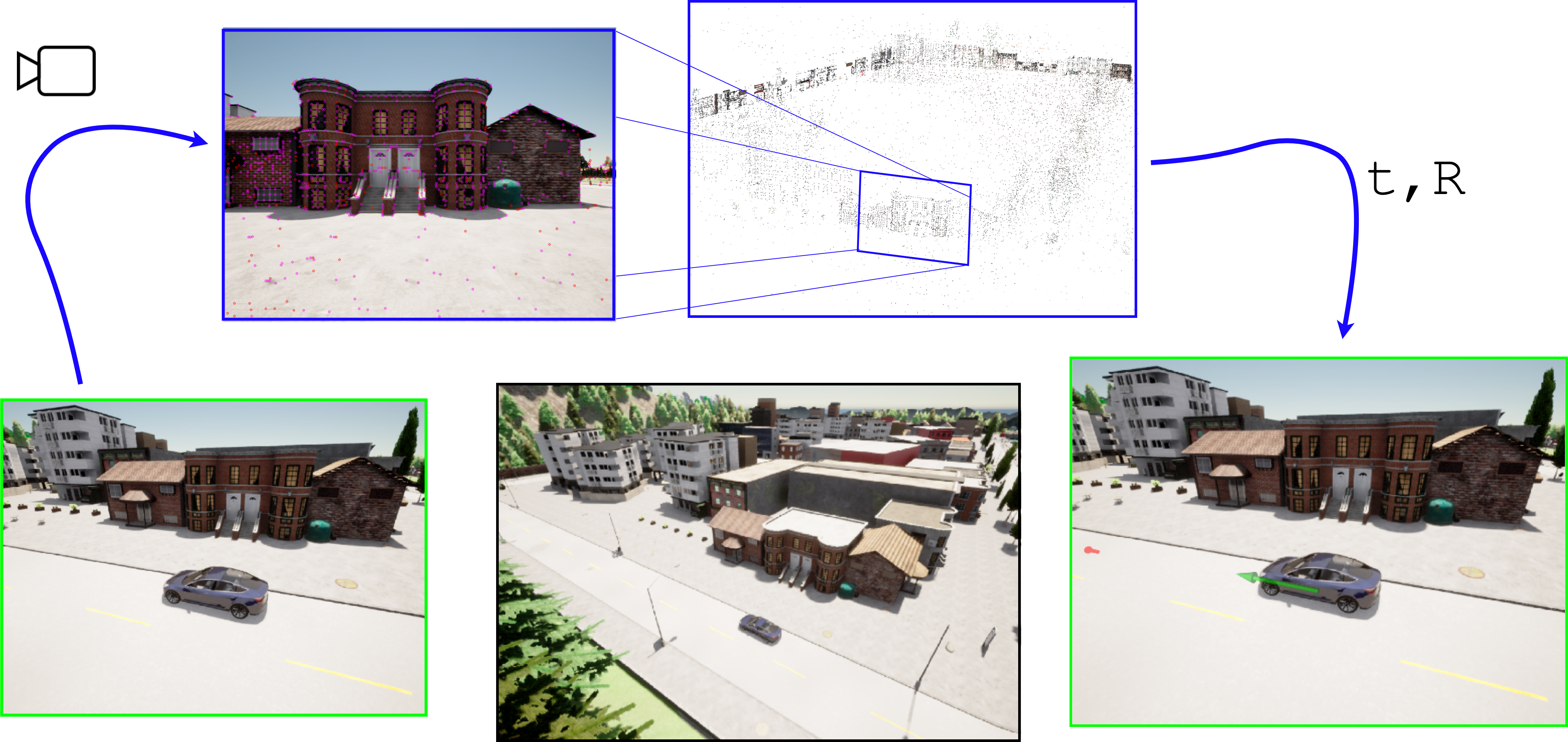

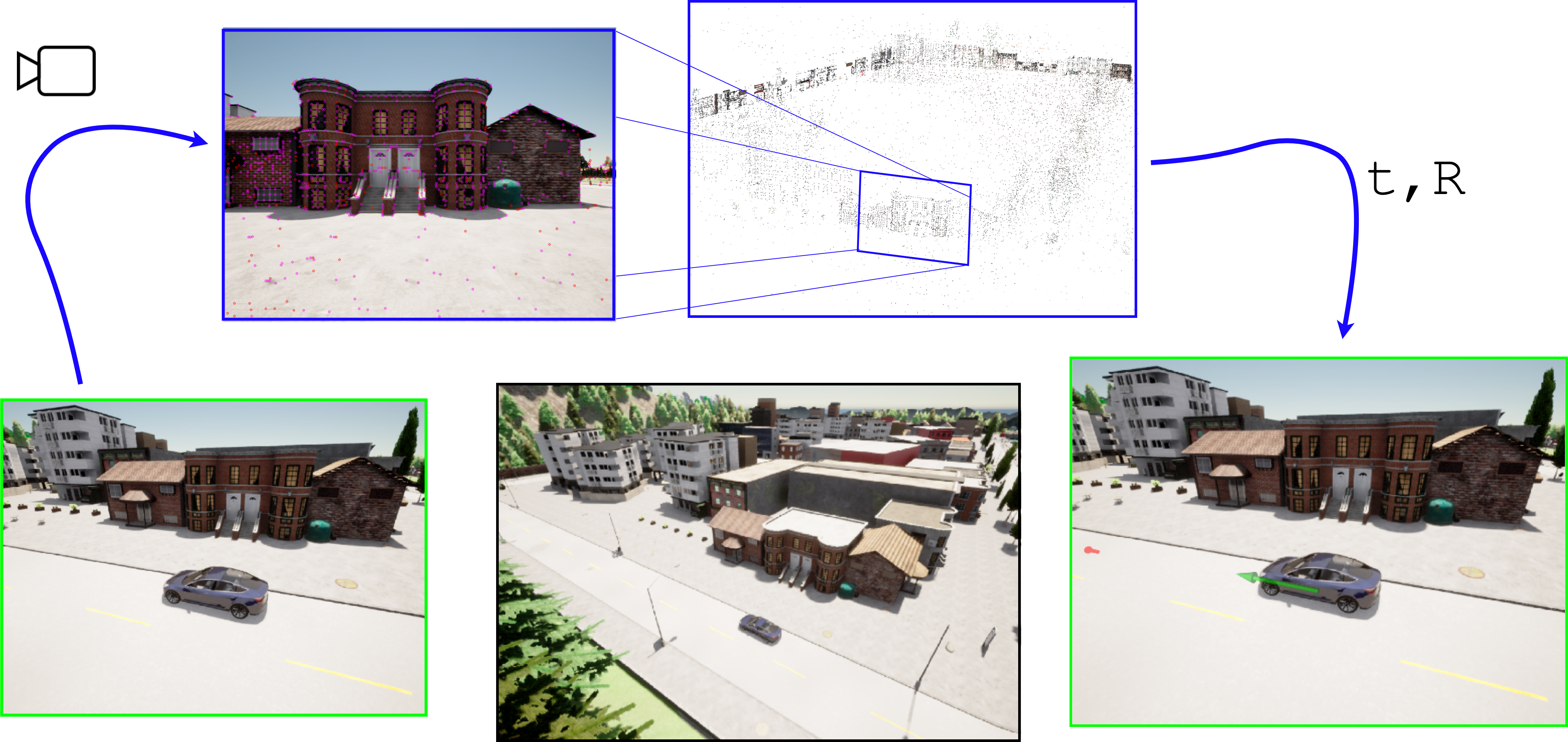

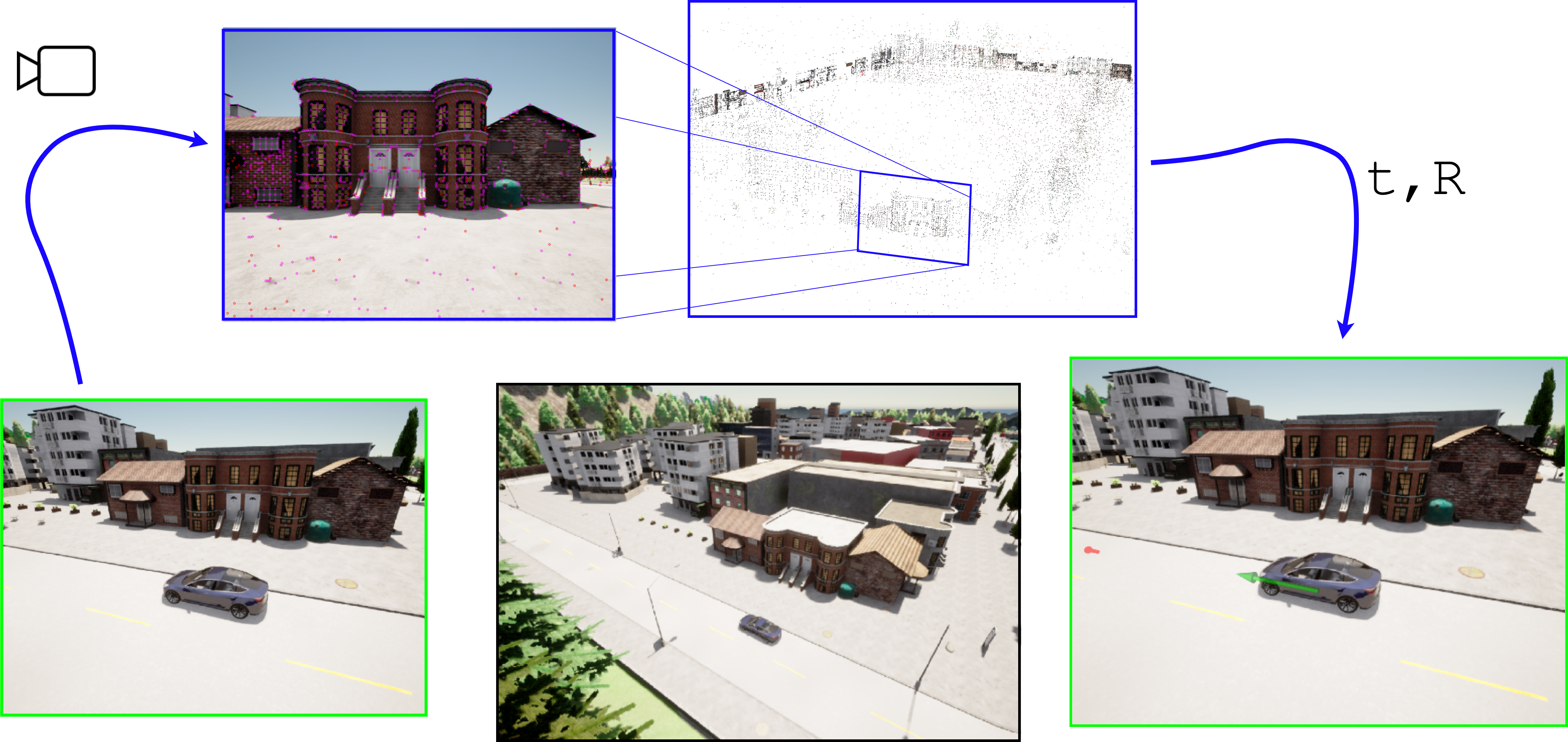

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.

Reproduce the paper experiments

### 1. Launch the environment In one terminal window, launch the Carla simulator: ```sh cd docker # Headless ./run-bash-carla.sh ``` In another terminal window, launch a container which contains the autonomous agent and scenario execution logic: File truncated at 100 lines [see the full file](https://github.com/lasuomela/carla_vloc_benchmark/tree/main/README.md)CONTRIBUTING

|

carla_vloc_benchmark repositorycarla_visual_navigation carla_visual_navigation_agent carla_visual_navigation_interfaces |

ROS Distro

|

Repository Summary

| Description | Official implementation of the WACV 2023 paper "Benchmarking Visual Localization for Autonomous Navigation". |

| Checkout URI | https://github.com/lasuomela/carla_vloc_benchmark.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2023-09-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| carla_visual_navigation | 0.0.0 |

| carla_visual_navigation_agent | 0.0.0 |

| carla_visual_navigation_interfaces | 0.0.0 |

README

</a>

</a>

Carla Visual localization benchmark

This is the official implementation of the paper “Benchmarking Visual Localization for Autonomous Navigation”.

The benchmark enables easy experimentation with different visual localization methods as part of a navigation stack. The platform enables investigating how various factors such as illumination, viewpoint, and weather changes affect visual localization and subsequent navigation performance. The benchmark is based on the Carla autonomous driving simulator and our ROS2 port of the Hloc visual localization toolbox.

Citing

If you find the benchmark useful in your research, please cite our work as:

@InProceedings{Suomela_2023_WACV,

author = {Suomela, Lauri and Kalliola, Jussi and Dag, Atakan and Edelman, Harry and Kämäräinen, Joni-Kristian},

title = {Benchmarking Visual Localization for Autonomous Navigation},

booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {January},

year = {2023},

pages = {2945-2955}

}

System requirements

- Docker

- Nvidia-docker

- Nvidia GPU with minimum of 12GB memory. Recommended Nvidia RTX3090

- 70GB disk space for Docker images

Installation

The code is tested on Ubuntu 20.04 with one Nvidia RTX3090. As everything runs inside docker containers, supporting platforms other than linux should only require modifying the build and run scripts in the docker folder.

Pull the repository:

git clone https://github.com/lasuomela/carla_vloc_benchmark/

git submodule update --init --recursive

We strongly recommend running the benchmark inside the provided docker images. Build the images:

cd docker

./build-carla.sh

./build-ros-bridge-scenario.sh

Next, validate that the environment is correctly set up. Launch the Carla simulator:

# With GUI

./run-carla.sh

In another terminal window, start the autonomous agent / scenario runner container:

cd docker

./run-ros-bridge-scenario.sh

Now, inside the container terminal:

cd /opt/visual_robot_localization/src/visual_robot_localization/utils

# Run SfM with the example images included with the visual_robot_localization package.

./do_SfM.sh

# Visualize the resulting model

./visualize_colmap.sh

# Test that the package can localize agains the model

launch_test /opt/visual_robot_localization/src/visual_robot_localization/test/visual_pose_estimator_test.launch.py

If all functions correctly, you are good to go.