Repository Summary

| Field | Value |
|---|---|
| Description | |
| Checkout URI | https://github.com/prbonn/simp.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2023-11-30 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |


Packages

| Name | Version |
|---|---|
| data_processing | 0.0.0 |
| nmcl_msgs | 0.0.0 |
| nmcl_ros | 0.0.0 |
| omni3d_ros | 0.0.0 |

README

SIMP: Constructing Metric-Semantic Maps Using Floor Plan Priors for Long-Term Indoor Localization

This repository contains the implementation of the following publication:

@inproceedings{zimmerman2023iros,
  author = {Zimmerman, Nicky and Sodano, Matteo and Marks, Elias and Behley, Jens and Stachniss, Cyrill},
  title = {{Constructing Metric-Semantic Maps Using Floor Plan Priors for Long-Term Indoor Localization}},
  booktitle = {IEEE/RSJ Intl.~Conf.~on Intelligent Robots and Systems (IROS)},
  year = {2023}
}

Abstract

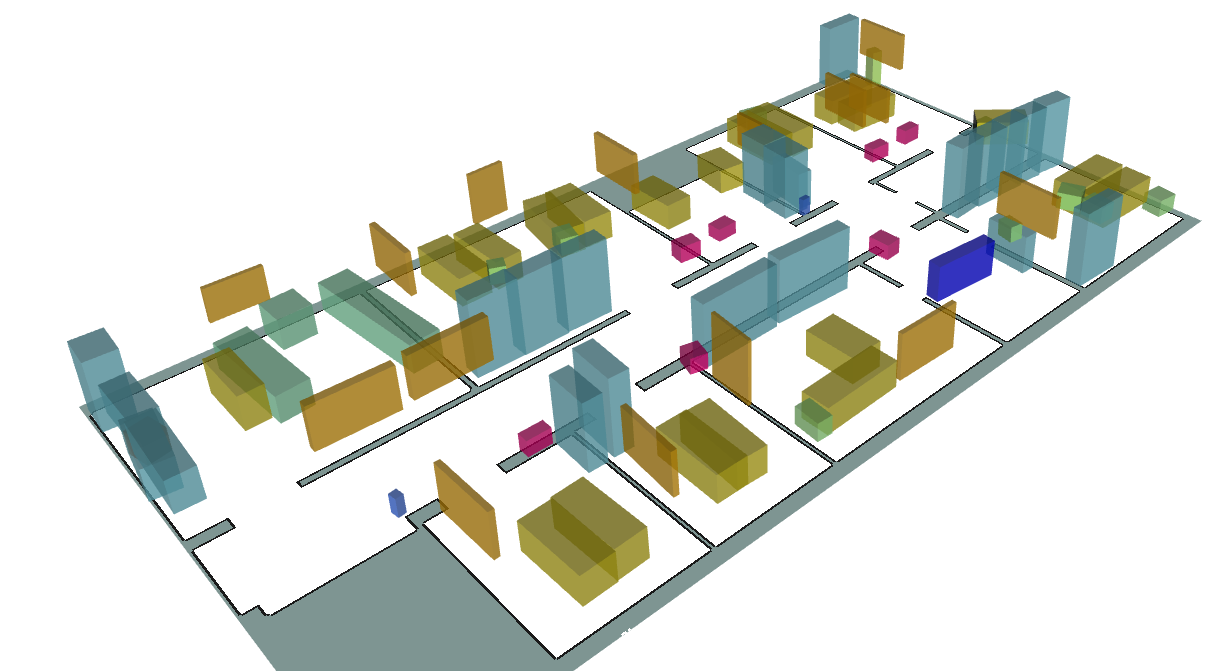

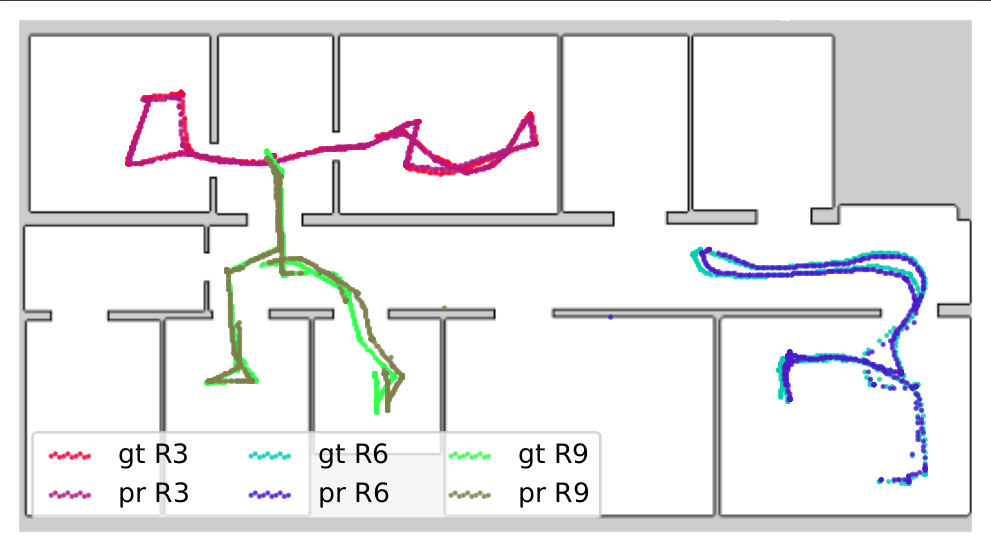

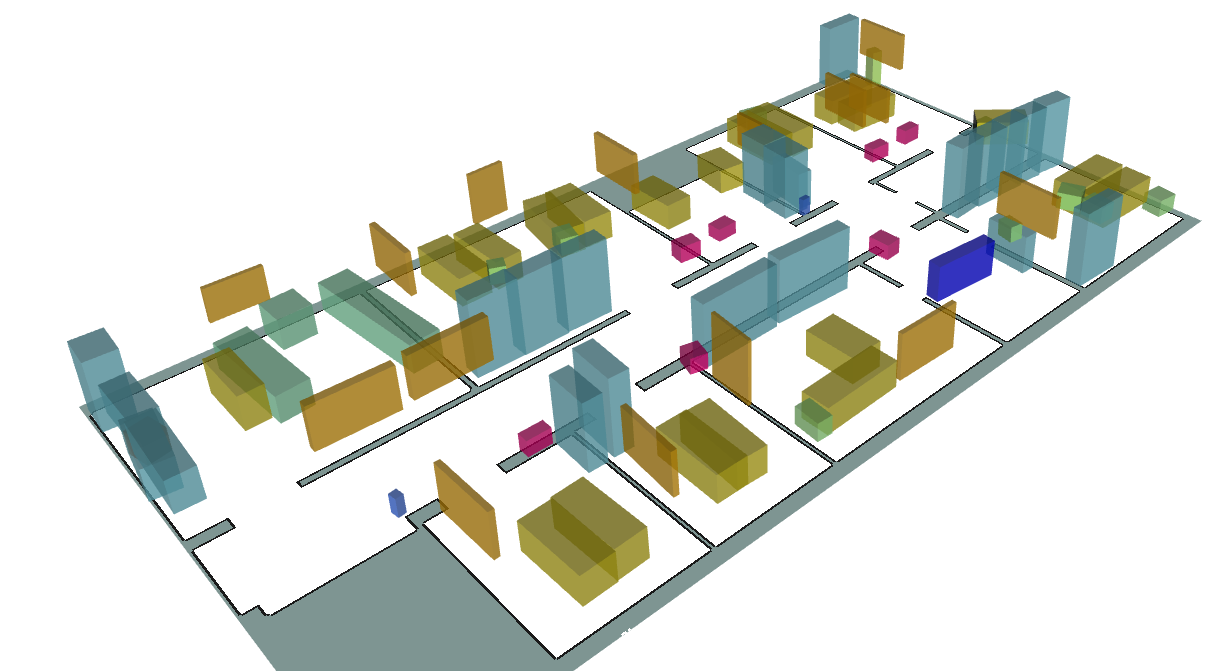

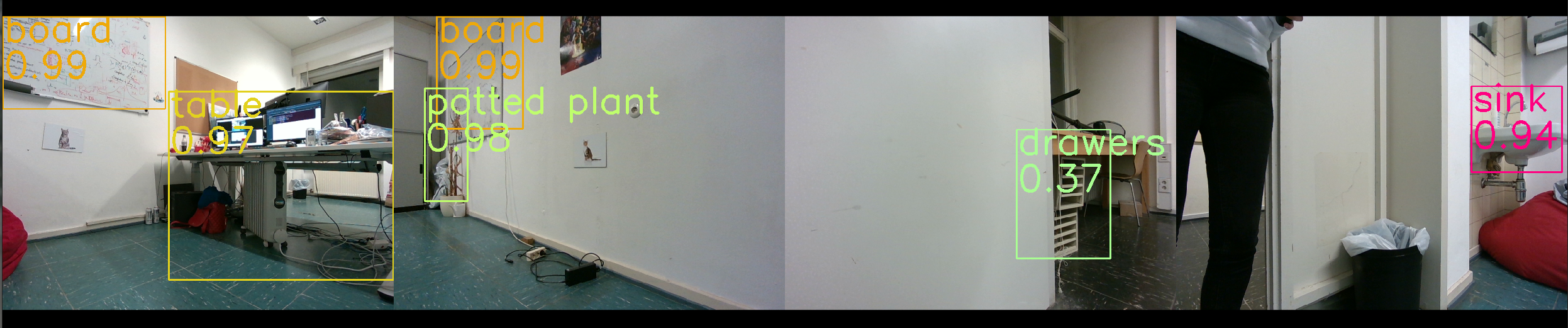

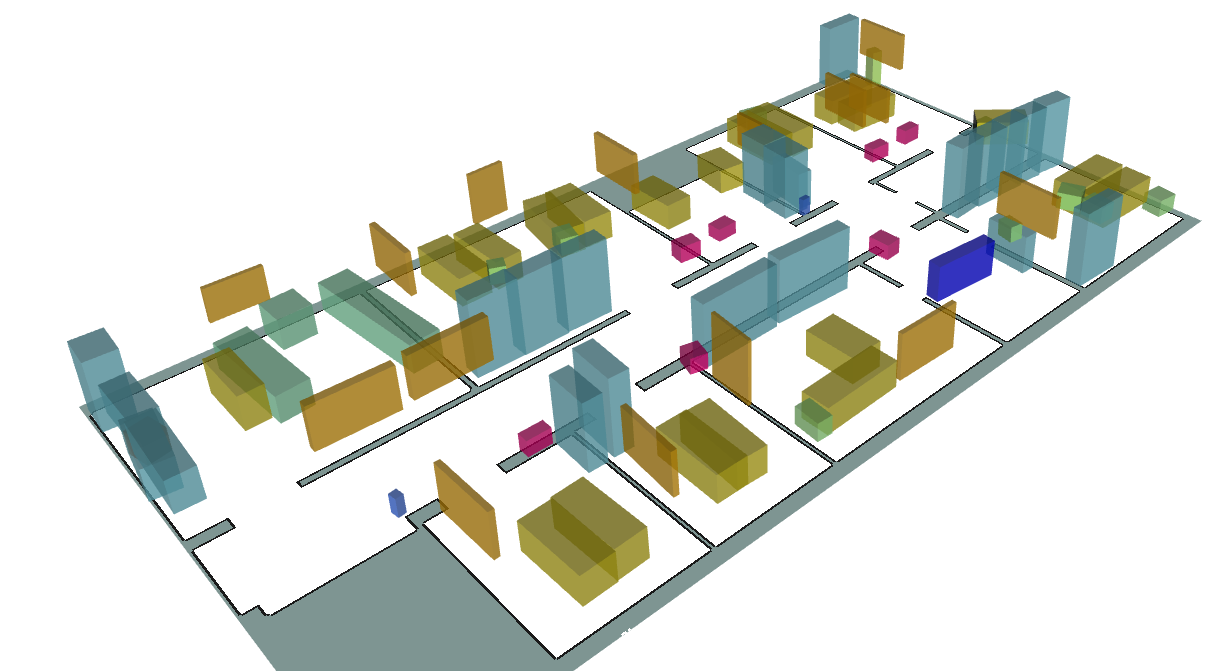

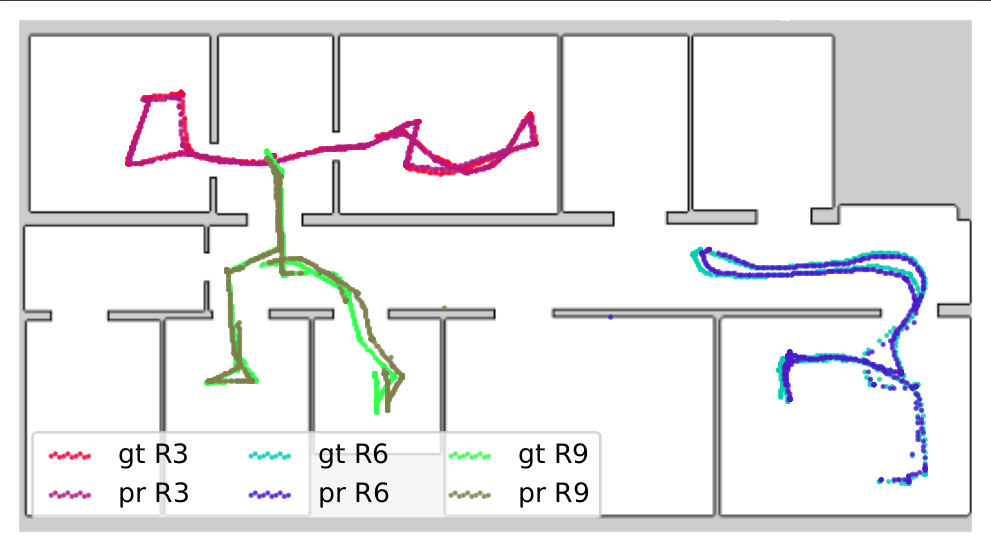

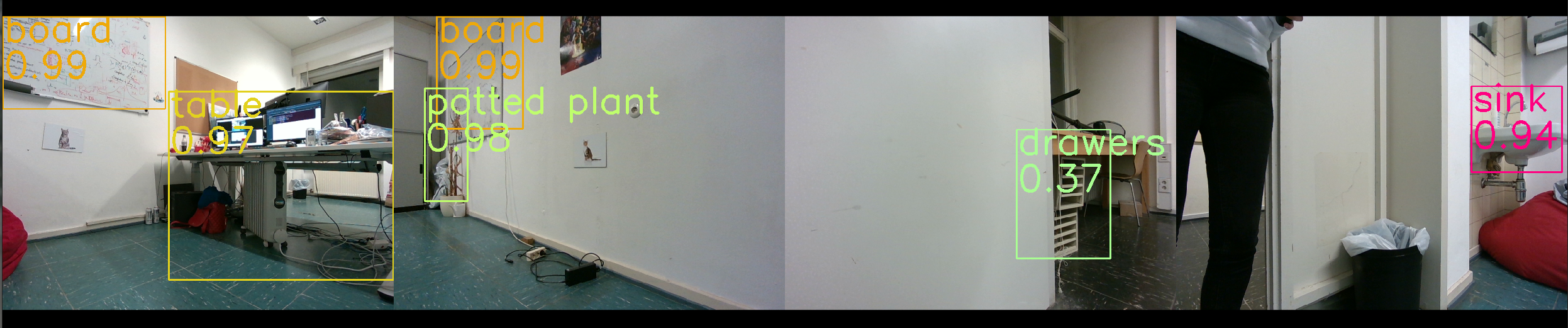

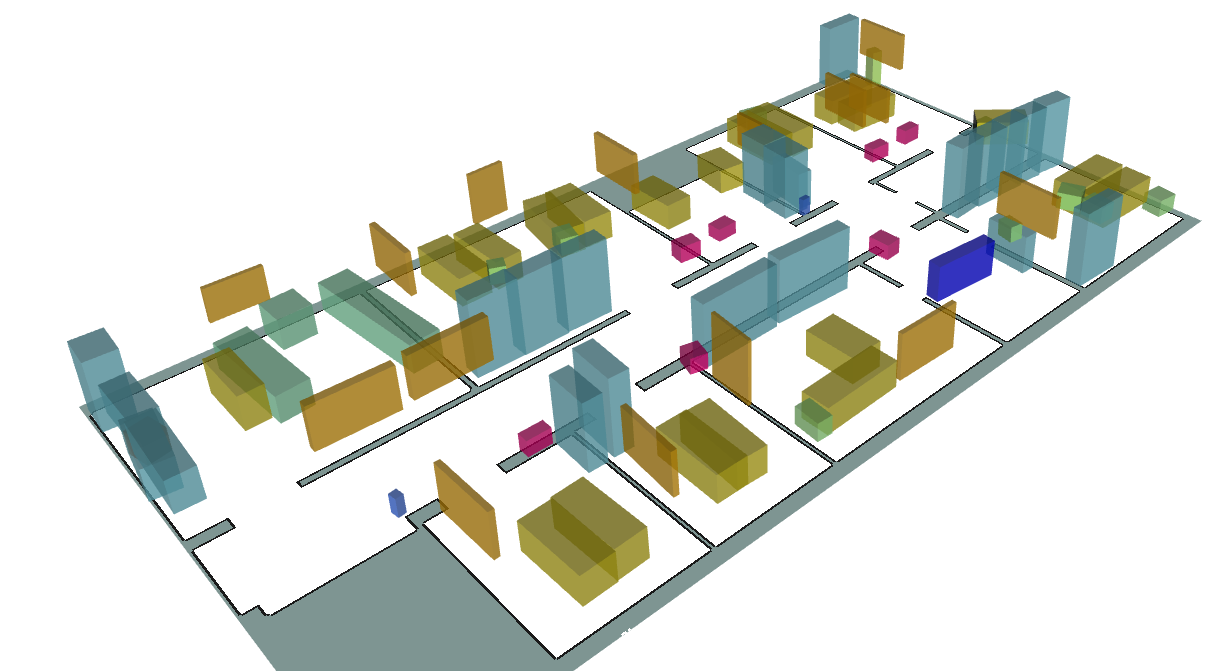

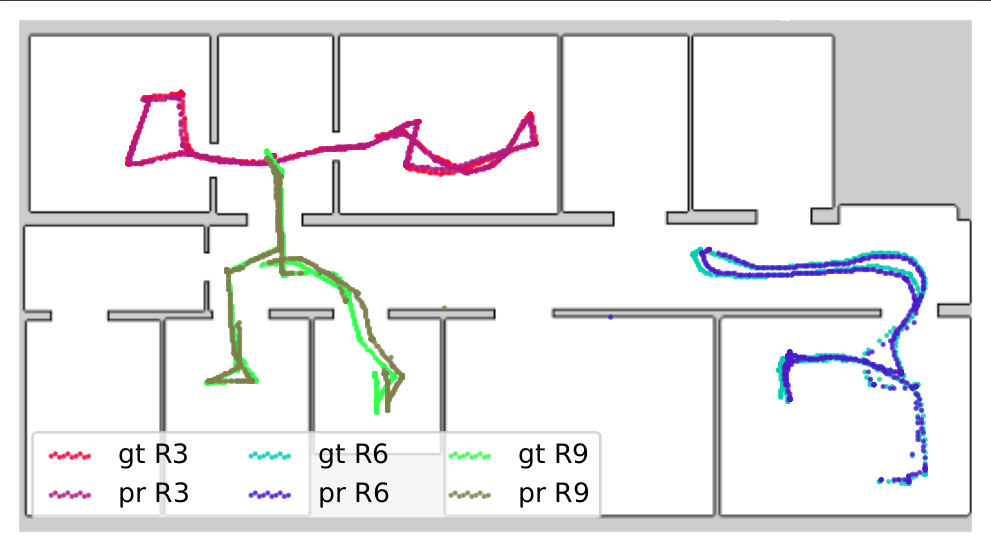

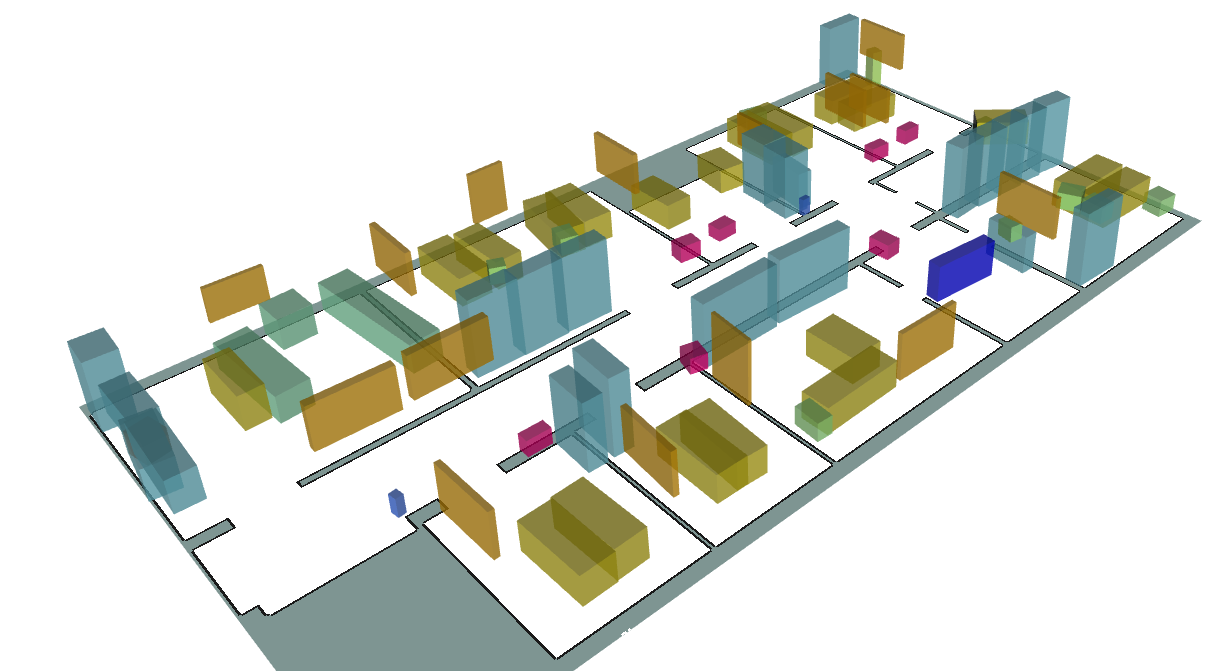

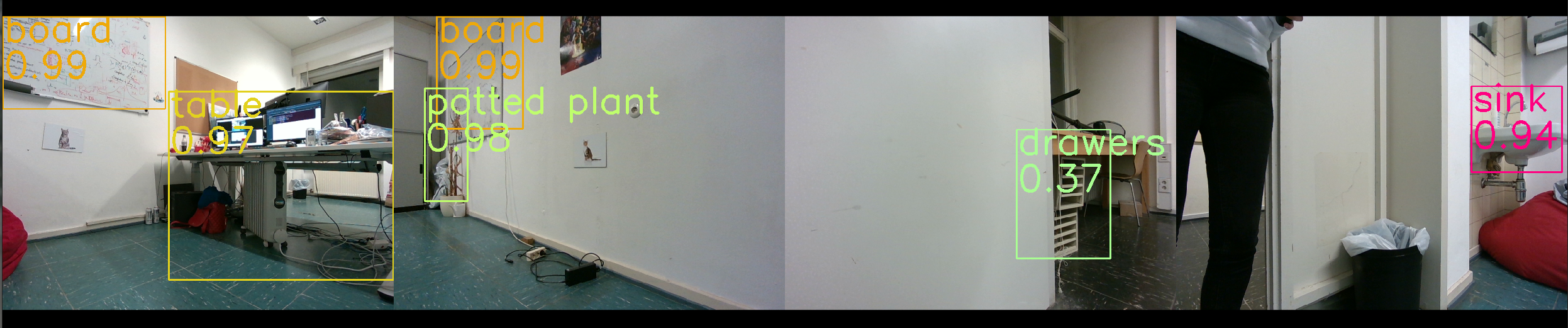

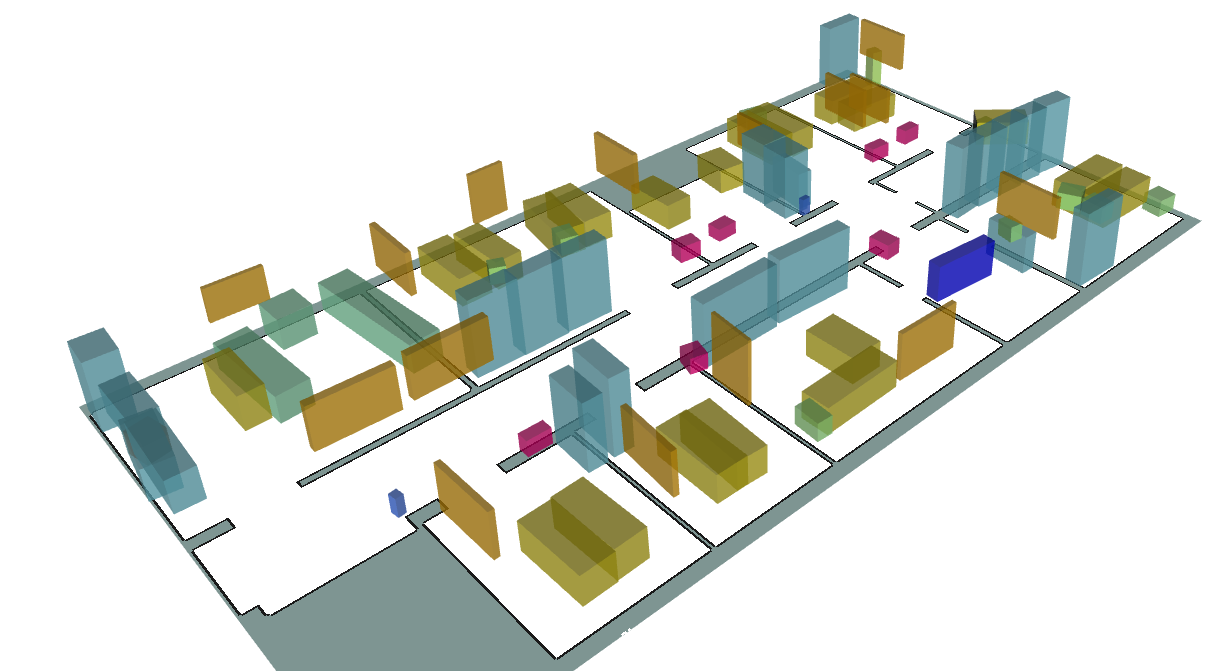

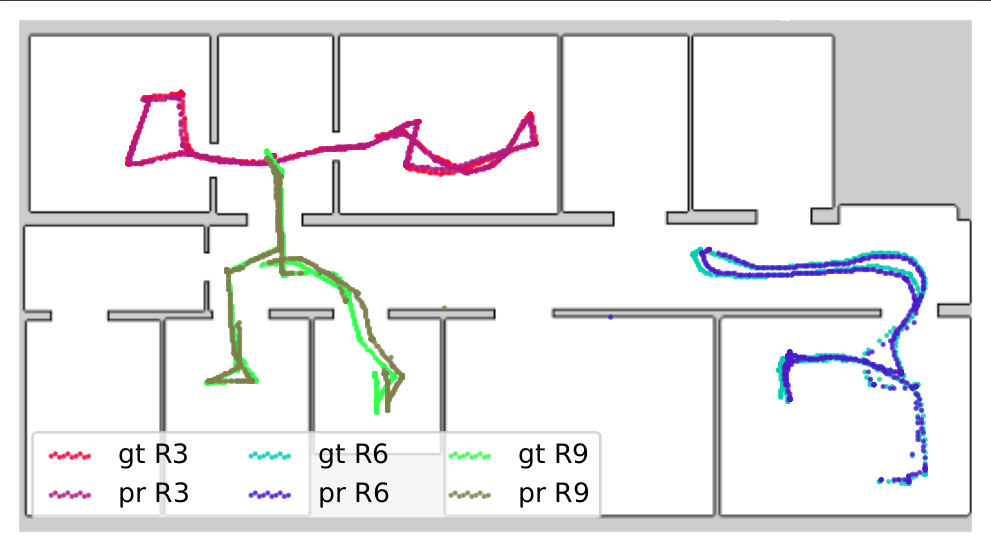

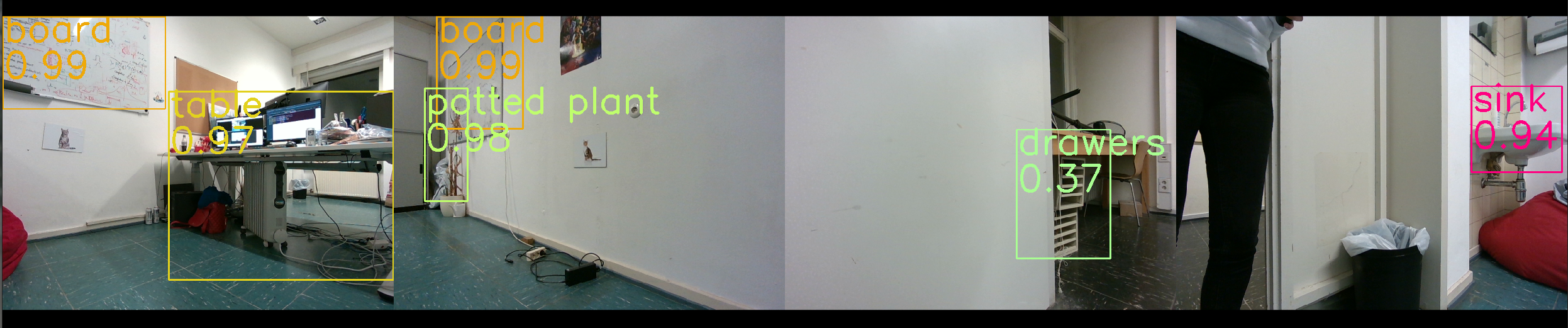

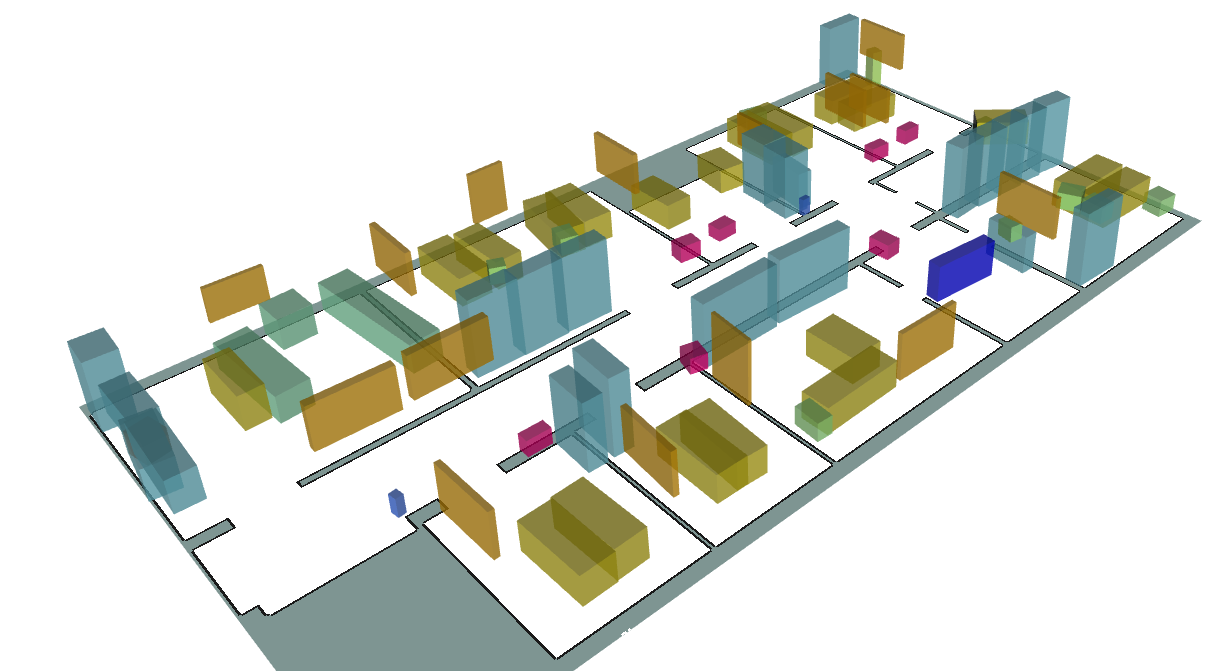

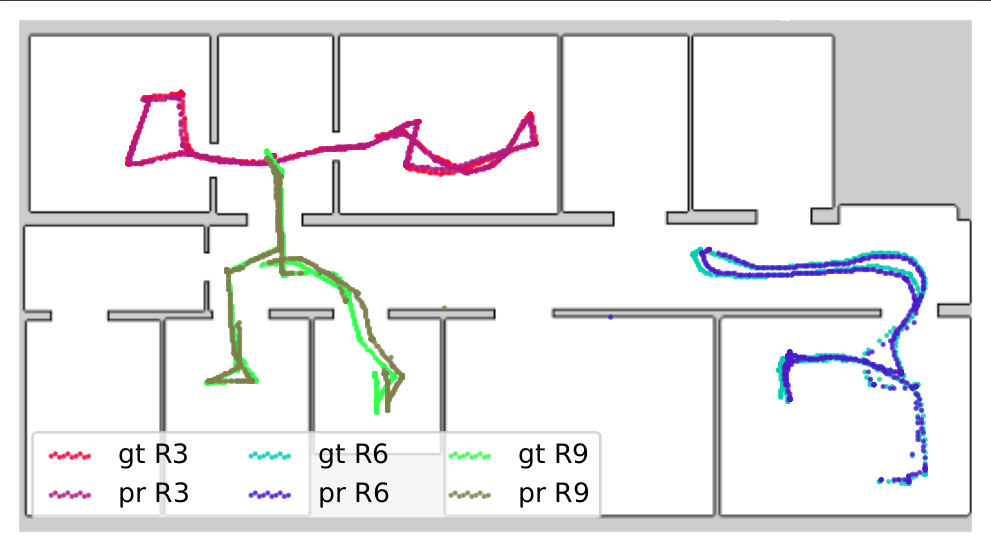

Object-based maps are relevant for scene understanding since they integrate geometric and semantic information of the environment, allowing autonomous robots to robustly localize and interact with objects. In this paper, we address the task of constructing a metric-semantic map for the purpose of long-term object-based localization. We exploit 3D object detections from monocular RGB frames both for the object-based map construction and for global localization in the constructed map. To tailor the approach to a target environment, we propose an efficient way of generating 3D annotations to fine-tune the 3D object detection model. We evaluate our map construction in an office building, and test our long-term localization approach on challenging sequences recorded in the same environment over nine months. The experiments suggest that our approach is suitable for constructing metric-semantic maps, and that our localization approach is robust to long-term changes. Both the mapping algorithm and the localization pipeline can run online on an onboard computer. We release an open-source C++/ROS implementation of our approach.

Results

Our live demos for both localization and mapping on the Dingo-O platform can be seen in the following video:

The Dingo-O carries an Intel NUC10i7FNK and an NVIDIA Jetson Xavier AGX, both running Ubuntu 20.04. Both mapping and localization can be executed onboard the Dingo.

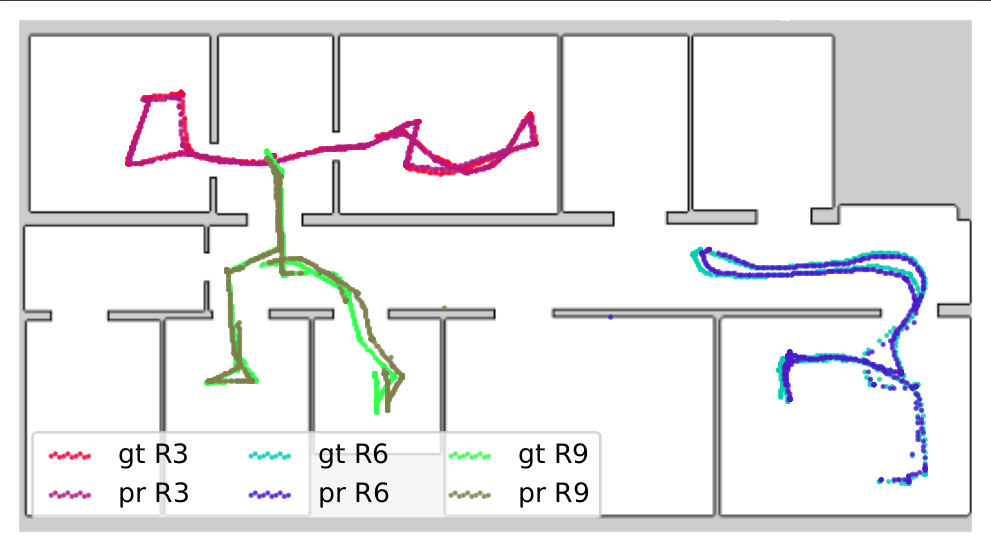

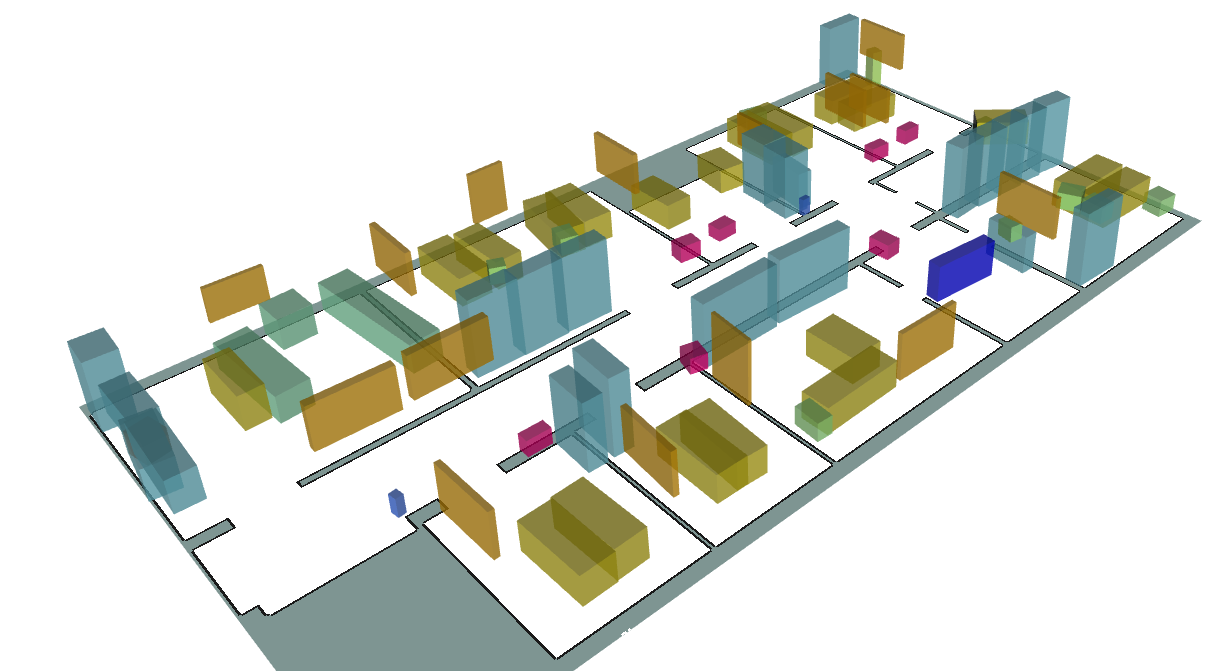

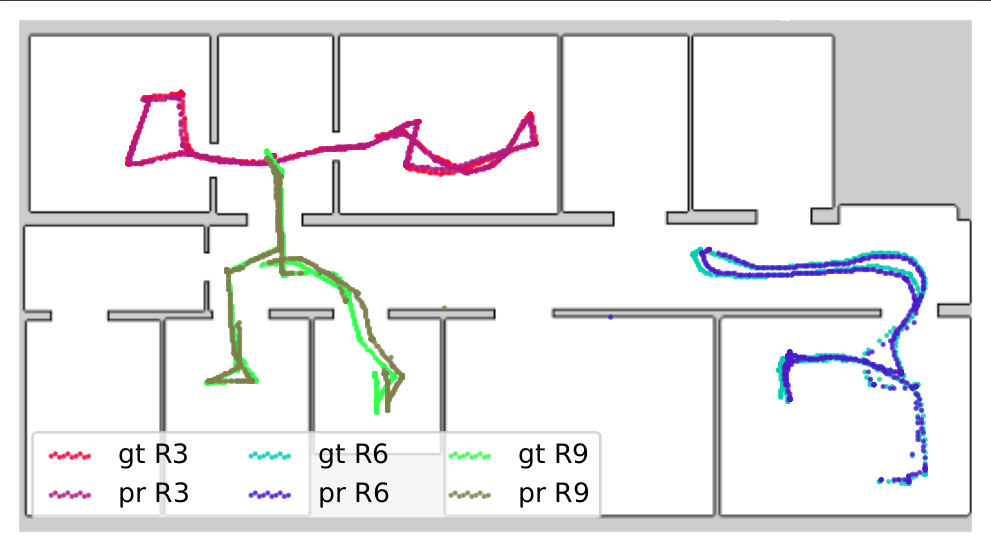

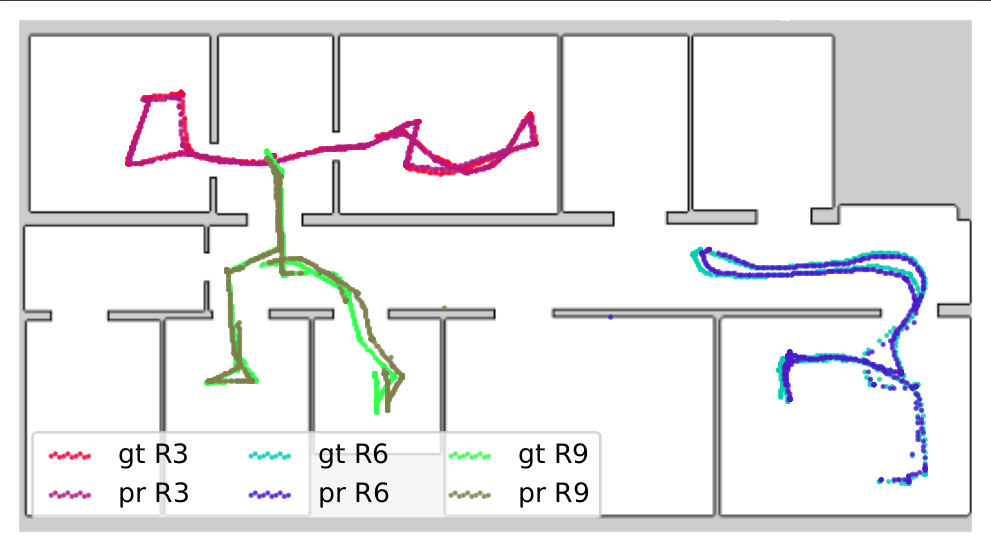

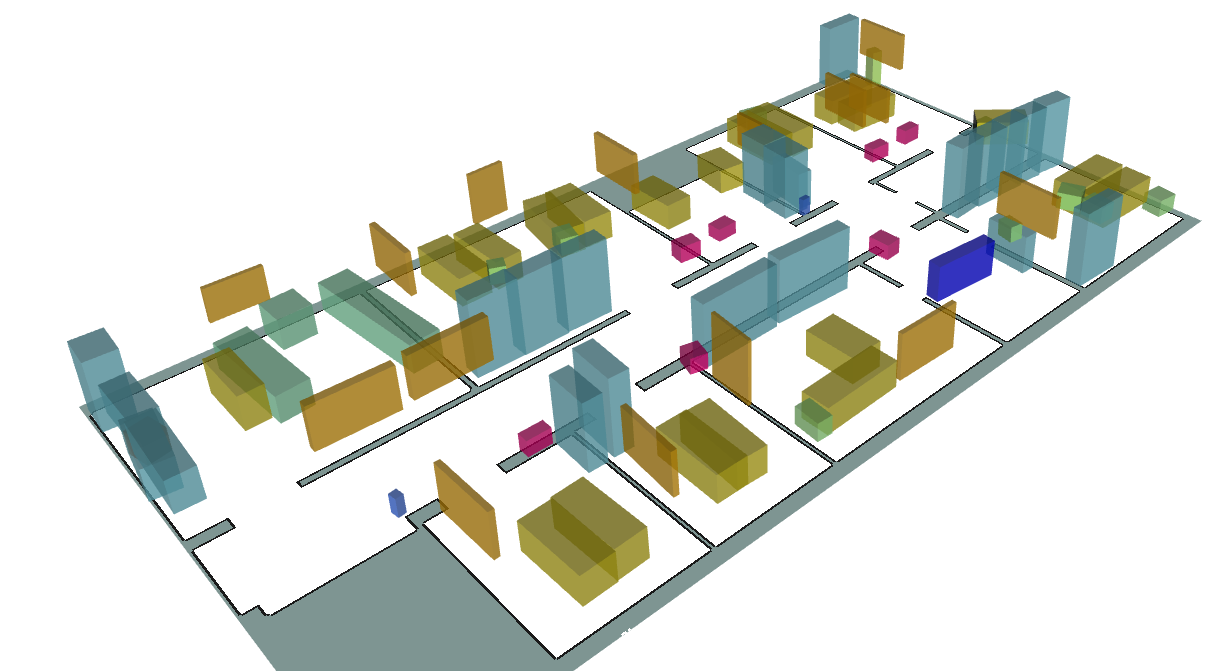

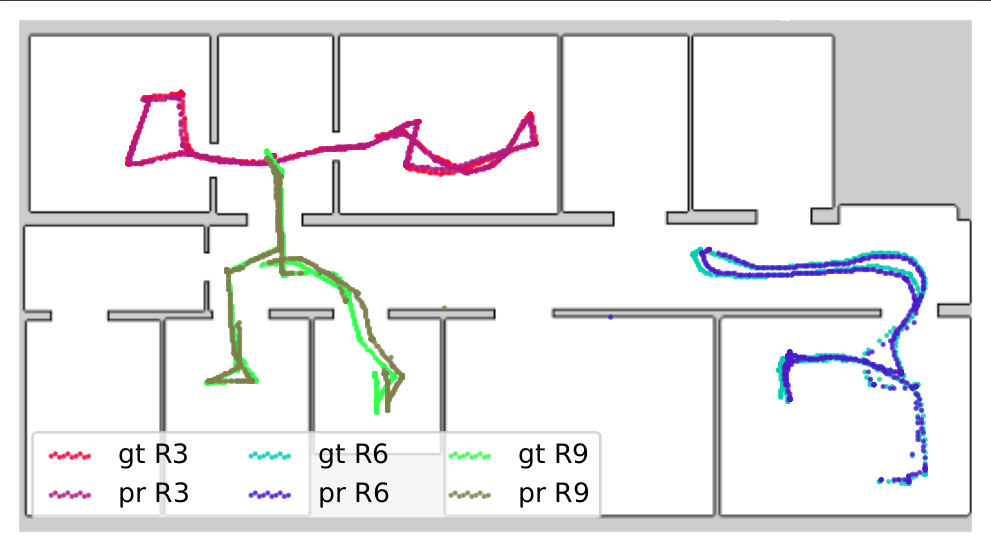

For the quantitative evaluation, we collected data over 9 months, traversing our entire floor. Our data collection platform is a Kuka YouBot, and we recorded 4 RGB camera streams, ground-truth poses, and wheel odometry. We used a 30-minute sequence to construct an object-based map of our lab, enriching our floor plan with geometric and semantic information. We then localized on challenging sequences, spanning 9 months, which include dynamics such as humans and changes to the furniture. The images below show a visualization of our 3D metric-semantic map and qualitative results for the localization approach evaluated on our map.

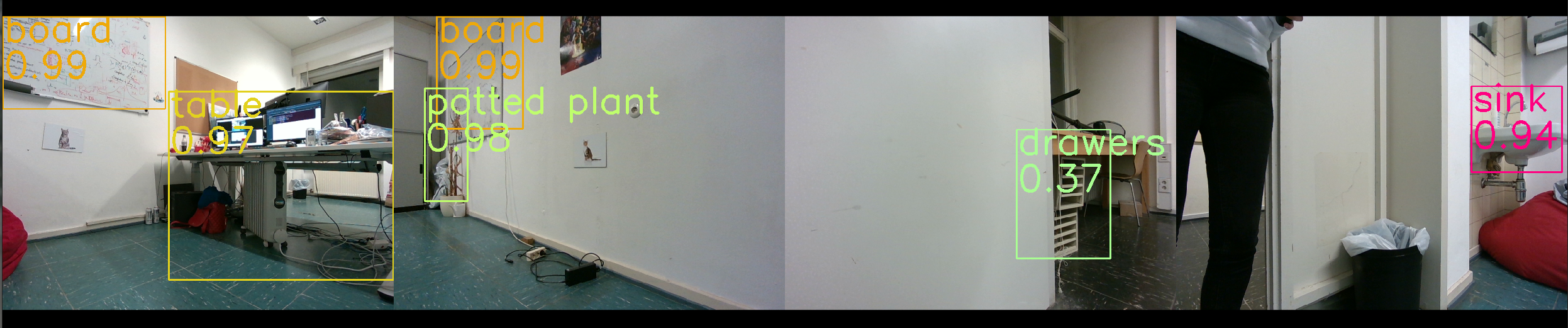

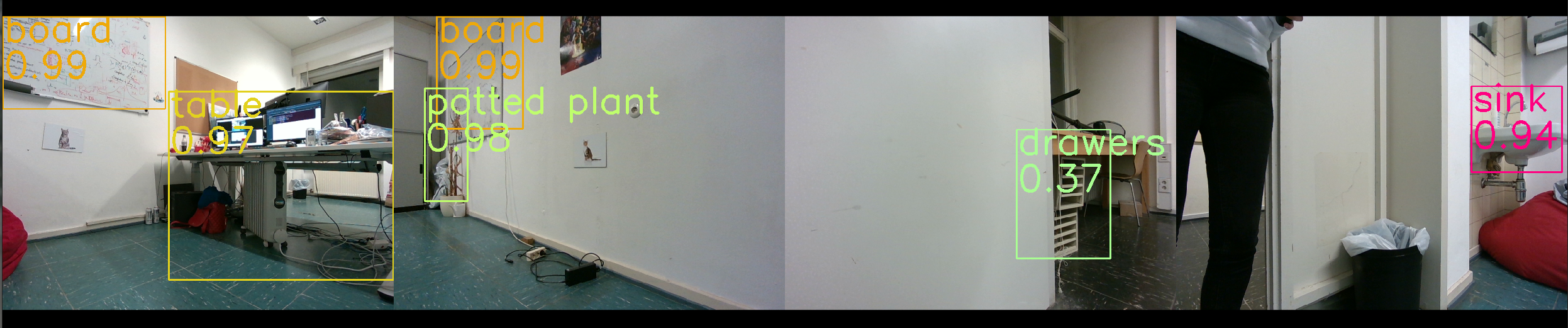

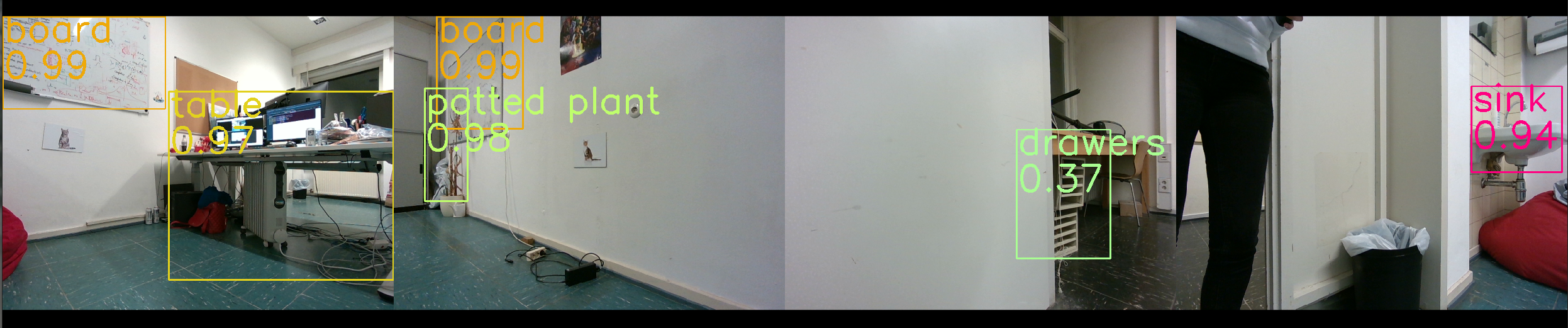

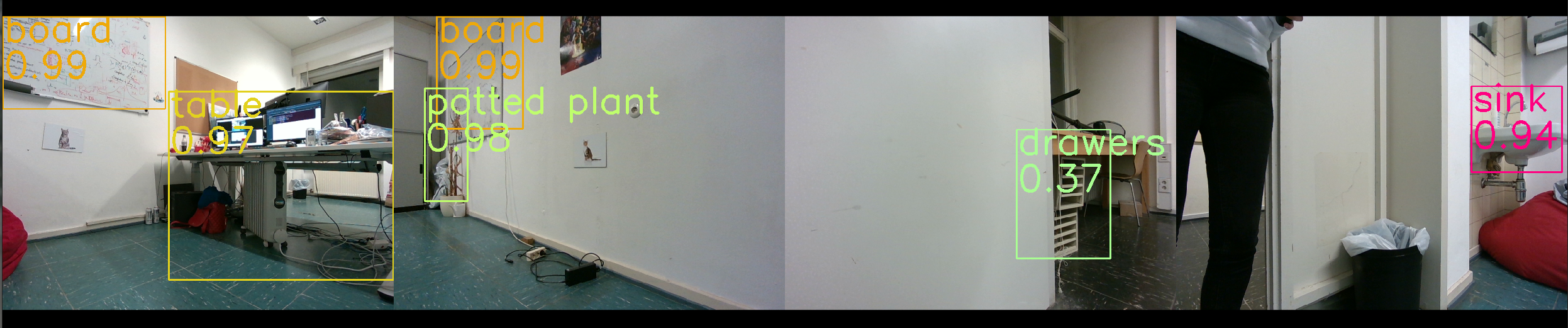

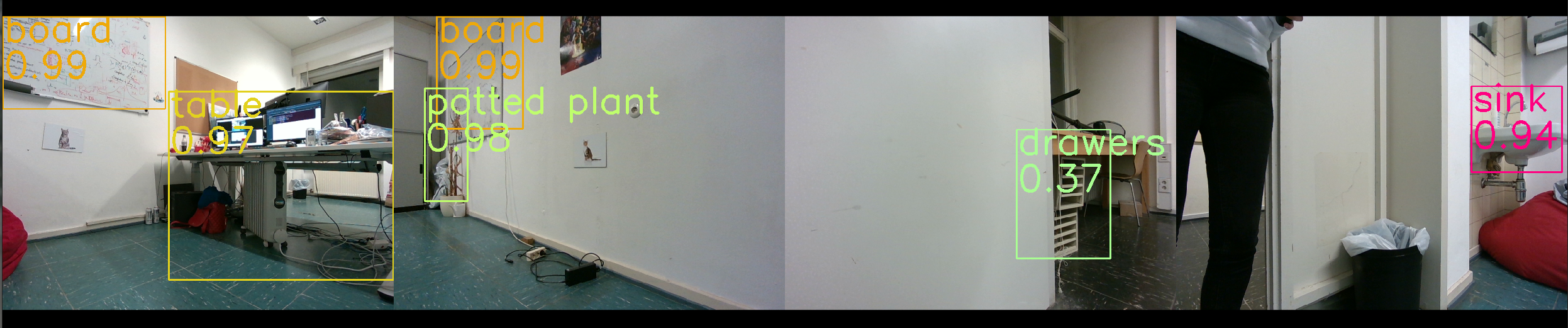

3D Object Detection

We use Omni3D and Cube R-CNN for object detection, but in principle they can be replaced by any other model that provides our algorithm with class predictions, confidence scores, and 3D bounding boxes expressed as translation, rotation, and scale. Our trained model can be downloaded here.
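As a rough illustration of the detector interface described above, the following Python sketch shows one way a single detection could be represented and turned into box corners. The `Detection3D` class, its field names, the row-major rotation-matrix convention, the camera-frame assumption, and both helper functions are hypothetical and are not taken from the SIMP code base or from Omni3D.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass(frozen=True)
class Detection3D:
    """Hypothetical container for one detection; names are illustrative only."""
    label: str          # class prediction
    confidence: float   # confidence score, assumed to lie in [0, 1]
    translation: Vec3   # box center (assumed to be in the camera frame)
    rotation: Mat3      # 3x3 rotation matrix, row-major (assumed convention)
    scale: Vec3         # full box extents along the box's local x, y, z axes


def box_corners(det: Detection3D) -> List[Vec3]:
    """Return the 8 corners of the oriented box as R * (signs * scale) + t."""
    corners = []
    for signs in product((-0.5, 0.5), repeat=3):
        # Corner in the box's local frame: half the extent along each axis.
        local = [s * e for s, e in zip(signs, det.scale)]
        # Rotate into the parent frame and shift by the box center.
        corner = tuple(
            sum(det.rotation[row][col] * local[col] for col in range(3))
            + det.translation[row]
            for row in range(3)
        )
        corners.append(corner)
    return corners


def filter_confident(dets: Sequence[Detection3D], min_conf: float) -> List[Detection3D]:
    """Keep only detections whose confidence is at least min_conf."""
    return [d for d in dets if d.confidence >= min_conf]
```

Any detector whose output can be mapped onto a record of this shape would, under these assumptions, fit the interface the paragraph describes; the confidence threshold is a placeholder to be tuned for the target environment.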

Installation

For our evaluation, we installed the code as suggested in the native installation instructions. We provide Docker installations for both ROS 2 Foxy and ROS Noetic, but we did not evaluate our code with them, so performance may vary.

Docker Installation for ROS 2 Foxy

Make sure you have installed Docker Engine and the NVIDIA Container Toolkit so you can run Docker with GPU support.

In the SIMP folder, run

sudo make foxy=1

to build the Docker image. Then download the external resources on the host machine

cd /SIMP/ros2_ws/src/omni3d_ros && git clone https://github.com/FullMetalNicky/omni3d.git

cd /SIMP/ros2_ws/src/omni3d_ros && mkdir models/ && cd models/ && curl -LO https://www.ipb.uni-bonn.de/html/projects/simp/model_recent.pth

To enable RViz visualization from the Docker container, run on the host machine

xhost +local:docker

To run the Docker container

sudo make run foxy=1

Then, inside the container, build the code

cd ncore && mkdir build && cd build && cmake .. -DBUILD_TESTING=1 && make -j12

cd ros2_ws && . /opt/ros/foxy/setup.bash && colcon build && . install/setup.bash

You can run the localization node using

ros2 launch nmcl_ros confignmcl.launch dataFolder:="/SIMP/ncore/data/floor/GTMap/"

And the mapping node using

ros2 launch omni3d_ros omni3d.launch

Docker Installation for ROS Noetic

Make sure you have installed Docker Engine and the NVIDIA Container Toolkit so you can run Docker with GPU support.

In the SIMP folder, run

sudo make noetic=1

to build the Docker image. Then download the external resources on the host machine

cd /SIMP/ros1_ws/src/ && git clone https://github.com/FullMetalNicky/omni3d.git

cd /SIMP/ros1_ws/src/omni3d_ros && mkdir models/ && cd models/ && curl -LO https://www.ipb.uni-bonn.de/html/projects/simp/model_recent.pth

To enable RViz visualization from the Docker container, run on the host machine

xhost +local:docker

To run the Docker container

sudo make run noetic=1

Then, inside the container, build the code

cd ncore && mkdir build && cd build && cmake .. -DBUILD_TESTING=1 && make -j12

cd ros1_ws && . /opt/ros/noetic/setup.bash && catkin_make && source devel/setup.bash

You can run the localization node using

File truncated at 100 lines see the full file

Repository Summary

| Description | |

| Checkout URI | https://github.com/prbonn/simp.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-11-30 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| data_processing | 0.0.0 |

| nmcl_msgs | 0.0.0 |

| nmcl_ros | 0.0.0 |

| omni3d_ros | 0.0.0 |

README

SIMP: Constructing Metric-Semantic Maps Using Floor Plan Priors for Long-Term Indoor Localization

This repository contains the implementation of the following publication:

@inproceedings{zimmerman2023iros,

author = {Zimmerman, Nicky and Sodano, Matteo and Marks, Elias and Behley, Jens and Stachniss, Cyrill},

title = {{Constructing Metric-Semantic Maps Using Floor Plan Priors for Long-Term Indoor Localization}},

booktitle = {IEEE/RSJ Intl.~Conf.~on Intelligent Robots and Systems (IROS)},

year = {2023}

}

Abstract

Object-based maps are relevant for scene understanding since they integrate geometric and semantic information of the environment, allowing autonomous robots to robustly localize and interact with on objects. In this paper, we address the task of constructing a metric-semantic map for the purpose of long-term object-based localization. We exploit 3D object detections from monocular RGB frames for both, the object-based map construction, and for globally localizing in the constructed map. To tailor the approach to a target environment, we propose an efficient way of generating 3D annotations to finetune the 3D object detection model. We evaluate our map construction in an office building, and test our long-term localization approach on challenging sequences recorded in the same environment over nine months. The experiments suggest that our approach is suitable for constructing metric-semantic maps, and that our localization approach is robust to long-term changes. Both, the mapping algorithm and the localization pipeline can run online on an onboard computer. We release an open-source C++/ROS implementation of our approach.

Results

Our live demos for both localization and mapping on the Dingo-O platform can seen in the following video:

The Dingo-O is mounted with Intel NUC10i7FNK and NVidia Jetson Xavier AGX, both running Ubuntu 20.04. Both mapping and localization can be executed onboard of the Dingo.

For the quantitative evaluation, we collected data for 9 months, traversing our entire floor. Our data collection platform is a Kuka YouBot, and we recorded 4 RGB camera streams, ground truth poses and wheel odometry. We used a 30-minutes long sequence to construct an object-based map of our lab, enriching our floor plan with geometric and semantic information. We then localized on challenging sequences, spanning over 9 months, which include dynamics such a humans and changes to the furniture. A visualization of our 3D metric-semantic map, and qualitative results for the localization approach evaluated on our map

3D Object Detection

We use Omni3D & Cube R-CNN for object detection, but in theory it can be replaced by any other model that provides our algorithm with class prediction, confidence score and 3D bounding boxes expressed as translation, rotation and scale. Our trained model can be downloaded here.

Installation

For our evaluation we installed the code as suggested in the native installation instructions. We provide Docker installations for both ROS 2 Foxy and ROS Noetic, but we did not evaluate our code with them so performance may vary.

Docker Installation for ROS 2 Foxy

Make sure you installed Docker Engine and NVidia container so you can run Dokcer with GPU support.

In the SIMP folder run

sudo make foxy=1

This builds the Docker image. Then download the external resources on the host machine:

cd /SIMP/ros2_ws/src/omni3d_ros && git clone https://github.com/FullMetalNicky/omni3d.git

cd /SIMP/ros2_ws/src/omni3d_ros && mkdir models/ && cd models/ && curl -LO https://www.ipb.uni-bonn.de/html/projects/simp/model_recent.pth
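Before continuing, it may help to confirm that both downloads landed where the commands above put them. The following is a small sketch using only the Python standard library. The helper name `missing_resources` is hypothetical, and the expected paths are inferred from the two commands above, not from the SIMP code itself.

```python
from pathlib import Path
from typing import List

# Paths relative to the workspace's src/ folder, inferred from the two commands above.
EXPECTED = (
    Path("omni3d_ros/omni3d"),                   # directory created by `git clone`
    Path("omni3d_ros/models/model_recent.pth"),  # file fetched by `curl -LO`
)


def missing_resources(src_dir: Path) -> List[Path]:
    """Return the expected paths that are absent (or are empty files) under src_dir."""
    missing = []
    for rel in EXPECTED:
        p = src_dir / rel
        if not p.exists() or (p.is_file() and p.stat().st_size == 0):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    # Assumes the ROS 2 workspace location used in the commands above.
    for rel in missing_resources(Path("/SIMP/ros2_ws/src")):
        print(f"missing: {rel}")
```

An empty result suggests the clone and the model download both completed. For the ROS Noetic workspace the paths would need to be adjusted to match its commands.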

To enable RViz visualization from the Docker container, run on the host machine:

xhost +local:docker

To run the Docker container:

sudo make run foxy=1

Then, inside the Docker container, build the code:

cd ncore && mkdir build && cd build && cmake .. -DBUILD_TESTING=1 && make -j12

cd ros2_ws && . /opt/ros/foxy/setup.bash && colcon build && . install/setup.bash

You can run the localization node using:

ros2 launch nmcl_ros confignmcl.launch dataFolder:="/SIMP/ncore/data/floor/GTMap/"

You can run the mapping node using:

ros2 launch omni3d_ros omni3d.launch

Docker Installation for ROS Noetic

Make sure you have installed Docker Engine and the NVIDIA container support so you can run Docker with GPU support.

In the SIMP folder, run:

sudo make noetic=1

This builds the Docker image. Then download the external resources on the host machine:

cd /SIMP/ros1_ws/src/ && git clone https://github.com/FullMetalNicky/omni3d.git

cd /SIMP/ros1_ws/src/omni3d_ros && mkdir models/ && cd models/ && curl -LO https://www.ipb.uni-bonn.de/html/projects/simp/model_recent.pth

To enable RViz visualization from the Docker container, run on the host machine:

xhost +local:docker

To run the Docker container:

sudo make run noetic=1

Then, inside the Docker container, build the code:

cd ncore && mkdir build && cd build && cmake .. -DBUILD_TESTING=1 && make -j12

cd ros1_ws && . /opt/ros/noetic/setup.bash && catkin_make && source devel/setup.bash

You can run the localization node using

File truncated at 100 lines see the full file
