Repository Summary

| Description | Dockerized ROS2 stack for the WATonomous Autonomous Driving Software Pipeline |
| Checkout URI | https://github.com/watonomous/wato_monorepo.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2026-02-24 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |


WATonomous Monorepo (for EVE)

Dockerized monorepo for the WATonomous autonomous vehicle project (dubbed EVE).

Prerequisite Installation

These steps set up the monorepo to work on your own PC. We use Docker to make the stack easy to reproduce and deploy.

Why Docker? So that you don't need to install any coding libraries on your bare-metal PC, saving you a headache :3

- Our monorepo infrastructure supports Ubuntu Linux >= 22.04, Windows (via WSL/WSL2), and macOS. However, some parts of this repo may require specific hardware, such as NVIDIA GPUs.

- Once inside Linux, install Docker Engine using the `apt` repository. If you are using WSL, install Docker outside of WSL (on the Windows side); it will automatically set up Docker within WSL for you.

- You're all set! Information on running the monorepo with our infrastructure is given here.
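To sanity-check the prerequisites above, a small script can help. The following is a hypothetical Python sketch, not a tool shipped in the monorepo. It assumes a Linux-style `/etc/os-release` file, treats a successful `docker info` as "Docker Engine is installed and the daemon is running", and uses `nvidia-smi` on `PATH` as a rough proxy for an NVIDIA GPU driver. The function names are illustrative only.

```python
"""Hypothetical prerequisite check for the monorepo setup (not part of the repo)."""
import platform
import shutil
import subprocess


def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines from /etc/os-release content, stripping quotes."""
    info: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def is_supported_ubuntu(info: dict[str, str], minimum: tuple[int, int] = (22, 4)) -> bool:
    """Return True if the distro is Ubuntu with VERSION_ID >= minimum (22.04 by default)."""
    if info.get("ID") != "ubuntu":
        return False
    try:
        # "22.04" -> (22, 4); a missing or malformed version raises ValueError.
        major, minor = (int(part) for part in info.get("VERSION_ID", "").split(".")[:2])
    except ValueError:
        return False
    return (major, minor) >= minimum


def docker_daemon_reachable() -> bool:
    """Assumption: `docker info` exits with code 0 only when the daemon is reachable."""
    if shutil.which("docker") is None:
        return False
    try:
        result = subprocess.run(["docker", "info"], capture_output=True, timeout=15)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


def main() -> None:
    try:
        with open("/etc/os-release", encoding="utf-8") as f:
            info = parse_os_release(f.read())
    except OSError:
        info = {}  # e.g. macOS, which has no /etc/os-release
    # WSL kernels report "microsoft" in their release string.
    in_wsl = "microsoft" in platform.release().lower()
    print("Operating system:", platform.system())
    print("Ubuntu >= 22.04:", is_supported_ubuntu(info))
    print("Running under WSL:", in_wsl)
    print("docker CLI found:", shutil.which("docker") is not None)
    print("Docker daemon reachable:", docker_daemon_reachable())
    # Only some parts of the repo may need an NVIDIA GPU.
    print("nvidia-smi found:", shutil.which("nvidia-smi") is not None)


if __name__ == "__main__":
    main()
```

Parsing `/etc/os-release` is kept separate from the system probes so the version check can be reused or tested without touching the host.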

Available Modules

- Infrastructure: Starts the Foxglove bridge and the data streamer for rosbags.
- Interfacing: Launches packages that connect directly to hardware, including the car's sensors and the car itself (see docs).
- Perception: Launches packages for perception (see docs).
- World Modeling: Launches packages for world modeling (see docs).
- Action: Launches packages for action (see docs).
- Simulation: Launches packages for the CARLA simulator (see docs).

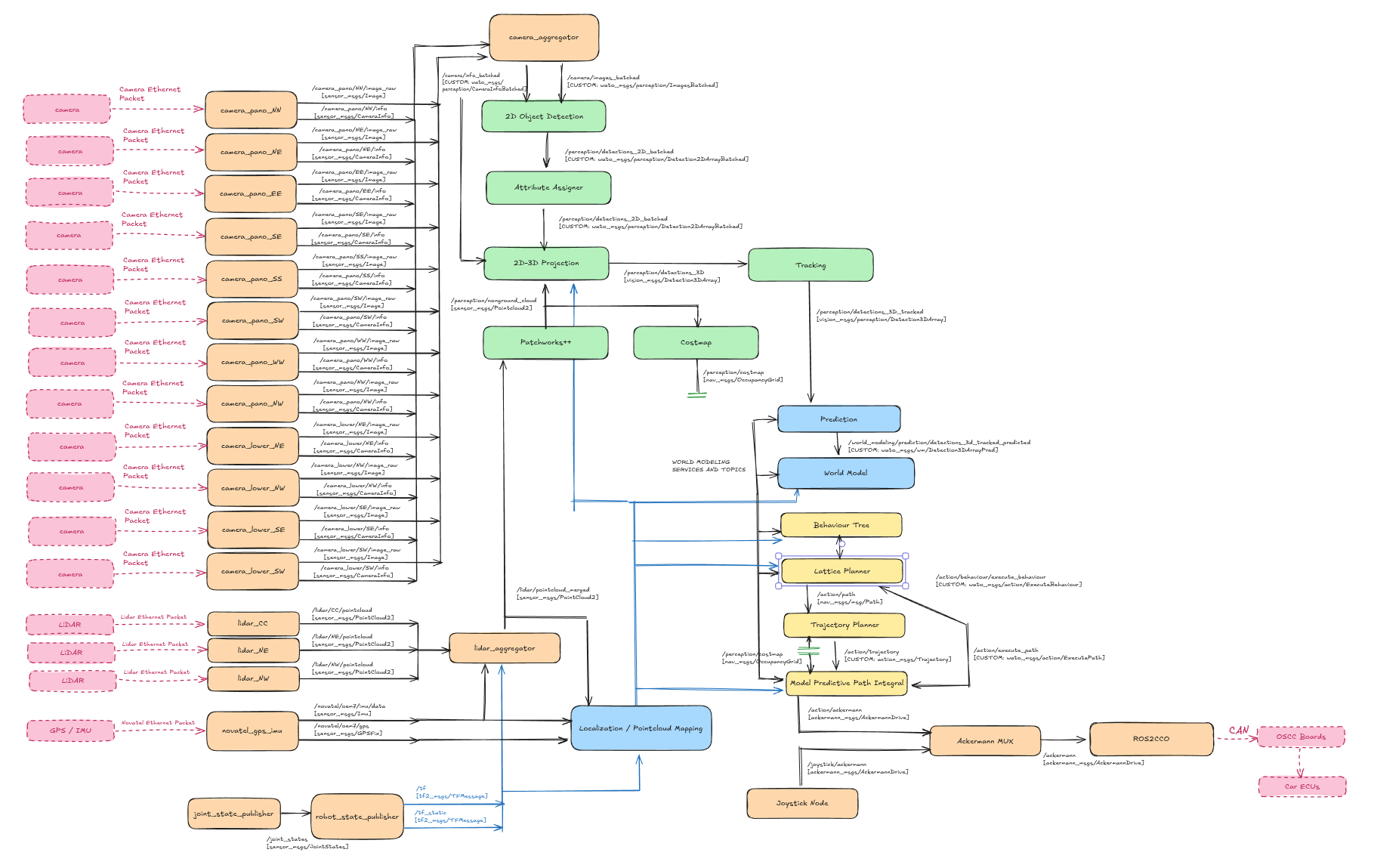

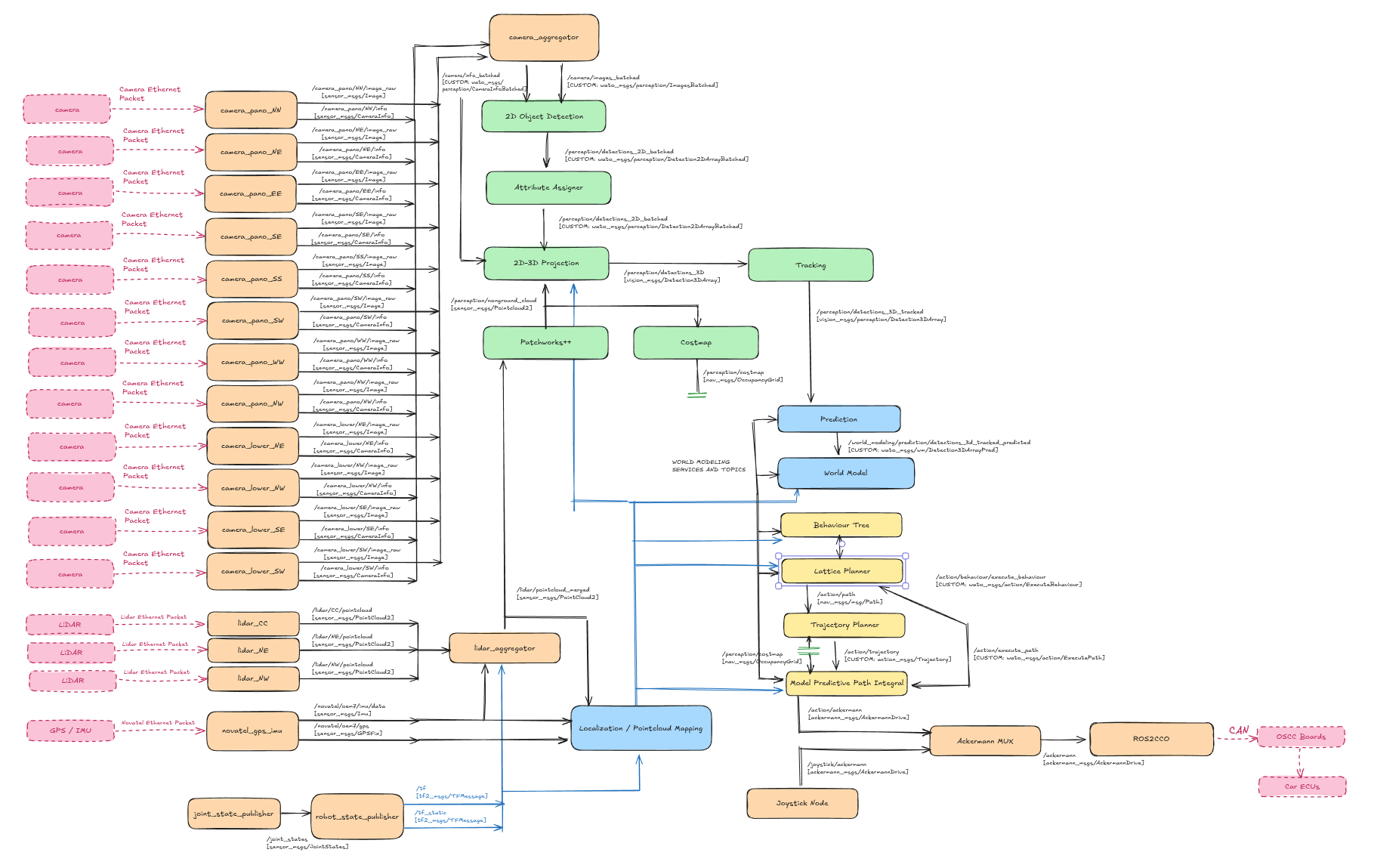

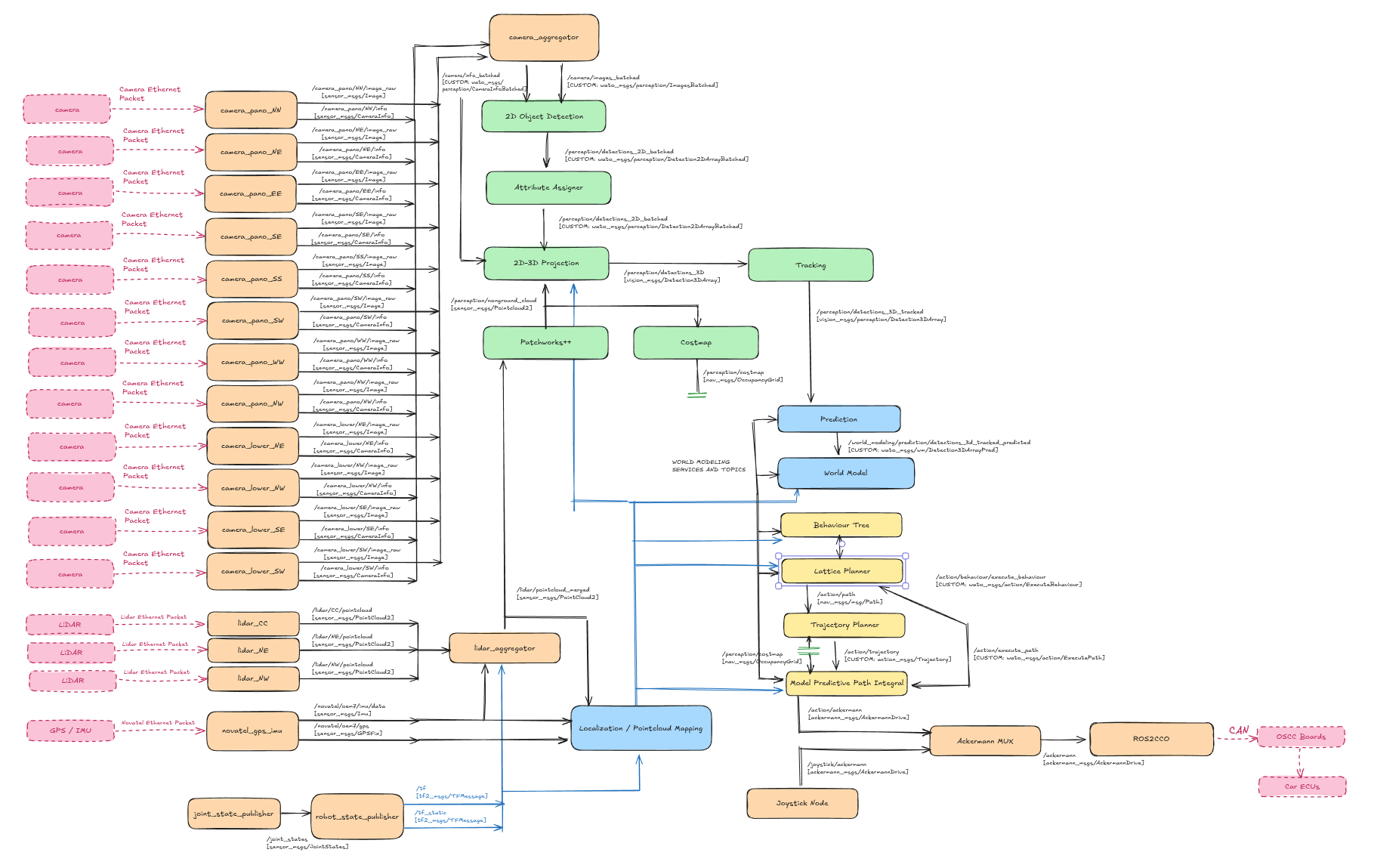

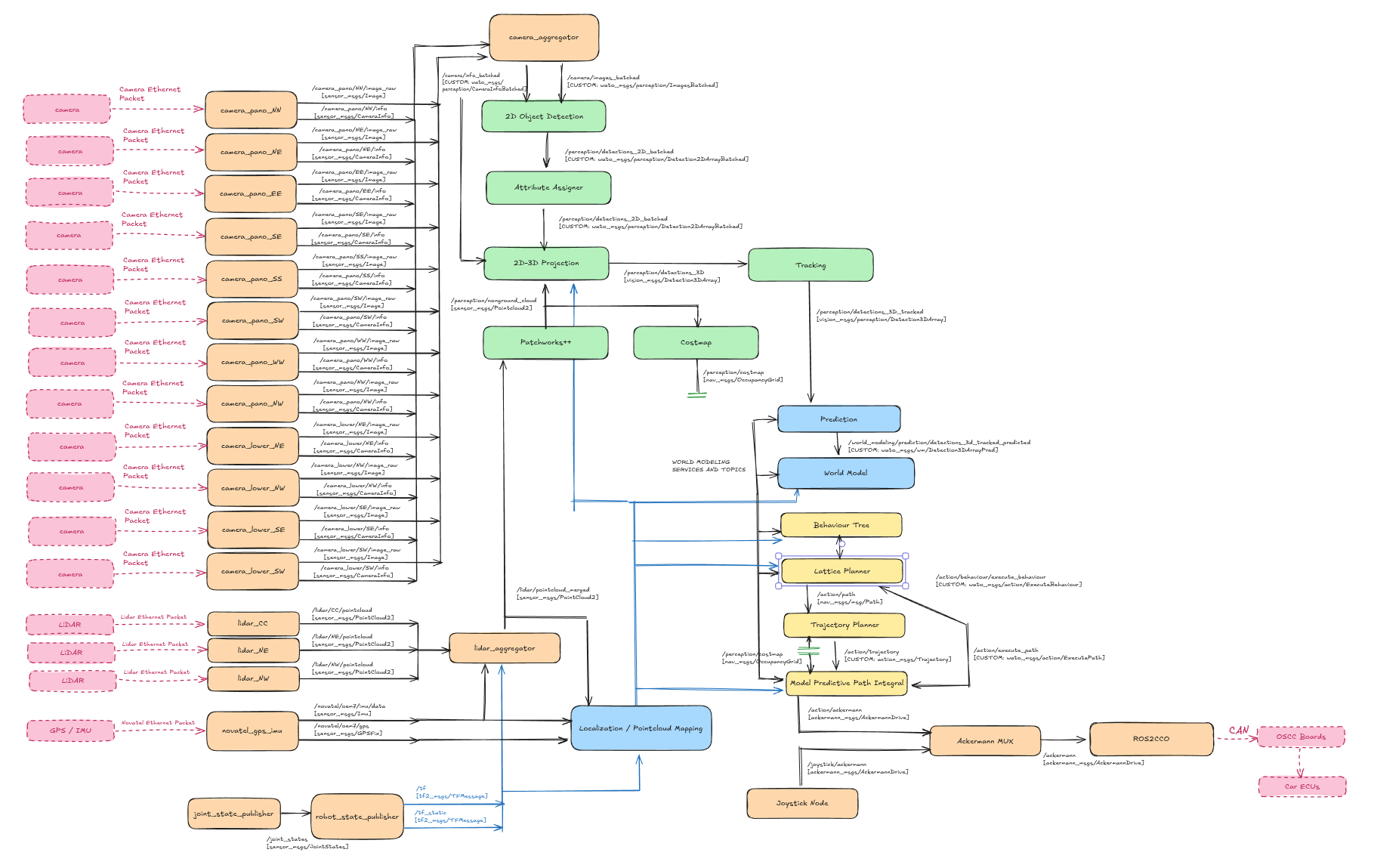

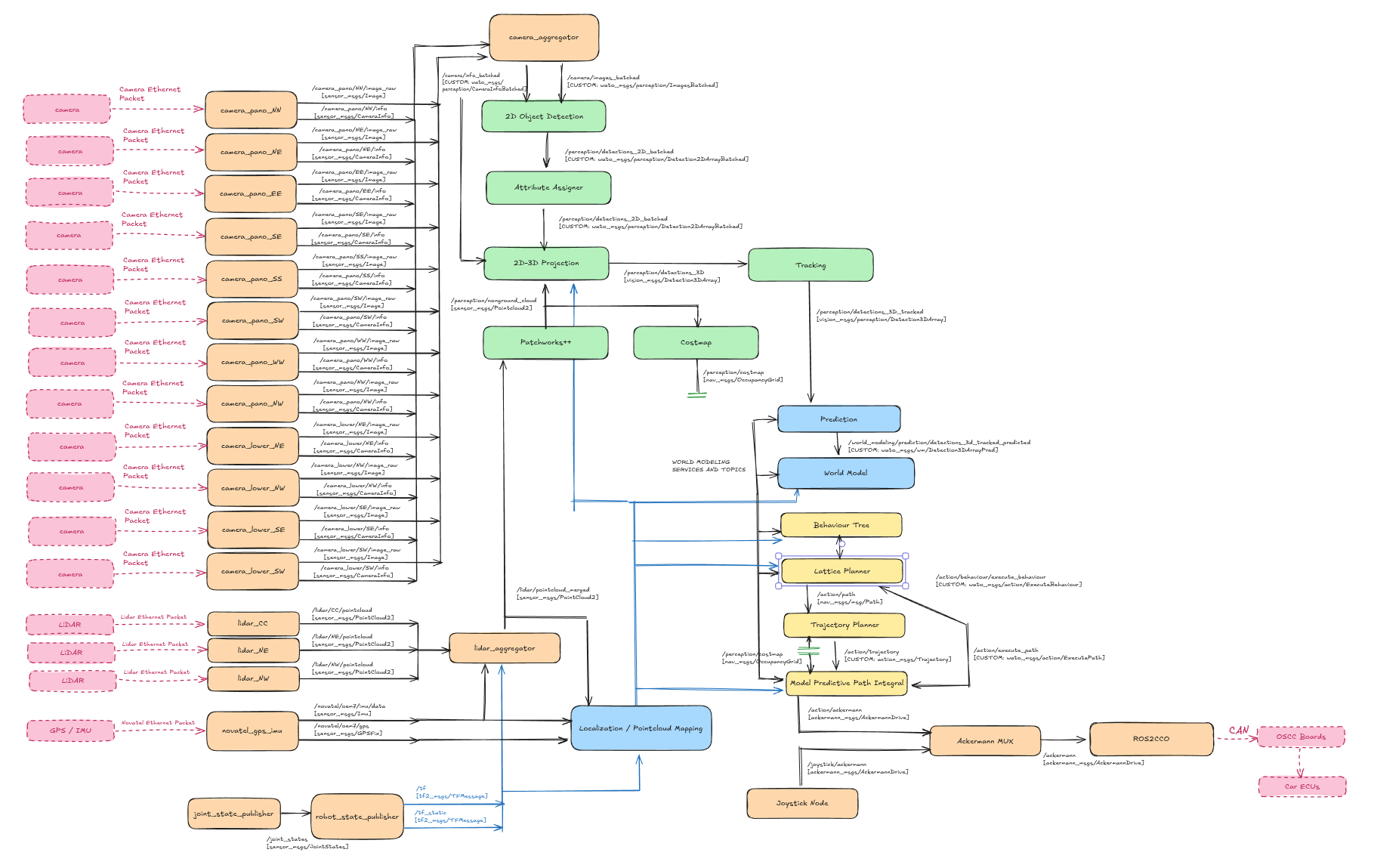

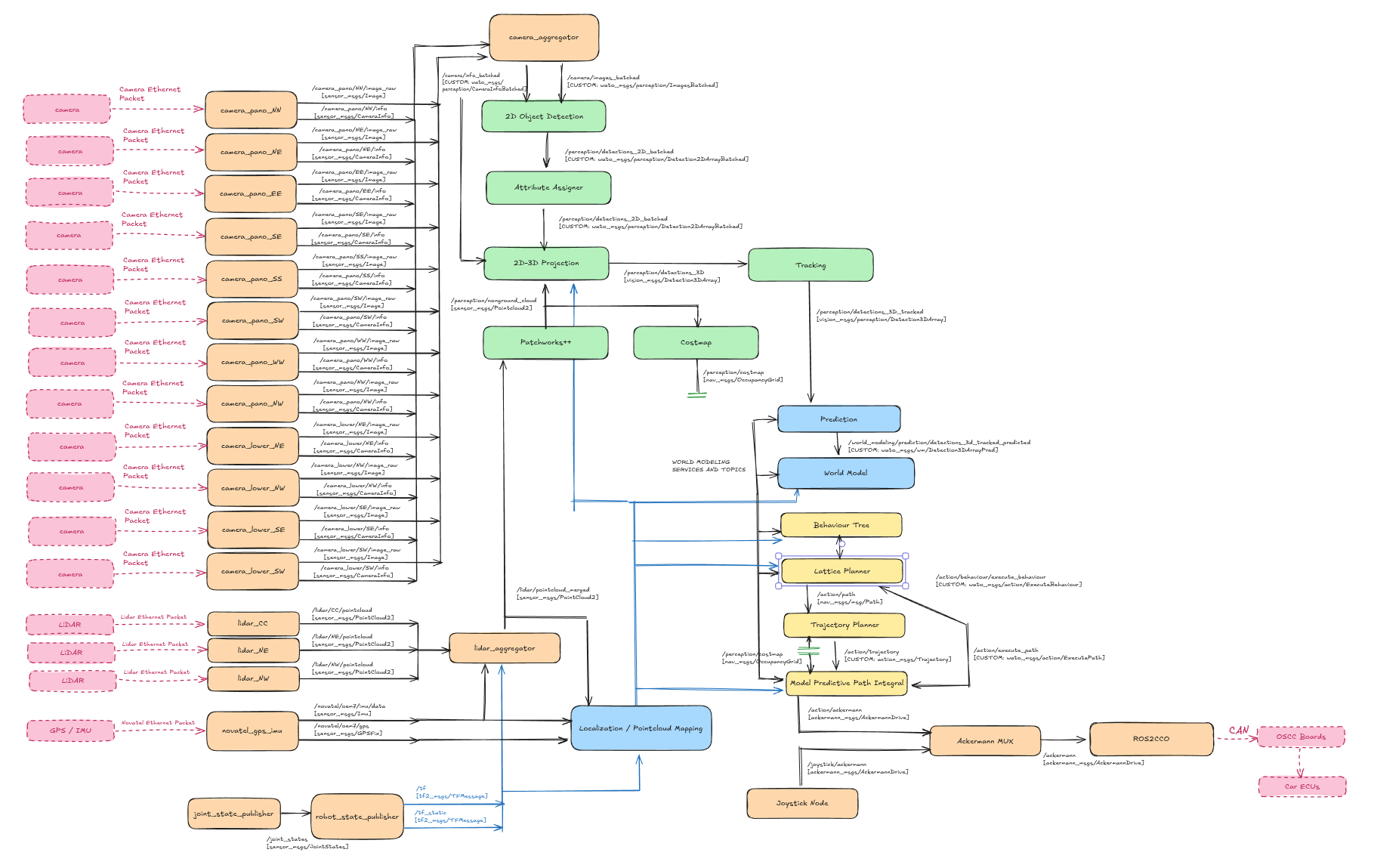

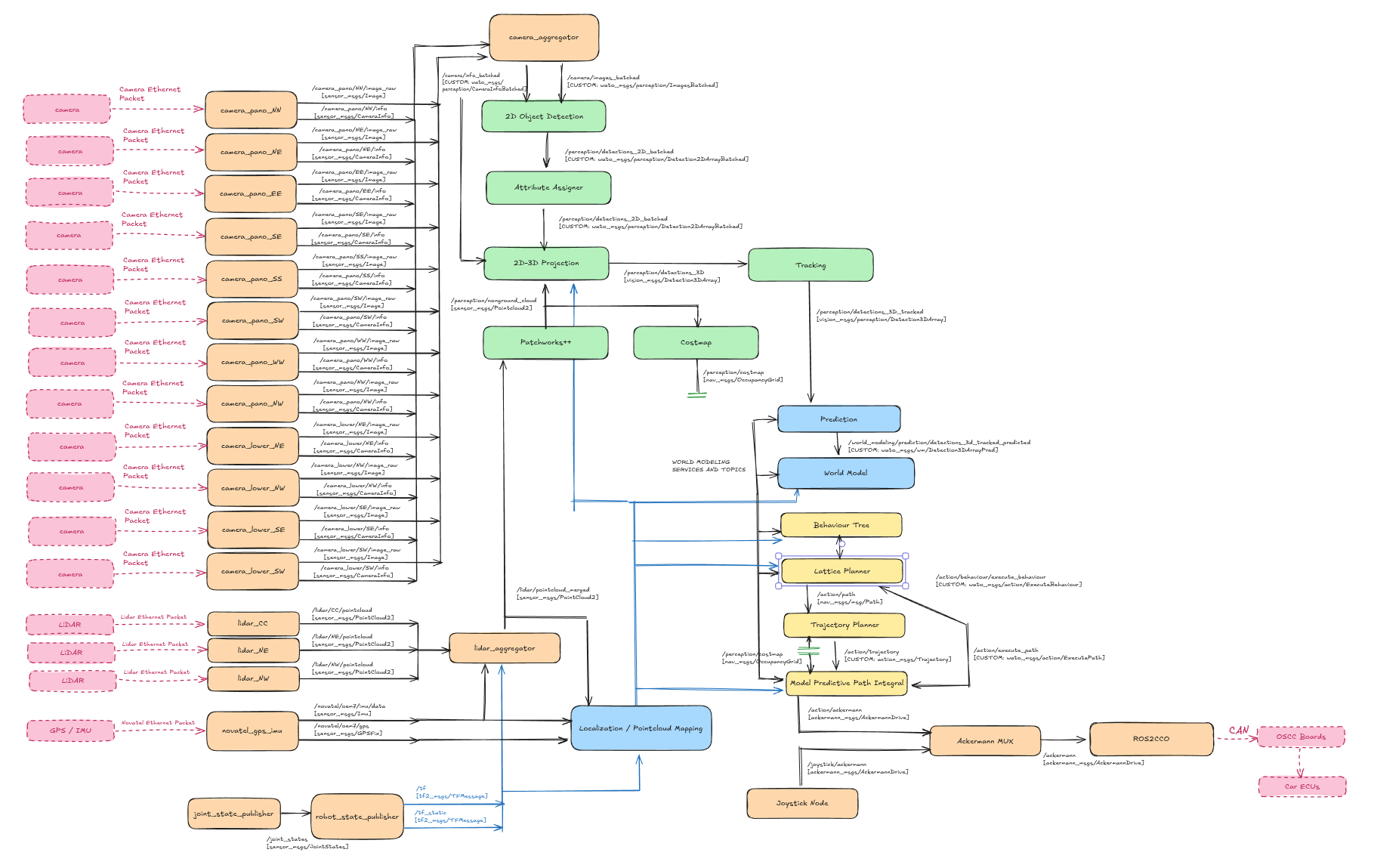

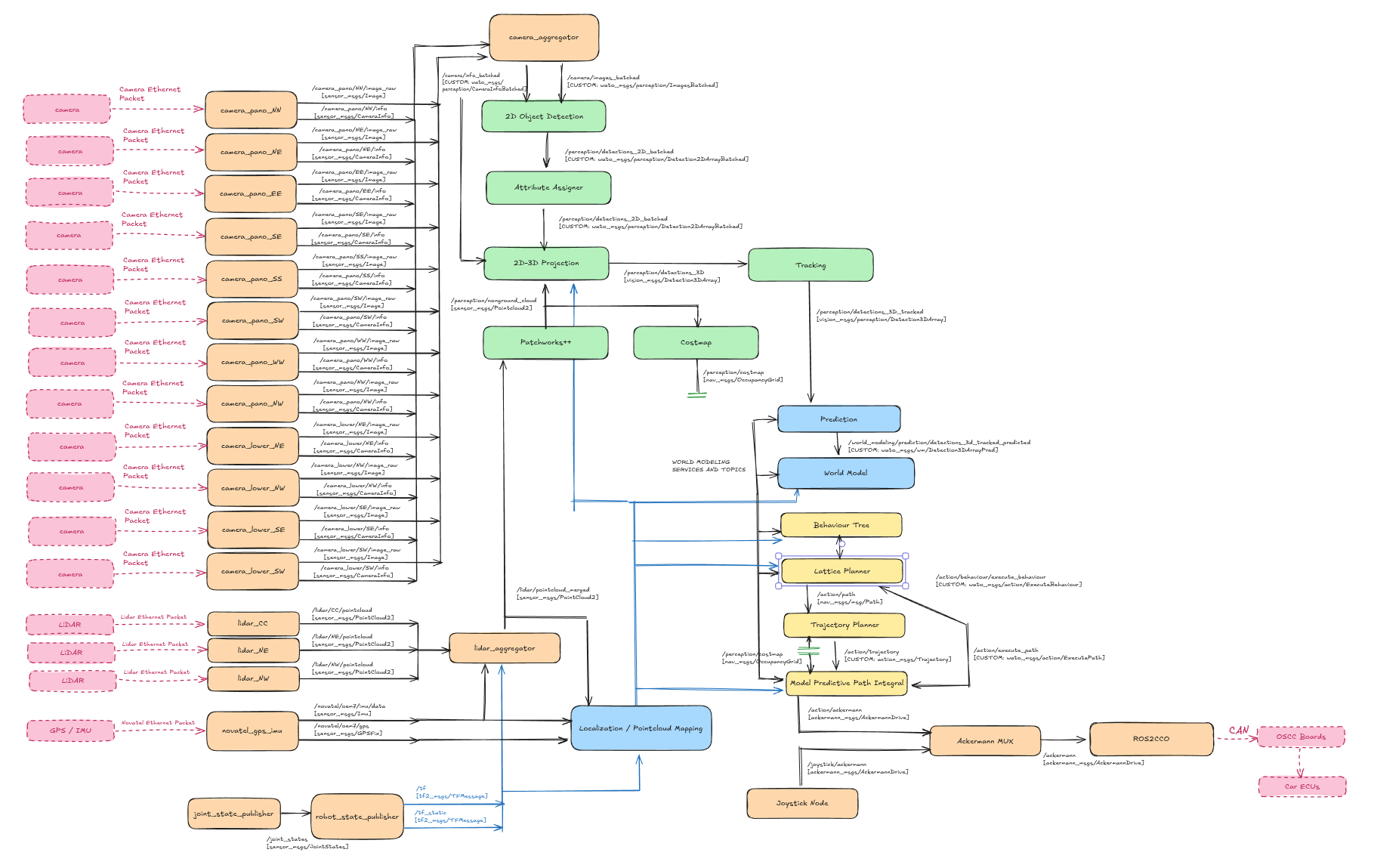

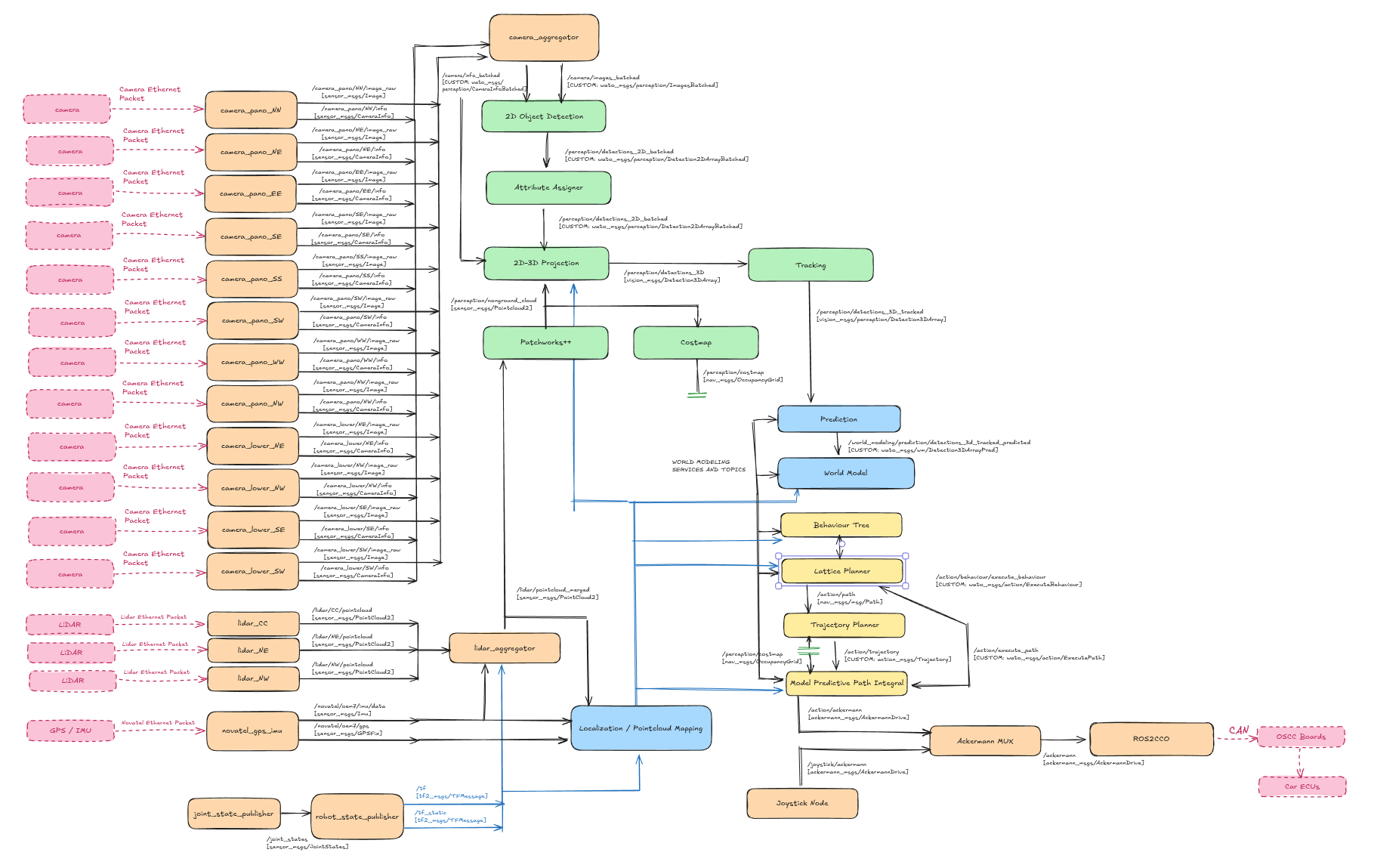

System Architecture

Contribute

Information on contributing to the monorepo is given in DEVELOPING.md
