Package Summary

| Field | Value |
|---|---|
| Version | 0.50.0 |
| License | Apache License 2.0 |
| Build type | AMENT_CMAKE |
| Use | RECOMMENDED |

Repository Summary

| Field | Value |
|---|---|
| Description | |
| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2026-02-25 |
| Dev Status | UNKNOWN |
| Released | UNRELEASED |


Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It uses the VAD model (Jiang et al., 2023), optimized for deployment with NVIDIA's DL4AGX TensorRT framework.

This module replaces the traditional perception and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data; ego odometry and acceleration are still taken from localization (see Inputs). It is designed to run within the Autoware framework.

Features

- Monolithic End-to-End Architecture: A single neural network maps camera inputs directly to trajectories, replacing the traditional perception-planning pipeline with one unified model; there are no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

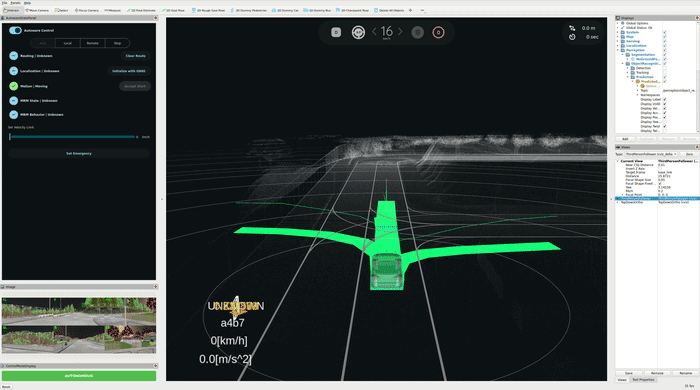

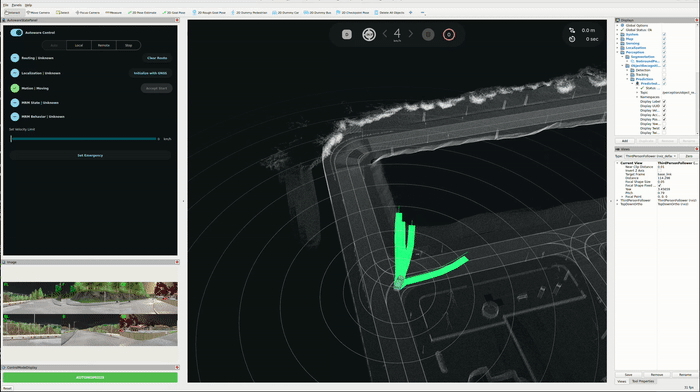

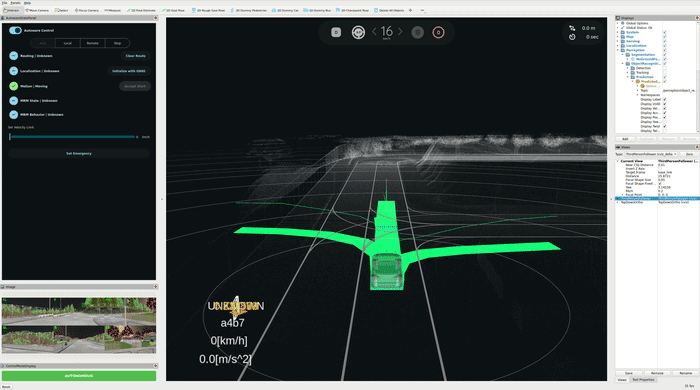

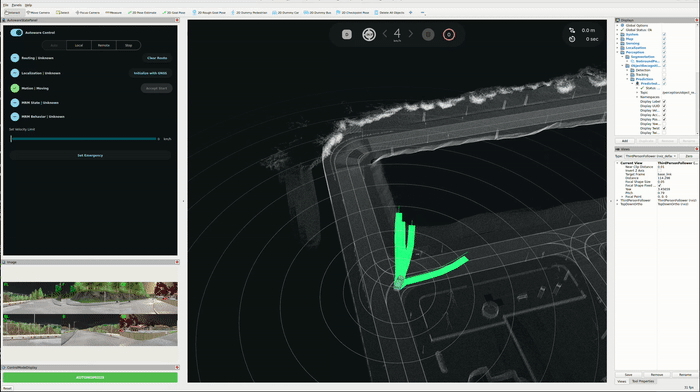

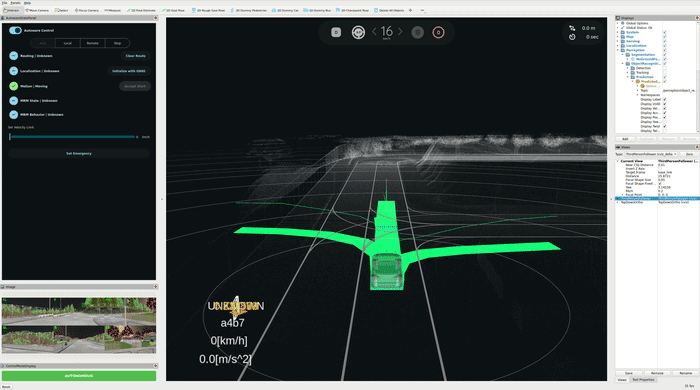

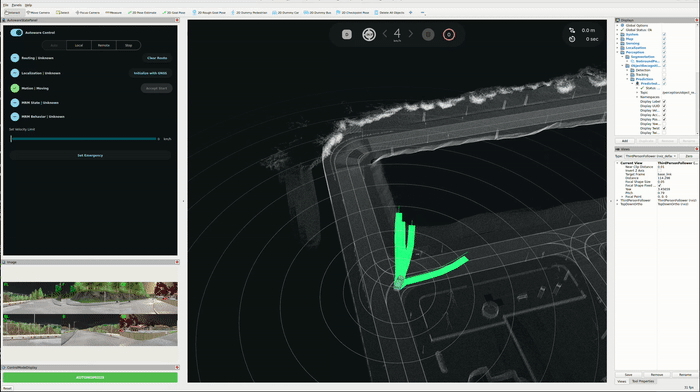

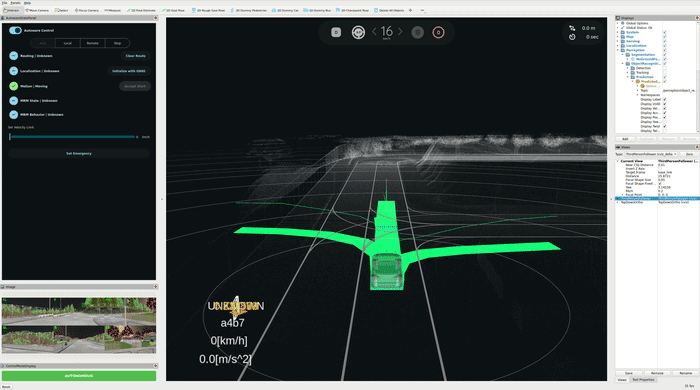

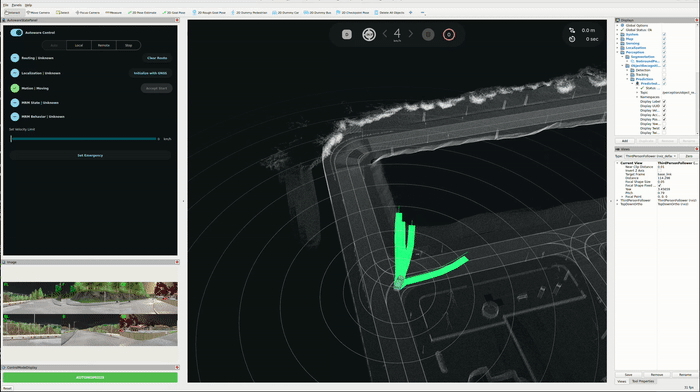

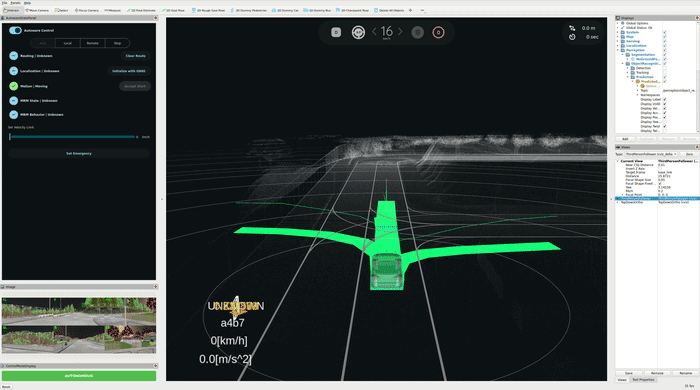

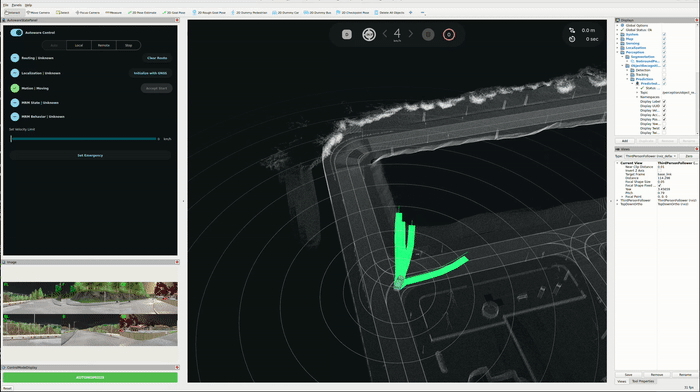

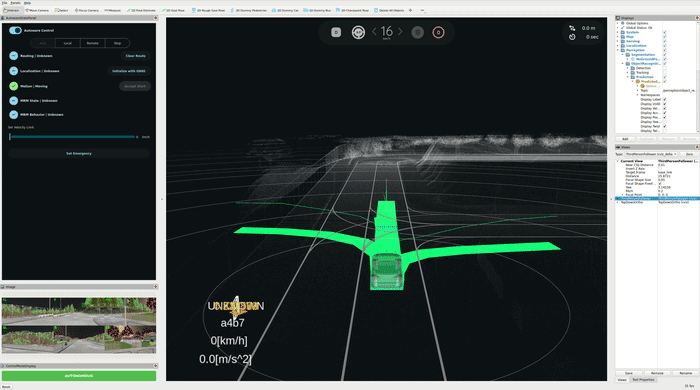

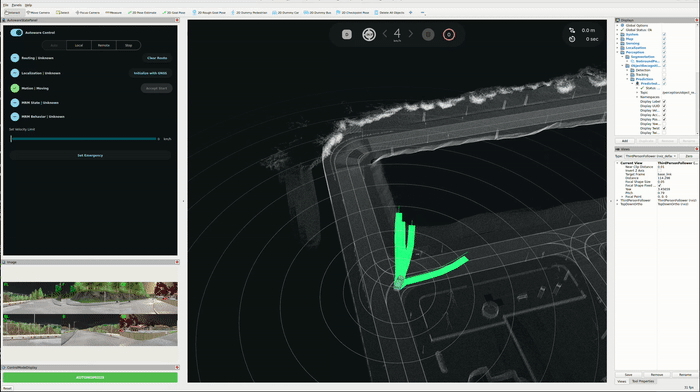

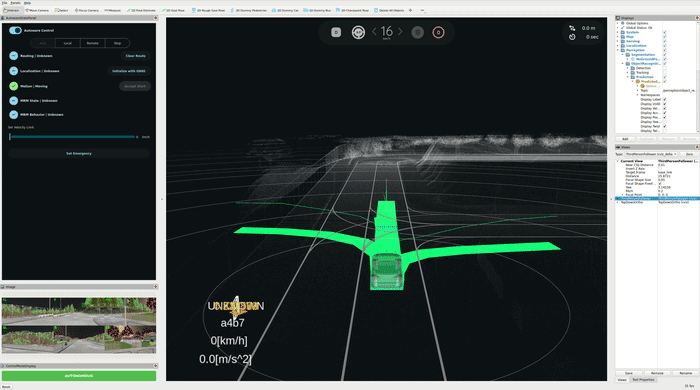

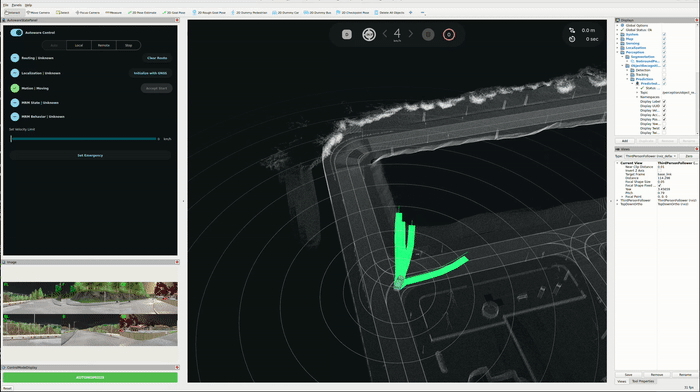

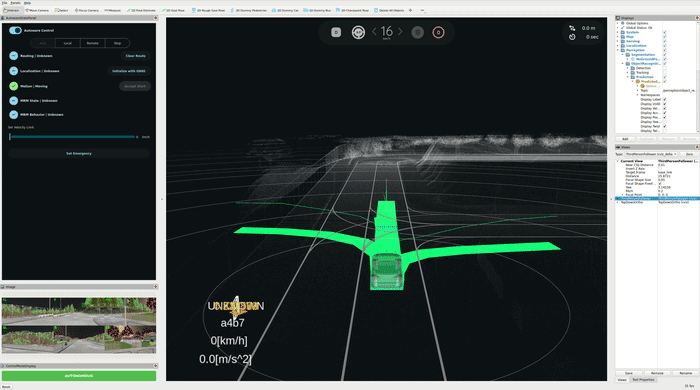

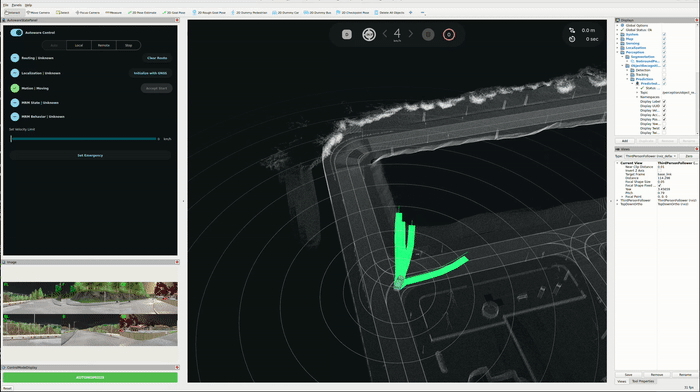

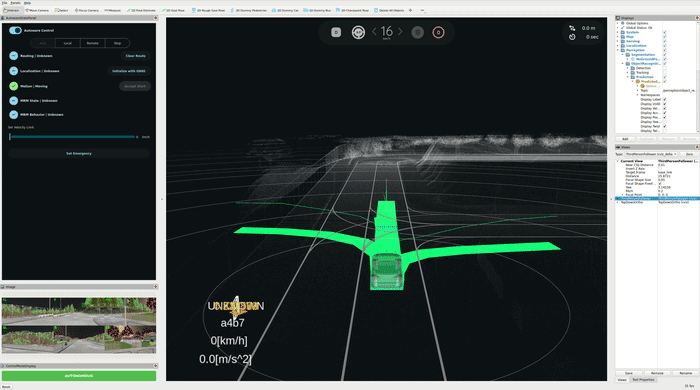

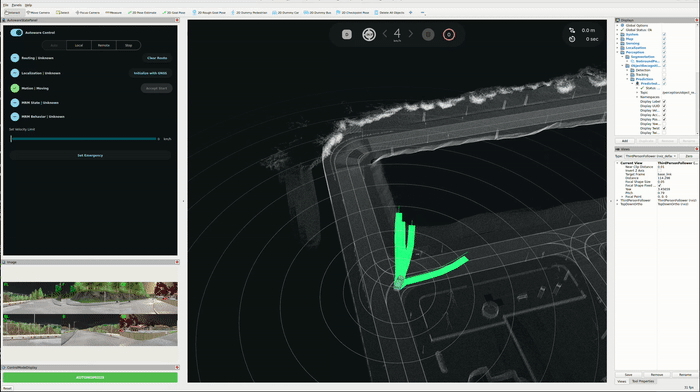

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters): config/vad_carla_tiny.param.yaml

- Model architecture parameters: vad-carla-tiny.param.json (downloaded with the model to ~/autoware_data/vad/v0.1/)
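Before launching, it can help to confirm that the model files referenced in this README are actually present. The following is a minimal sketch, assuming the JSON parameter file and the three ONNX files named in the launch file description sit in one directory (this README places the JSON under ~/autoware_data/vad/v0.1/, while the launch default model_path is $(var data_path)/vad, so pass whichever directory holds your files). `check_vad_model_dir` is a hypothetical helper, not part of the package.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not shipped with autoware_tensorrt_vad): report which of
# the model files named in this README are missing from a directory.
# Assumption: the JSON parameters and all three ONNX files share one directory.
check_vad_model_dir() {
  local dir="$1"
  if [[ -z "${dir}" ]]; then
    echo "usage: check_vad_model_dir <model_dir>" >&2
    return 2
  fi

  local missing=0 f
  for f in \
    vad-carla-tiny.param.json \
    vad-carla-tiny_backbone.onnx \
    vad-carla-tiny_head_no_prev.onnx \
    vad-carla-tiny_head.onnx; do
    if [[ ! -f "${dir}/${f}" ]]; then
      echo "missing: ${dir}/${f}" >&2
      missing=1
    fi
  done

  # Exit status is 0 only when every expected file exists.
  return "${missing}"
}
```

For example, `check_vad_model_dir "$HOME/autoware_data/vad/v0.1" && echo "VAD model files found"`. TensorRT engine files are deliberately not checked, since the launch file description states they are built automatically on first run.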

Inputs

| Topic | Message Type | Description |
|---|---|---|
| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |
| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |
| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |
| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure this per camera via the use_raw parameter (default: compressed).
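Because the node expects the cameras in a fixed index order, generating the per-camera remappings programmatically avoids ordering mistakes. The sketch below assumes remapping through the imageN and camera_infoN arguments of launch/vad_carla_tiny.launch.xml (listed under Launch files); `vad_camera_args` and its prefix parameter are hypothetical, and the CAM_* topic naming simply follows the launch defaults.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print imageN:= and camera_infoN:= launch arguments for
# the six cameras in the index order the node expects
# (0 FRONT, 1 BACK, 2 FRONT_LEFT, 3 BACK_LEFT, 4 FRONT_RIGHT, 5 BACK_RIGHT).
# With no argument, the output reproduces the launch-file defaults.
vad_camera_args() {
  local prefix="${1:-/sensing/camera}"
  local cams=(CAM_FRONT CAM_BACK CAM_FRONT_LEFT CAM_BACK_LEFT CAM_FRONT_RIGHT CAM_BACK_RIGHT)
  local i
  for i in "${!cams[@]}"; do
    echo "image${i}:=${prefix}/${cams[$i]}/image_raw"
    echo "camera_info${i}:=${prefix}/${cams[$i]}/camera_info"
  done
}
```

For example, `ros2 launch autoware_tensorrt_vad vad_carla_tiny.launch.xml $(vad_camera_args /my_vehicle/camera)` would pass all twelve remappings at once; /my_vehicle/camera is a placeholder prefix.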

Outputs

| Topic | Message Type | Description |
|---|---|---|
| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |
| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |
| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |
| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

```bash
colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad
```

Testing

Unit Tests

Unit tests are provided and can be run with:

```bash
colcon test --packages-select autoware_tensorrt_vad
colcon test-result --all
```

For verbose output:

```bash
colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+
```

CARLA Simulator Testing

First, set up CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

(The rest of this README, including the launch command, is truncated here; see the full file in the repository.)

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

- Merge remote-tracking branch 'origin/main' into humble

- feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <vborisw@gmail.com>

- fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988)

  fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR. The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

  - The package has no include/ directory (no public headers)
  - All headers are private in the src/ directory
  - This flag only affects public header installation paths
  - It can cause build failures in certain configurations

  This flag was the only usage in autoware_universe and provided no benefit.

- chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <vborisw@gmail.com>

- fix(autoware_tensorrt_vad): remove unused function `load_object_configuration` (#11909)

- fix(autoware_tensorrt_vad): remove unused function `load_map_configuration` (#11908)

- fix(autoware_tensorrt_vad): remove unused function `load_image_normalization` (#11910)

- fix: add cv_bridge.hpp support (#11873)

- Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

- chore: align version number

- Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

- refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

  - Created e2e/ directory for end-to-end components
  - Moved all autoware_tensorrt_vad files from planning/ to e2e/
  - Updated documentation references to reflect new location
  - Verified package builds successfully in new location

  This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

- Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |
|---|
| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

  VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration. This launch file runs the TensorRT-optimized VAD model trained on the Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear-axle origin). Key requirements:

  - 6 surround-view cameras in a specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT
  - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx
  - TensorRT engines are built automatically on first run (GPU-specific)

  For more information, see README.md.

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]
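The launch file description summarizes the frame convention as "Y->X, -X->Y". Read literally, a point in the Autoware frame is obtained from a VAD-frame point as x_aw = y_vad and y_aw = -x_vad. The sketch below applies only that axis mapping to a 2-D point; it assumes this reading of the description and does not apply the rear-axle origin shift, whose offset is not given in this README. `vad_to_autoware_xy` is a hypothetical helper for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a 2-D point from the VAD model frame to the
# Autoware frame using the axis mapping from the launch file description,
# interpreted as x_aw = y_vad, y_aw = -x_vad.
# Assumption: the rear-axle origin shift is NOT applied (offset not documented).
vad_to_autoware_xy() {
  local x_vad="$1" y_vad="$2"
  awk -v x="${x_vad}" -v y="${y_vad}" 'BEGIN { printf "%g %g\n", y, -x }'
}
```

For example, `vad_to_autoware_xy 1.5 10` prints `10 -1.5`: a point 10 m ahead along VAD's y axis ends up 10 m ahead along Autoware's x axis.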


Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

- VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration This launch file runs the TensorRT-optimized VAD model trained on Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear axis origin). Key requirements: - 6 surround-view cameras in specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx - TensorRT engines will be built automatically on first run (GPU-specific) For more information, see: README.md

-

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]

Messages

Services

Plugins

Recent questions tagged autoware_tensorrt_vad at Robotics Stack Exchange

Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

- VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration This launch file runs the TensorRT-optimized VAD model trained on Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear axis origin). Key requirements: - 6 surround-view cameras in specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx - TensorRT engines will be built automatically on first run (GPU-specific) For more information, see: README.md

-

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]

Messages

Services

Plugins

Recent questions tagged autoware_tensorrt_vad at Robotics Stack Exchange

Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

  VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration. This launch file runs the TensorRT-optimized VAD model trained on the Bench2Drive CARLA dataset. It uses the standard Autoware coordinate system (Y->X, -X->Y transformation, rear-axle origin). Key requirements:

  - 6 surround-view cameras, in this order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT
  - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx
  - TensorRT engines are built automatically on the first run (they are GPU-specific)

  For more information, see README.md.

Launch arguments:

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]
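The launch description requires three ONNX files under model_path and six cameras in a fixed order. A pre-flight check can catch both mistakes before TensorRT starts building engines. The sketch below is a minimal example and is not shipped with the package. The helper names `check_vad_models` and `vad_camera_args` are hypothetical. It also assumes that a custom camera setup publishes `image_raw` and `camera_info` under `<prefix>/<CAM_NAME>/`, mirroring the default topics listed above.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for vad_carla_tiny.launch.xml (not part of autoware_tensorrt_vad).

# Camera order required by the launch file: index i maps to image<i> / camera_info<i>.
VAD_CAMERAS=(CAM_FRONT CAM_BACK CAM_FRONT_LEFT CAM_BACK_LEFT CAM_FRONT_RIGHT CAM_BACK_RIGHT)

# Returns non-zero, listing missing files on stderr, unless all three ONNX models
# named in the launch description exist directly under the given model_path.
check_vad_models() {
  local model_path="$1"
  local missing=0
  local f
  for f in vad-carla-tiny_backbone.onnx vad-carla-tiny_head_no_prev.onnx vad-carla-tiny_head.onnx; do
    if [[ ! -f "${model_path}/${f}" ]]; then
      echo "missing: ${model_path}/${f}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Prints image<i>:= and camera_info<i>:= launch arguments in the required order,
# assuming the topic layout <prefix>/<CAM_NAME>/image_raw and <prefix>/<CAM_NAME>/camera_info.
vad_camera_args() {
  local prefix="${1%/}"  # drop a single trailing slash, if present
  local i
  for i in "${!VAD_CAMERAS[@]}"; do
    printf 'image%d:=%s/%s/image_raw\n' "$i" "$prefix" "${VAD_CAMERAS[$i]}"
    printf 'camera_info%d:=%s/%s/camera_info\n' "$i" "$prefix" "${VAD_CAMERAS[$i]}"
  done
}
```

With the prefix `/sensing/camera`, `vad_camera_args` reproduces the default image and camera_info arguments above. Assuming the workspace is built and sourced, a launch could look like this: `check_vad_models "$HOME/autoware_data/vad" && ros2 launch autoware_tensorrt_vad vad_carla_tiny.launch.xml model_path:="$HOME/autoware_data/vad" $(vad_camera_args /my_vehicle/camera)`. Here `/my_vehicle/camera` is a placeholder prefix.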


Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

- VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration This launch file runs the TensorRT-optimized VAD model trained on Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear axis origin). Key requirements: - 6 surround-view cameras in specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx - TensorRT engines will be built automatically on first run (GPU-specific) For more information, see: README.md

-

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]

Messages

Services

Plugins

Recent questions tagged autoware_tensorrt_vad at Robotics Stack Exchange

Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

- VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration This launch file runs the TensorRT-optimized VAD model trained on Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear axis origin). Key requirements: - 6 surround-view cameras in specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx - TensorRT engines will be built automatically on first run (GPU-specific) For more information, see: README.md

-

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]

Messages

Services

Plugins

Recent questions tagged autoware_tensorrt_vad at Robotics Stack Exchange

Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

-

chore: align version number

-

Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

-

refactor: migrate autoware_tensorrt_vad to e2e directory (#11730) Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

- Created e2e/ directory for end-to-end components

- Moved all autoware_tensorrt_vad files from planning/ to e2e/

- Updated documentation references to reflect new location

- Verified package builds successfully in new location This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

-

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |

|---|

| nlohmann-json-dev |

Dependant Packages

Launch files

- launch/vad_carla_tiny.launch.xml

- VAD (Vectorized Autonomous Driving) - CARLA Tiny Configuration This launch file runs the TensorRT-optimized VAD model trained on Bench2Drive CARLA dataset using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear axis origin). Key requirements: - 6 surround-view cameras in specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT - ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx - TensorRT engines will be built automatically on first run (GPU-specific) For more information, see: README.md

-

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]

Messages

Services

Plugins

Recent questions tagged autoware_tensorrt_vad at Robotics Stack Exchange

Package Summary

| Version | 0.50.0 |

| License | Apache License 2.0 |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Description | |

| Checkout URI | https://github.com/autowarefoundation/autoware_universe.git |

| VCS Type | git |

| VCS Version | main |

| Last Updated | 2026-02-25 |

| Dev Status | UNKNOWN |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Maintainers

- shintarotomie

- Max-Bin

- Yukihiro Saito

Authors

autoware_tensorrt_vad

Overview

The autoware_tensorrt_vad is a ROS 2 component that implements end-to-end autonomous driving using the TensorRT-optimized Vectorized Autonomous Driving (VAD) model. It leverages the VAD model (Jiang et al., 2023), optimized for deployment using NVIDIA’s DL4AGX TensorRT framework.

This module replaces traditional localization, perception, and planning modules with a single neural network, trained on the Bench2Drive benchmark (Jia et al., 2024) using CARLA simulation data. It integrates seamlessly with Autoware and is designed to work within the Autoware framework.

Features

- Monolithic End-to-End Architecture: Single neural network directly maps camera inputs to trajectories, replacing the entire traditional perception-planning pipeline with one unified model - no separate detection, tracking, prediction, or planning modules

- Multi-Camera Perception: Processes 6 surround-view cameras simultaneously for 360° awareness

- Vectorized Scene Representation: Efficient scene encoding using vector maps for reduced computational overhead

- Real-time TensorRT Inference: Optimized for embedded deployment with ~20ms inference time

- Integrated Perception Outputs: Generates both object predictions (with future trajectories) and map elements as auxiliary outputs

- Temporal Modeling: Leverages historical features for improved temporal consistency and prediction accuracy

Visualization

Lane Following Demo

Turn Right Demo

Parameters

Parameters can be set via configuration files:

- Deployment configuration (node and interface parameters):

config/vad_carla_tiny.param.yaml - Model architecture parameters:

vad-carla-tiny.param.json(downloaded with model to~/autoware_data/vad/v0.1/)

Inputs

| Topic | Message Type | Description |

|---|---|---|

| ~/input/image* | sensor_msgs/msg/Image* | Camera images 0-5: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT |

| ~/input/camera_info* | sensor_msgs/msg/CameraInfo | Camera calibration for cameras 0-5 |

| ~/input/kinematic_state | nav_msgs/msg/Odometry | Vehicle odometry |

| ~/input/acceleration | geometry_msgs/msg/AccelWithCovarianceStamped | Vehicle acceleration |

*Image transport supports both raw and compressed formats. Configure per-camera via use_raw parameter (default: compressed).

Outputs

| Topic | Message Type | Description |

|---|---|---|

| ~/output/trajectory | autoware_planning_msgs/msg/Trajectory | Selected ego trajectory |

| ~/output/trajectories | autoware_internal_planning_msgs/msg/CandidateTrajectories | All 6 candidate trajectories |

| ~/output/objects | autoware_perception_msgs/msg/PredictedObjects | Predicted objects with trajectories |

| ~/output/map | visualization_msgs/msg/MarkerArray | Predicted map elements |

Building

Build the package with colcon:

colcon build --symlink-install --cmake-args -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=Release --packages-up-to autoware_tensorrt_vad

Testing

Unit Tests

Unit tests are provided and can be run with:

colcon test --packages-select autoware_tensorrt_vad

colcon test-result --all

For verbose output:

colcon test --packages-select autoware_tensorrt_vad --event-handlers console_cohesion+

CARLA Simulator Testing

First, setup CARLA following the autoware_carla_interface instructions.

Then launch the E2E VAD system:

```bash

File truncated at 100 lines see the full file

Changelog for package autoware_tensorrt_vad

0.50.0 (2026-02-14)

-

Merge remote-tracking branch 'origin/main' into humble

-

feat(autoware_tensorrt_vad): update nvcc flags (#12044) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INST… (#11988) fix(autoware_tensorrt_vad): remove unnecessary USE_SCOPED_HEADER_INSTALL_DIR The USE_SCOPED_HEADER_INSTALL_DIR flag is unnecessary for this package because:

- The package has no include/ directory (no public headers)

- All headers are private in src/ directory

- This flag only affects public header installation paths

- It can cause build failures in certain configurations This flag was the only usage in autoware_universe and provided no benefit.

-

chore: remove autoware_launch exec_depend from e2e/autoware_tensorrt_vad (#11879) Co-authored-by: Max-Bin <<vborisw@gmail.com>>

-

fix(autoware_tensorrt_vad): remove unused function [load_object_configuration]{.title-ref} (#11909)

-

fix(autoware_tensorrt_vad): remove unused function [load_map_configuration]{.title-ref} (#11908)

-

fix(autoware_tensorrt_vad): remove unused function [load_image_normalization]{.title-ref} (#11910)

-

fix: add cv_bridge.hpp support (#11873)

-

Contributors: Amadeusz Szymko, Max-Bin, Mete Fatih Cırıt, Ryohsuke Mitsudome, Ryuta Kambe, Taeseung Sohn

0.49.0 (2025-12-30)

- chore: align version number

- Merge remote-tracking branch 'origin/main' into prepare-0.49.0-changelog

- refactor: migrate autoware_tensorrt_vad to e2e directory (#11730)

  Move autoware_tensorrt_vad package from planning/ to e2e/ directory to align with AWF's organization of end-to-end learning-based components. Changes:

  - Created e2e/ directory for end-to-end components
  - Moved all autoware_tensorrt_vad files from planning/ to e2e/
  - Updated documentation references to reflect new location
  - Verified package builds successfully in new location

  This follows the pattern established in the AWF main repository: https://github.com/autowarefoundation/autoware_universe/tree/main/e2e

Contributors: Max-Bin, Ryohsuke Mitsudome

Package Dependencies

System Dependencies

| Name |
|---|
| nlohmann-json-dev |


Launch files

- launch/vad_carla_tiny.launch.xml

VAD (Vectorized Autonomous Driving), CARLA Tiny configuration. This launch file runs the TensorRT-optimized VAD model trained on the Bench2Drive CARLA dataset, using the standard Autoware coordinate system (Y->X, -X->Y transformation, rear-axle origin). Key requirements:

- 6 surround-view cameras in a specific order: FRONT, BACK, FRONT_LEFT, BACK_LEFT, FRONT_RIGHT, BACK_RIGHT

- ONNX models in model_path: vad-carla-tiny_backbone.onnx, vad-carla-tiny_head_no_prev.onnx, vad-carla-tiny_head.onnx

- TensorRT engines are built automatically on first run (GPU-specific)

For more information, see README.md.

Launch arguments:

- log_level [default: info]

- use_sim_time [default: true]

- data_path [default: $(env HOME)/autoware_data]

- model_path [default: $(var data_path)/vad]

- plugins_path [default: $(find-pkg-share autoware_tensorrt_plugins)]

- camera_info0 [default: /sensing/camera/CAM_FRONT/camera_info]

- camera_info1 [default: /sensing/camera/CAM_BACK/camera_info]

- camera_info2 [default: /sensing/camera/CAM_FRONT_LEFT/camera_info]

- camera_info3 [default: /sensing/camera/CAM_BACK_LEFT/camera_info]

- camera_info4 [default: /sensing/camera/CAM_FRONT_RIGHT/camera_info]

- camera_info5 [default: /sensing/camera/CAM_BACK_RIGHT/camera_info]

- image0 [default: /sensing/camera/CAM_FRONT/image_raw]

- image1 [default: /sensing/camera/CAM_BACK/image_raw]

- image2 [default: /sensing/camera/CAM_FRONT_LEFT/image_raw]

- image3 [default: /sensing/camera/CAM_BACK_LEFT/image_raw]

- image4 [default: /sensing/camera/CAM_FRONT_RIGHT/image_raw]

- image5 [default: /sensing/camera/CAM_BACK_RIGHT/image_raw]

- kinematic_state [default: /localization/kinematic_state]

- acceleration [default: /localization/acceleration]

- output_objects [default: /perception/object_recognition/objects]

- output_trajectory [default: /planning/scenario_planning/trajectory]

- output_candidate_trajectories [default: /planning/scenario_planning/vad/candidate_trajectories]

- output_map_points [default: /perception/vad/map_points]
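The launch file expects the six cameras in a fixed order, with index N feeding both the imageN and camera_infoN arguments. The minimal Python sketch below shows how a vehicle's own camera topics could be turned into ordered `name:=value` launch arguments. `build_camera_args` and the position-to-prefix dictionary are hypothetical. The sketch also assumes that each camera publishes `<prefix>/image_raw` and `<prefix>/camera_info`, following the same layout as the default topics above.

```python
from __future__ import annotations

# Camera positions in the order the launch file requires:
# index N feeds the imageN and camera_infoN launch arguments.
CAMERA_ORDER = ("FRONT", "BACK", "FRONT_LEFT", "BACK_LEFT", "FRONT_RIGHT", "BACK_RIGHT")


def build_camera_args(topic_prefixes: dict[str, str]) -> list[str]:
    """Turn a {position: topic prefix} mapping into ordered 'name:=value' arguments.

    Assumes every camera publishes <prefix>/image_raw and <prefix>/camera_info,
    mirroring the layout of the default topics (an assumption, not a package API).
    """
    missing = [pos for pos in CAMERA_ORDER if pos not in topic_prefixes]
    if missing:
        raise ValueError(f"missing camera positions: {missing}")

    args: list[str] = []
    for index, position in enumerate(CAMERA_ORDER):
        prefix = topic_prefixes[position].rstrip("/")
        args.append(f"image{index}:={prefix}/image_raw")
        args.append(f"camera_info{index}:={prefix}/camera_info")
    return args


if __name__ == "__main__":
    # Rebuild the launch file's default camera topics, then print a ros2 launch command line.
    defaults = {pos: f"/sensing/camera/CAM_{pos}" for pos in CAMERA_ORDER}
    command = ["ros2", "launch", "autoware_tensorrt_vad", "vad_carla_tiny.launch.xml"]
    print(" ".join(command + build_camera_args(defaults)))
```

Keeping the required order in a single tuple avoids hand-writing twelve index-to-camera pairings, which is where a swapped camera would most easily slip in.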
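The launch file description says the model is run in the standard Autoware coordinate system through a "Y->X, -X->Y" transformation with a rear-axle origin. The minimal Python sketch below assumes a particular reading of that notation. Under this reading, the model's +Y axis becomes Autoware's +X (forward) axis and the model's -X axis becomes Autoware's +Y (left) axis. This gives a planar 90° rotation. Both function names are hypothetical. The shift of the origin to the rear axle is left out because its offset is not given here.

```python
from __future__ import annotations


def vad_to_autoware(x_vad: float, y_vad: float) -> tuple[float, float]:
    """Rotate a planar point from the model frame into Autoware axes.

    Assumed reading of "Y->X, -X->Y": model +Y becomes Autoware +X (forward),
    model -X becomes Autoware +Y (left). The rear-axle origin shift is NOT modeled.
    """
    return y_vad, -x_vad


def autoware_to_vad(x_aw: float, y_aw: float) -> tuple[float, float]:
    """Inverse of vad_to_autoware: undo the 90-degree rotation."""
    return -y_aw, x_aw


if __name__ == "__main__":
    # Model-frame point (1, 2) under the assumed mapping.
    print(vad_to_autoware(1.0, 2.0))  # (2.0, -1.0)
```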

